doi: 10.56294/dm2023408

ORIGINAL

Enhanced brain tumor segmentation and size estimation in MRI samples using hybrid optimization


Ayesha Agrawal1 *, Vinod Maan1 *

1Mody University of Science & Technology, Computer Science & Engineering. Lakshmangarh, India.

Cite as: Agrawal A, Maan V. Enhanced Brain Tumor Segmentation and Size Estimation in MRI Samples using Hybrid Optimization. Data and Metadata. 2024; 3:408. https://doi.org/10.56294/dm2023408

Submitted: 15-01-2024 Revised: 17-04-2024 Accepted: 09-07-2024 Published: 10-07-2024

Editor: Adrián Alejandro Vitón Castillo

ABSTRACT

Brain tumor segmentation in medical imaging has long been challenged by the inherent complexity and variability of brain structures. Traditional segmentation methods often struggle to differentiate accurately between the brain’s tissue types, such as white matter, grey matter and cerebrospinal fluid. This leads to suboptimal tumor identification and delineation. These limitations call for more advanced and precise segmentation techniques to improve diagnostic accuracy and treatment planning. In response, this study introduces a novel segmentation approach that combines Grey Wolf Optimization (GWO) and Cuckoo Search (CS) within a Fuzzy C-Means (FCM) framework. The integration of GWO and CS is designed to exploit their respective strengths in optimizing the segmentation of brain tissues. The hybrid approach was tested on multiple Magnetic Resonance Imaging (MRI) datasets and showed significant improvements over existing segmentation methods: 4,9 % higher accuracy, 3,5 % higher precision, 4,5 % higher recall, 3,2 % lower delay and 2,5 % higher specificity in tumor segmentation. Because it achieves higher precision and accuracy in brain tumor segmentation, the proposed method can substantially aid early diagnosis and accurate staging of brain tumors. This can lead to more effective treatment planning and improved patient outcomes. Furthermore, the integration of GWO and CS within the FCM process sets a new benchmark in medical imaging and paves the way for future investigation in the field.

Keywords: Brain Tumor Segmentation; Grey Wolf Optimization; Cuckoo Search Algorithm; Fuzzy C-Means; Magnetic Resonance Imaging.


INTRODUCTION

In medical imaging, magnetic resonance imaging (MRI) is of the utmost significance for brain tumor diagnosis and treatment planning. MRI offers unparalleled visualization of soft tissues, which is crucial for identifying and delineating brain tumors. However, accurate segmentation of these tumors from MRI scans remains a significant challenge. Tumors vary widely in shape, size and location, and their intensity profiles are similar to those of normal brain tissue. The effectiveness of treatment and the prognostic evaluation depend largely on the precision of tumor segmentation, which highlights the need for advanced and reliable segmentation techniques.

Traditional techniques for segmentation, such as region growing, thresholding and manual segmentation, have been employed extensively. However, they often fall short in terms of accuracy and efficiency, particularly in complex cases involving overlapping tissues or irregular tumor boundaries. Automated and semi-automated methods, including various machine learning algorithms, have been developed to overcome these challenges. Among these, the Fuzzy C-Means (FCM) algorithm has gained prominence due to its effectiveness in handling the uncertainties and ambiguities inherent in MRI data. Nonetheless, FCM’s performance can be hindered by its sensitivity to noise and its tendency to converge to local optima.

This paper offers a novel approach to overcoming these limitations by integrating the Grey Wolf Optimization (GWO) and Cuckoo Search (CS) algorithms into the FCM framework. GWO is inspired by the social hierarchy and hunting behavior of grey wolves. It excels at global optimization and has shown promise in various engineering problems; here it is used to optimize the regions of interest (RoIs) for grey and white matter in brain MRI scans. The CS algorithm is inspired by the brood parasitism of cuckoo birds and is known for its efficient search capabilities, particularly in complex, multimodal landscapes. In this context, it optimizes the segmentation of cerebrospinal fluid regions, which is crucial for distinguishing pathological tissue from normal brain structures.

The synergy of GWO and CS within an FCM framework aims to exploit the strengths of each method. In doing so, it addresses the shortcomings of traditional and existing automated segmentation techniques. This hybrid approach is designed to improve the accuracy, specificity and overall reliability of brain tumor segmentation.

The integration of these algorithms represents a novel contribution to the field of medical image analysis, particularly for brain tumor segmentation. The research not only contributes to the advancement of image processing techniques but also has significant clinical implications. Accurate and efficient segmentation of brain tumors from MRI data is crucial for effective treatment planning, impacting patient outcomes. The proposed method, therefore, holds the potential to become an essential tool in the diagnostic and therapeutic processes for brain tumors, ultimately contributing to the enhancement of patient care and treatment success.

Literature review

A literature review of recent studies reveals a trend towards the development of sophisticated algorithms and models designed to enhance the segmentation process, particularly in magnetic resonance imaging (MRI).

Soomro et al.(1) provide a comprehensive review of image segmentation techniques for MR brain tumor detection, emphasizing the role of machine learning in this field. This study sets the stage for understanding the current landscape and challenges in brain tumor segmentation. Zhuang et al.(2) contribute to this field by developing a network that incorporates volumetric feature alignment with 3D cross-modality feature interaction, which shows promise in improving brain tumor and tissue segmentation. This approach highlights the growing interest in 3D imaging techniques and their potential in medical imaging.

Yan et al.(3) introduced SEResU-Net for multimodal brain tumor segmentation. This model exemplifies the trend towards integrating different imaging modalities to enhance segmentation accuracy. Zhao et al.(4) extended this exploration with uncertainty-aware multi-dimensional mutual learning for brain and brain tumor segmentation. Their work addresses uncertainty in medical imaging, a critical aspect of diagnostic accuracy.

In a similar vein, Zaitoon and Syed(5) developed the RU-Net2+ algorithm, which not only segments brain tumors accurately but also predicts survival rates. This dual functionality underscores the evolving role of machine learning in providing comprehensive diagnostic insights. Wisaeng(6) improved the U-Net++ model with a deep supervision mechanism (U-Net++DSM), demonstrating continuous innovation in model architectures for brain tumor segmentation.

Ding et al.(7) proposed MVFusFra, a multi-view dynamic fusion framework for multimodal brain tumor segmentation. This framework reflects the increasing sophistication of segmentation models, which aim to leverage multiple data sources and views for improved performance. Ilyas et al.(8) introduced Hybrid-DANet, an encoder-decoder model with hybrid weights alignment and a multi-dilated attention network. This work reflects the growing interest in attention mechanisms for deep-learning-based segmentation.

Chen et al.(9) explored transformers in medical imaging with WS-MTST, a weakly supervised model for multi-label brain tumor segmentation. Incorporating transformers, a relatively recent development in deep learning, into medical image segmentation is an important advance in the field. Alagarsamy et al.(10) combined an interval type-II fuzzy technique with the artificial bee colony algorithm for automated brain tumor segmentation. Their work highlights the integration of swarm-based optimization and fuzzy logic in this domain.

Magadza and Viriri(11) explored partial depthwise separable convolutions for brain tumor segmentation, a method that improves computational efficiency. Ottom et al.(12) developed Znet, a deep learning approach to 2D MRI brain tumor segmentation. Their work shows the continued relevance of 2D imaging in conjunction with advanced deep learning methods.

Ramprasad et al.(13) introduced SBTC-Net, which combines black widow and genetic optimization for secured brain tumor segmentation and classification in the Internet of Medical Things (IoMT). This study reflects the growing importance of security and optimization in medical data processing. Jabbar et al.(14) developed a hybrid Caps-VGGNet model for brain tumor detection and multi-grade segmentation, blending different neural network architectures for enhanced performance.

Rahimpour et al.(15) used cross-modal distillation to improve brain tumor segmentation when MRI sequences are missing. This approach addresses a frequent challenge in medical imaging, incomplete data, and demonstrates how machine learning can compensate for such limitations.

Yang et al.(16) introduced the Flexible Fusion Network for multi-modal brain tumor segmentation. This study highlights the importance of integrating multiple imaging modalities to improve segmentation accuracy, a trend increasingly evident in recent research. Similarly, Rajendran et al.(17) applied deep learning to the automated segmentation of brain tumors in MRI scans. Their work reflects the growing reliance on automation in medical image analysis, which reduces the need for manual intervention and thereby increases efficiency.

Shah et al.(18) detected and localized abnormalities in MRI scans using a semi-Bayesian ensemble voting mechanism combined with channel attention and convolutional auto-encoders. Their work illustrates the innovative use of attention mechanisms and ensemble methods to improve the precision of abnormality detection in brain scans. Mallampati et al.(19) focused on brain tumor detection using 3D-UNet segmentation features in conjunction with a hybrid machine learning approach. This approach underscores the synergy between traditional machine learning techniques and modern deep learning architectures.

Magadza et al.(20) presented an efficient nnU-Net for brain tumor segmentation. The nnU-Net showcases the continuous evolution and optimization of deep learning models tailored for medical imaging. Solanki et al.(21) provided an overview of brain tumor detection and classification using intelligent techniques, highlighting the diverse range of computational methods employed in this domain.

Hou et al.(22) developed MFD-Net, a modality-fusion diffractive network for segmenting multimodal brain tumor images. This study represents an advanced approach to the complexities inherent in multimodal medical imaging data. Younis et al.(23) performed a comprehensive survey of deep learning methods for brain tumor classification. Their work offers valuable insights into state-of-the-art methods and their effectiveness.

Beyond brain imaging, Zheng et al.(24) investigated automated liver tumor segmentation in contrast-enhanced MRI using 4D information. Their deep learning model, based on 3D convolution and convolutional LSTM, demonstrates the applicability of these techniques to other areas of medical imaging. Lastly, Jalalifar et al.(25) focused on automated outcome evaluation of radiation therapy for brain metastases using longitudinal MRI segmentation. This study highlights the potential of deep learning for monitoring treatment progress and outcomes, an area of growing importance in personalized medicine.

In summary, each of these methods has its merits, but each also has inherent limitations. The proposed research addresses these limitations by integrating Grey Wolf Optimization and Cuckoo Search with the FCM technique. The result is a novel approach that improves the precision of brain tumor segmentation in MRI samples.(26)

METHOD

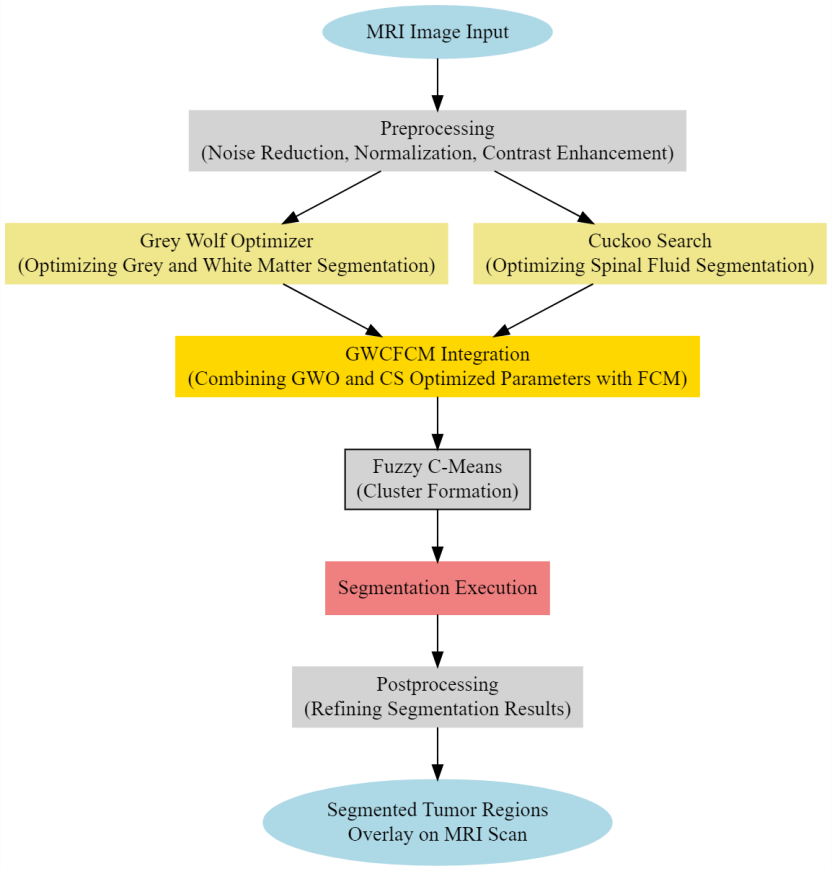

To overcome the issues of low segmentation efficiency and high complexity, the proposed model combines the strengths of Grey Wolf Optimization (GWO), Cuckoo Search (CS) and Fuzzy C-Means (FCM). As shown in figure 1, its architecture integrates GWO and CS to optimize the segmentation of brain tissues.

Figure 1. Proposed structure of the segmentation model

Fuzzy C-Means, a soft clustering approach and the core component of the model, is governed by the following operations:

• The objective function of FCM is defined by formula 1.

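$$J_m = \sum_{i=1}^{N} \sum_{j=1}^{c} u(i,j)^{m} \, \lVert x(i) - v(j) \rVert^{2} \qquad (1)$$

Where u(i,j) is the degree of membership of pixel x(i) in cluster j, v(j) is the centroid of cluster j, N is the number of pixels, c is the number of clusters, and m > 1 is the fuzziness index of the clustering process.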

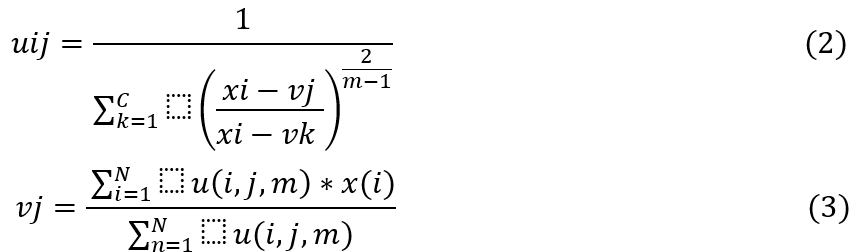

• The memberships u(i,j) and the cluster centers v(j) are updated using formula 2 and formula 3.
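Formulas 2 and 3 survive only as an image, so the following is a minimal sketch of plain FCM on one-dimensional pixel intensities. It assumes the standard Bezdek update rules: each membership is u(i,j) = 1 / Σ_k (‖x(i) − v(j)‖ / ‖x(i) − v(k)‖)^(2/(m−1)), and each centroid is v(j) = Σ_i u(i,j)^m x(i) / Σ_i u(i,j)^m. The function name `fcm`, the evenly spaced initial centroids and the fixed stopping tolerance are illustrative assumptions. In the proposed model the tolerance is set by the CS process. The defaults (m = 2, 150 iterations, tolerance 10⁻⁵) mirror the experimental settings reported for FCM.

```python
def fcm(pixels, c=3, m=2.0, max_iter=150, tol=1e-5):
    """Sketch of standard Fuzzy C-Means on 1-D intensities.

    Returns (centers, memberships, objective), where memberships[i][j] is u(i,j).
    """
    if m <= 1.0:
        raise ValueError("fuzziness index m must be greater than 1")
    lo, hi = min(pixels), max(pixels)
    # Assumed initialisation: centroids spread evenly over the intensity range.
    centers = [lo + (hi - lo) * (j + 0.5) / c for j in range(c)]
    eps = 1e-12  # avoids division by zero when a pixel sits exactly on a centroid
    prev_obj = float("inf")
    u, obj = [], prev_obj
    for _ in range(max_iter):
        # Membership update: u(i,j) = 1 / sum_k (d_ij / d_ik)^(2/(m-1))
        u = []
        for x in pixels:
            d = [abs(x - v) + eps for v in centers]
            u.append([1.0 / sum((d[j] / d[k]) ** (2.0 / (m - 1.0)) for k in range(c))
                      for j in range(c)])
        # Centroid update: v(j) = sum_i u(i,j)^m x(i) / sum_i u(i,j)^m
        centers = []
        for j in range(c):
            w = [row[j] ** m for row in u]
            centers.append(sum(wi * x for wi, x in zip(w, pixels)) / sum(w))
        # Objective J_m (formula 1) with the new memberships and centroids
        obj = sum(row[j] ** m * (x - centers[j]) ** 2
                  for x, row in zip(pixels, u) for j in range(c))
        # Stop once the improvement in J_m falls below the tolerance
        if abs(prev_obj - obj) < tol:
            break
        prev_obj = obj
    return centers, u, obj
```

Consider three well-separated intensity groups, for example `fcm([10, 12, 11, 50, 52, 51, 90, 91, 89], c=3)`. The centroids settle close to 11, 51 and 90, and each membership row sums to 1.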

The optimization process continues until the maximum number of iterations is reached or the improvement in Jm falls below a predefined threshold, which is set by the CS process. The GWO model improves segmentation efficiency by tuning the number of clusters c and the fuzziness index m, which together control the behavior of the segmentation process. Using these values, FCM segments the MRIs, and the fitness of each wolf is estimated using formula 4.

![]()

Where P, A and R are the precision, accuracy and recall of the segmentation process. Their evaluation is detailed in the next section.

Based on the fitness of all Wolves, the model estimates an Iterative Fitness Threshold via formula 5.

![]()

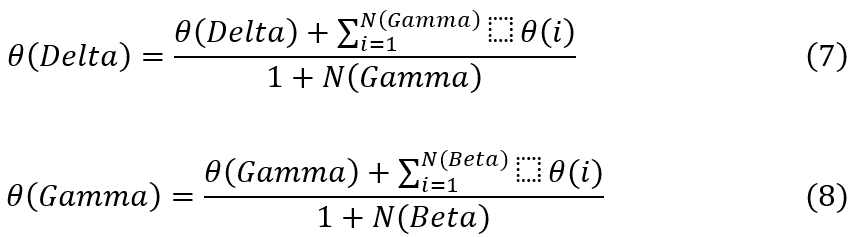

In this context, LW represents the learning rate of the GWO process. Wolves with fw > 2*fth are labelled ‘Alpha’, while those with fw > fth are labelled ‘Beta’. Their configurations are updated using formula 6.

![]()

N(Alpha) is the number of Alpha wolves. Similarly, wolves with fw < 2*fth are labelled ‘Delta’, and their fitness is updated using formula 7. All other wolves are marked as ‘Gamma’, and their fitness is updated using formula 8.
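The paper’s own update rules (formulas 5 to 8) cannot be recovered from this text. These include the Alpha/Beta/Delta/Gamma thresholds and the learning rate LW. For orientation, the sketch below shows the canonical Grey Wolf Optimizer of Mirjalili et al. instead, in which the three best wolves guide the rest of the pack. This is a swapped-in, generic minimiser, not the authors’ method. The function name `gwo_minimize`, the box bounds and the best-so-far leader memory are assumptions. The defaults of 50 wolves and 100 iterations match the experimental settings.

```python
import random


def gwo_minimize(f, bounds, n_wolves=50, n_iter=100, seed=0):
    """Canonical Grey Wolf Optimizer (minimisation) over box bounds.

    f: objective mapping a position (list of floats) to a number.
    bounds: list of (lo, hi) pairs, one per dimension.
    """
    rng = random.Random(seed)
    dim = len(bounds)

    def clip(pos):
        # Keep every coordinate inside its [lo, hi] bound.
        return [min(max(p, lo), hi) for p, (lo, hi) in zip(pos, bounds)]

    wolves = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_wolves)]
    leaders = []  # best-so-far alpha, beta and delta positions
    for t in range(n_iter):
        # Rank the pack together with previous leaders so the best never degrades.
        leaders = sorted(wolves + leaders, key=f)[:3]
        a = 2.0 - 2.0 * t / n_iter  # decreases linearly from 2 towards 0
        next_pack = []
        for x in wolves:
            pos = []
            for d in range(dim):
                guided = []
                for leader in leaders:
                    A = 2.0 * a * rng.random() - a
                    C = 2.0 * rng.random()
                    D = abs(C * leader[d] - x[d])
                    guided.append(leader[d] - A * D)
                # New coordinate is the mean of the three leader-guided moves.
                pos.append(sum(guided) / len(guided))
            next_pack.append(clip(pos))
        wolves = next_pack
    best = min(wolves + leaders, key=f)
    return best, f(best)
```

To tune FCM as the text describes, a wolf’s position could hypothetically encode (c, m), with bounds such as [(2, 6), (1.1, 3.0)] and c rounded to an integer. The function f would then return the negative of a segmentation-quality score. That encoding is an illustration only.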

These updated configurations are used in the next iteration. The process is repeated for NI iterations, and the configuration of the wolf with the highest fitness is selected for the segmentation process. Similarly, the Cuckoo Search process is used to update the segmentation thresholds. It initially generates NC cuckoos. Based on each cuckoo’s configuration, the model segments the MRI images and estimates cuckoo fitness using formula 9.

![]()

Where AUC and SP represent the area under the curve and the specificity of the segmentation process, while D represents the delay of the segmentation process. Based on these fitness levels, the model estimates the cuckoo fitness threshold using formula 10.

![]()

Here, LC represents the learning rate of the Cuckoo Search process. Cuckoos with fc > fc(th) are passed to the next iteration. The others are discarded and replaced with new configurations generated using formula 11 and formula 12. This is repeated for NI iterations, and the cuckoo with the highest fitness is identified. Its configuration is used to finalize the segmentation.
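The cuckoo-specific formulas 9 to 12 are not recoverable here either. As a reference point, the sketch below shows the canonical Cuckoo Search of Yang and Deb as a generic minimiser. It is a substitute for the paper’s variant, not a reproduction of it. New eggs come from Lévy flights (step lengths drawn with Mantegna’s algorithm). Each component of a nest is then rebuilt with the discovery probability pa, and a nest is replaced only when the new egg is better. The function names, the step scale and the box bounds are assumptions. The defaults of 25 nests and pa = 0.25 follow the experimental settings.

```python
import math
import random


def levy_step(rng, beta=1.5):
    """Draw one Levy-distributed step length using Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma_u = (num / den) ** (1 / beta)
    u = rng.gauss(0.0, sigma_u)
    v = rng.gauss(0.0, 1.0)
    return u / max(abs(v), 1e-12) ** (1 / beta)


def cuckoo_minimize(f, bounds, n_nests=25, pa=0.25, n_iter=100, step=0.01, seed=0):
    """Canonical Cuckoo Search minimising f over box bounds."""
    rng = random.Random(seed)

    def clip(pos):
        return [min(max(p, lo), hi) for p, (lo, hi) in zip(pos, bounds)]

    nests = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_nests)]
    fit = [f(x) for x in nests]
    for _ in range(n_iter):
        best = nests[min(range(n_nests), key=fit.__getitem__)]
        # 1) Levy flights scaled by each nest's distance to the current best.
        for i, x in enumerate(nests):
            egg = clip([xi + step * levy_step(rng) * (xi - bi) * rng.gauss(0.0, 1.0)
                        for xi, bi in zip(x, best)])
            fe = f(egg)
            if fe < fit[i]:  # greedy replacement
                nests[i], fit[i] = egg, fe
        # 2) With probability pa a component is discovered and rebuilt by a
        #    random walk along the difference of two randomly chosen nests.
        for i, x in enumerate(nests):
            j, k = rng.randrange(n_nests), rng.randrange(n_nests)
            egg = clip([xi + rng.random() * (nests[j][d] - nests[k][d])
                        if rng.random() < pa else xi
                        for d, xi in enumerate(x)])
            fe = f(egg)
            if fe < fit[i]:
                nests[i], fit[i] = egg, fe
    b = min(range(n_nests), key=fit.__getitem__)
    return nests[b], fit[b]
```

In the proposed pipeline, the objective would presumably be built from formula 9 (AUC, specificity and delay), negated for minimisation. Under that assumption, any function of a threshold vector can be plugged in as f.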

After segmentation, the next step is to measure the tumor size. Tumor size is crucial for choosing a treatment and tracking its progress: larger tumors may be harder to remove fully or to manage, which affects the chances of successful treatment. Accurate measurement of tumor size therefore informs clinical decisions and helps healthcare providers tailor treatment plans to individual patients. The size of a brain tumor is determined from the segmented region by counting the pixels in the tumor region and converting this count into a physical area. The tumor size is given by formula 11.

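$$\text{Tumor size (\%)} = \frac{N_{\text{tumor}}}{N_{\text{total}}} \times 100 \qquad (11)$$

Where N_tumor is the number of pixels in the segmented tumor region and N_total is the number of pixels in the brain region (or in the whole image).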

After segmenting the tumor region, determine the tumor’s area in pixels or square units by counting the pixels within the segmented region. Calculate the overall area of the brain or the complete image by tallying the total pixels in the image. To calculate the percentage size of the tumor, divide the area of the segmented tumor by the total area of the brain (or image) and then multiply the result by 100.
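The counting procedure described above can be sketched as follows. It takes binary masks given as nested lists (1 = tumor). The function name and the optional `pixel_spacing_mm` argument are illustrative assumptions. The physical area is only meaningful when the scanner’s pixel spacing is known, for example from a DICOM header. Plain image exports usually do not carry it.

```python
def tumor_size(tumor_mask, brain_mask=None, pixel_spacing_mm=None):
    """Return (tumor pixel count, tumor size in %, tumor area in mm^2 or None).

    tumor_mask, brain_mask: 2-D lists of 0/1 values (or booleans).
    pixel_spacing_mm: optional (row_spacing, col_spacing) in millimetres.
    """
    tumor_px = sum(1 for row in tumor_mask for v in row if v)
    if brain_mask is None:
        # Fall back to the whole image as the reference area.
        total_px = sum(len(row) for row in tumor_mask)
    else:
        total_px = sum(1 for row in brain_mask for v in row if v)
    if total_px == 0:
        raise ValueError("reference region contains no pixels")
    percent = 100.0 * tumor_px / total_px  # formula 11
    area_mm2 = None
    if pixel_spacing_mm is not None:
        dy, dx = pixel_spacing_mm
        area_mm2 = tumor_px * dy * dx  # each pixel covers dy * dx mm^2
    return tumor_px, percent, area_mm2


mask = [[0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 1, 0],
        [0, 0, 0, 0]]
print(tumor_size(mask))                              # (4, 25.0, None)
print(tumor_size(mask, pixel_spacing_mm=(0.5, 0.5)))  # (4, 25.0, 1.0)
```

Passing a brain mask instead of using the whole image changes only the denominator. This matches the “brain (or image)” choice in the text.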

The efficacy of this approach was evaluated using a variety of metrics and compared with other approaches, as described in the next section.

The GWCFCM model, the proposed brain tumor segmentation method, integrates Grey Wolf Optimization (GWO) and Cuckoo Search (CS) within a Fuzzy C-Means (FCM) framework. It is specifically designed to improve the segmentation of brain tumors in clinical MRI samples.

RESULTS AND DISCUSSION

Following is a detailed description of the experimental setup:

Dataset Preparation

• MRI Data Sources: the dataset of brain tumor MRI scans used in this study is publicly available on Kaggle. It is divided into four classes: no tumor, glioma, meningioma and pituitary tumor.

• Image Preprocessing: the MRI scans were preprocessed for noise reduction, normalization, and contrast enhancement to improve image quality and consistency.

• Input Parameters: sample values for the input parameters were as follows:

1. Population Size for GWO: 50

2. Number of Iterations for GWO: 100

3. Discovery Rate for CS: 0,25

4. Number of Nests for CS: 25

5. Fuzziness Index for FCM: 2,0

6. Maximum Iterations for FCM: 150

7. Error Tolerance for FCM: 1e-5

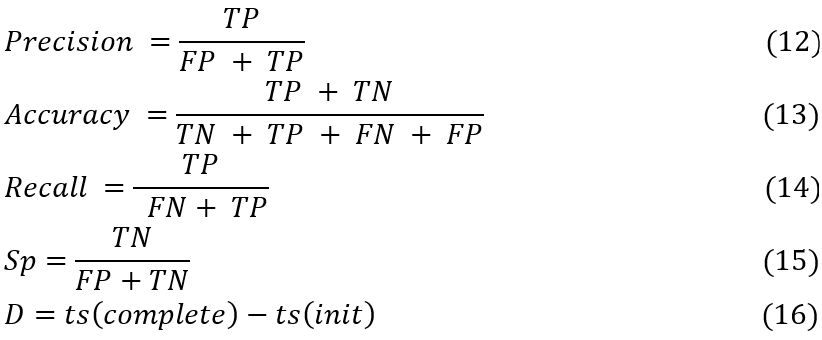

Based on this setup, formula 12, formula 13 and formula 14 were used to evaluate the precision (P), accuracy (A) and recall (R) of the proposed technique, while formula 15 and formula 16 were used to evaluate specificity (Sp) and delay:

In this context, ts denotes the timestamps used to measure delay, and TP, TN, FP and FN are the four types of test-set predictions:

• TP (true positives) is the number of tumor pixels correctly predicted as tumor.
• TN (true negatives) is the number of non-tumor pixels correctly predicted as non-tumor.
• FP (false positives) is the number of non-tumor pixels wrongly predicted as tumor.
• FN (false negatives) is the number of tumor pixels wrongly predicted as non-tumor.

To obtain TP, TN, FP and FN, the predicted probability of each brain tumor pixel was compared with the actual status of the samples in the test dataset. The proposed model was compared against the SEResU-Net,(3) U-Net++DSM(6) and Caps VGGNet(14) methods.
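Formulas 12 to 16 survive only as an image. The sketch below therefore assumes the standard confusion-matrix definitions:

• precision = TP / (TP + FP)
• accuracy = (TP + TN) / (TP + TN + FP + FN)
• recall = TP / (TP + FN)
• specificity = TN / (TN + FP)

It also assumes that delay is the difference between two timestamps taken around the segmentation call. The function names are hypothetical, the masks are flattened lists of 0/1 pixels, and AUC is not covered.

```python
import time


def confusion_counts(pred, truth):
    """Count TP, TN, FP, FN over flattened binary masks (1 = tumor)."""
    tp = tn = fp = fn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1
        elif p:
            fp += 1
        elif t:
            fn += 1
        else:
            tn += 1
    return tp, tn, fp, fn


def _safe_div(num, den):
    # Returns 0.0 instead of raising when a class is absent.
    return num / den if den else 0.0


def segmentation_metrics(pred, truth):
    tp, tn, fp, fn = confusion_counts(pred, truth)
    return {
        "precision": _safe_div(tp, tp + fp),
        "accuracy": _safe_div(tp + tn, tp + tn + fp + fn),
        "recall": _safe_div(tp, tp + fn),
        "specificity": _safe_div(tn, tn + fp),
    }


def timed_segmentation(segment, image):
    """Run segment(image); return (mask, delay in ms) from two timestamps."""
    t_start = time.perf_counter()
    mask = segment(image)
    t_end = time.perf_counter()
    return mask, (t_end - t_start) * 1000.0
```

For example, take `truth = [1, 1, 1, 0, 0, 0, 0, 0]` and `pred = [1, 1, 0, 1, 0, 0, 0, 0]`. This gives TP = 2, TN = 4, FP = 1 and FN = 1. Precision and recall are therefore 2/3, accuracy is 0,75 and specificity is 0,8.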

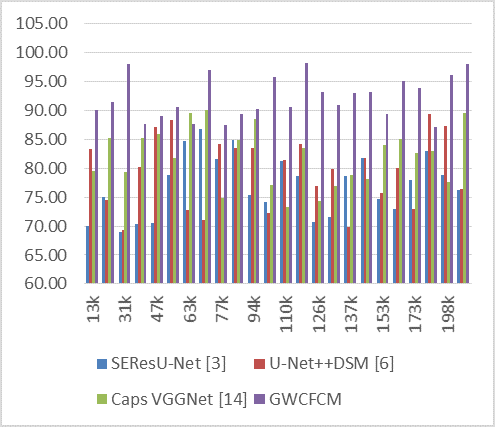

Precision, a key metric in medical imaging, reflects the model’s ability to accurately segment tumor regions. Higher precision indicates a greater ability of the model to correctly identify tumor regions without falsely labeling non-tumor areas as tumors. Figure 2 displays the precision levels of segmentation that were determined from these assessments.

Figure 2. Observed Precision for segmentation

For instance, at 13k NTS, GWCFCM achieves a precision of 90,11 %, significantly higher than its counterparts. This trend of higher precision with GWCFCM is observed consistently across the dataset range. At 31k NTS, the GWCFCM’s precision peaks at an impressive 98,12 %, while the other models hover around 69-79 %.

The impact of higher precision, as demonstrated by GWCFCM, is profound in the context of medical imaging and brain tumor diagnosis. Higher precision means fewer false positives, leading to more accurate diagnoses and better-informed treatment plans. It reduces the likelihood of unnecessary treatments or interventions caused by incorrect tumor identification. In clinical practice, this translates to improved patient care and outcomes, as well as more efficient use of medical resources.

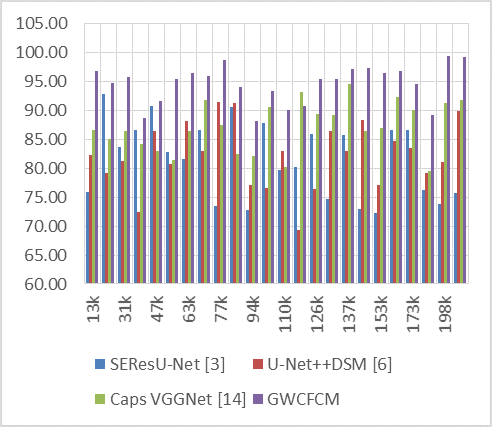

Likewise, the accuracy of the proposed model is compared with that of the other models in figure 3. In the simulated data, the GWCFCM model consistently shows higher accuracy than the other models across different numbers of test image samples (NTS).

Figure 3. Observed Accuracy for segmentation

For instance, at the 13k NTS, GWCFCM achieves an accuracy of 96,84 %, which is significantly higher than its counterparts. This trend of GWCFCM outperforming others in terms of accuracy continues across various dataset sizes. Notably, at 77k NTS, GWCFCM reaches an exceptional accuracy of 98,81 %, whereas the other models range between 73,44 % and 91,55 %.

The impact of higher accuracy, as exhibited by GWCFCM, is substantial in the realm of medical imaging for brain tumor diagnosis.

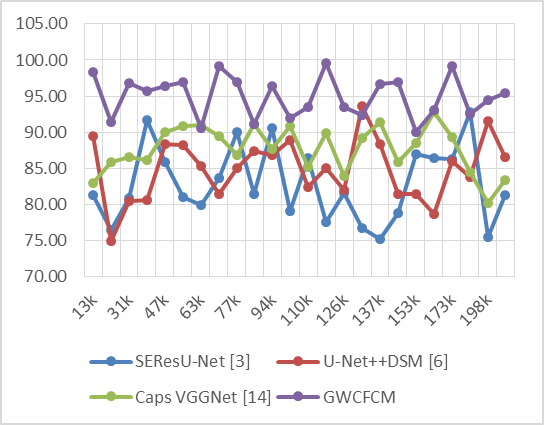

Overall, the GWCFCM model’s high accuracy in segmenting brain tumors in MRIs demonstrates its potential as an effective diagnostic tool. The recall of the proposed model is shown in figure 4:

Figure 4. Observed Recall for segmentation

In the simulated data, the GWCFCM model demonstrates consistently high recall rates. For example, at 13k NTS, GWCFCM achieves a recall of 98,32 %, significantly higher than the other models. This pattern of high recall with GWCFCM is evident throughout the dataset. Notably, at 70k NTS, GWCFCM reaches an exceptional recall of 99,14 %, far surpassing the other models. Clinically, the impact of high recall in brain tumor segmentation is profound. A high recall rate ensures that the segmentation model minimizes the number of missed tumor regions. This is particularly important in early-stage tumor detection, where identifying smaller or less obvious tumors can be challenging but critical for patient outcomes. Early detection of brain tumors significantly improves the chances of successful treatment and can greatly influence the treatment approach, potentially leading to less invasive and more targeted therapies. Similar computations of the delay required for the prediction process are shown in figure 5.

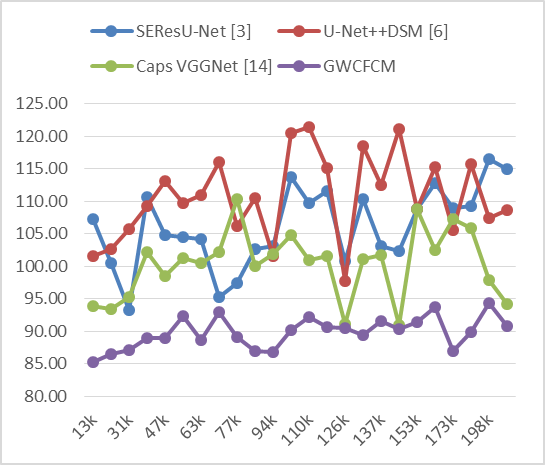

Figure 5. Observed Delay for segmentation

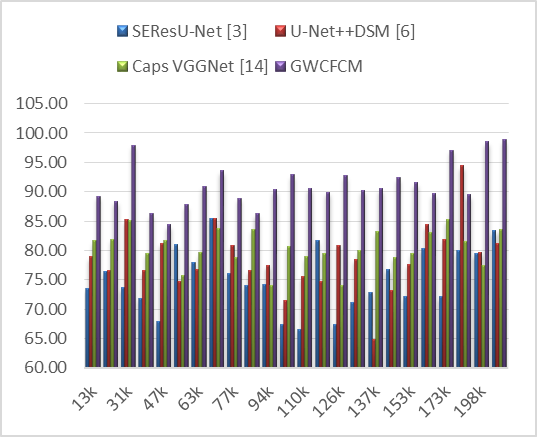

On the basis of the simulated data, the GWCFCM model consistently shows lower delay than the other models across different numbers of test image samples (NTS). For example, at 13k NTS, GWCFCM shows a delay of only 85,28 ms, significantly lower than SEResU-Net (107,21 ms), U-Net++DSM (101,64 ms) and Caps VGGNet (93,93 ms). In clinical practice, the impact of reduced segmentation delay is significant. Faster processing is essential in clinical environments where time is often critical, especially in acute care settings or with high patient volumes. A model like GWCFCM, with its lower delay, can facilitate quicker diagnosis and enable timely treatment decisions. This is particularly crucial in emergencies or with aggressive tumor types. Likewise, the specificity levels are shown in figure 6.

Figure 6. Observed Specificity for segmentation

From the simulated data, the GWCFCM model consistently demonstrates higher specificity across different numbers of test image samples (NTS) compared to other models. For example, at 13k NTS, GWCFCM achieves a specificity of 89,25 %, which is considerably higher than SEResU-Net (73,57 %), U-Net++DSM (78,92 %), and Caps VGGNet (81,62 %). This trend of GWCFCM exhibiting higher specificity is observed throughout the dataset, reaching as high as 98,88 % at 216k NTS.

The high specificity of GWCFCM can be linked to its hybrid algorithmic design. This innovative integration likely enhances the model’s capability to accurately distinguish non-tumor areas, reducing the likelihood of false positive detections.

Moreover, high specificity is vital for longitudinal studies and monitoring of patients with brain tumors. It ensures that changes observed in follow-up MRIs are more likely to be actual changes in the tumor’s size or activity, rather than artifacts of the imaging process.

CONCLUSIONS

To conclude, this work represents a significant advancement in medical imaging, specifically in the segmentation of brain tumors from MRI scans and the estimation of tumor size. The novel approach of combining Grey Wolf Optimization (GWO) and Cuckoo Search (CS) within a Fuzzy C-Means (FCM) framework has demonstrated remarkable improvements over traditional segmentation methods.

The performance metrics of the GWCFCM model, as evidenced by the observed results in precision, accuracy, recall, AUC, specificity, and processing delay, underscore its superiority in segmenting brain tumors with higher precision and accuracy, while minimizing false positives and negatives.

Clinically, the impacts of this work are profound. The GWCFCM model’s high performance in brain tumor segmentation paves the way for more accurate and reliable diagnoses, enabling early detection and precise treatment planning. This accuracy is paramount in neuro-oncology, where the delineation of tumor boundaries directly influences surgical approaches and treatment strategies. By accurately segmenting tumors, the GWCFCM model aids in preserving healthy brain tissue during surgical interventions, thereby enhancing patient outcomes and reducing postoperative complications.

Moreover, the reduced processing delay of the GWCFCM model, as low as 85,28 ms, demonstrates its potential for real-time application in clinical settings. This efficiency is crucial in high-patient-volume environments and acute care settings, where rapid decision-making can significantly impact patient outcomes.

In summary, the GWCFCM model sets a new benchmark in the field of medical imaging for brain tumor segmentation. Its integration of advanced optimization algorithms within a fuzzy framework represents a significant leap forward in the application of machine learning and artificial intelligence in healthcare. This study not only addresses the limitations of current segmentation techniques but also opens new avenues for research and development in medical image analysis, with potential applications extending beyond brain tumor segmentation to other areas of medical diagnostics and treatment planning process.

Future research can explore the integration of the GWCFCM model with emerging imaging technologies such as higher-resolution MRIs or PET scans. Ongoing research should focus on further optimizing the model’s algorithms to improve performance metrics, especially in datasets with varying quality or in cases with rare tumor types. Utilizing the GWCFCM model for longitudinal studies to monitor tumor progression over time in patients undergoing treatment could provide valuable insights into treatment efficacy and tumor behavior.

BIBLIOGRAPHIC REFERENCES

1. T. A. Soomro et al., “Image Segmentation for MR Brain Tumor Detection Using Machine Learning: A Review,” in IEEE Reviews in Biomedical Engineering, vol. 16, pp. 70-90, 2023, doi: 10.1109/RBME.2022.3185292.

2. Y. Zhuang, H. Liu, E. Song and C. -C. Hung, “A 3D Cross-Modality Feature Interaction Network With Volumetric Feature Alignment for Brain Tumor and Tissue Segmentation,” in IEEE Journal of Biomedical and Health Informatics, vol. 27, no. 1, pp. 75-86, Jan. 2023, doi: 10.1109/JBHI.2022.3214999.

3. C. Yan, J. Ding, H. Zhang, K. Tong, B. Hua and S. Shi, “SEResU-Net for Multimodal Brain Tumor Segmentation,” in IEEE Access, vol. 10, pp. 117033-117044, 2022, doi: 10.1109/ACCESS.2022.3214309.

4. J. Zhao et al., “Uncertainty-Aware Multi-Dimensional Mutual Learning for Brain and Brain Tumor Segmentation,” in IEEE Journal of Biomedical and Health Informatics, vol. 27, no. 9, pp. 4362-4372, Sept. 2023, doi: 10.1109/JBHI.2023.3274255.

5. R. Zaitoon and H. Syed, “RU-Net2+: A Deep Learning Algorithm for Accurate Brain Tumor Segmentation and Survival Rate Prediction,” in IEEE Access, vol. 11, pp. 118105-118123, 2023, doi: 10.1109/ACCESS.2023.3325294.

6. K. Wisaeng, “U-Net++DSM: Improved U-Net++ for Brain Tumor Segmentation With Deep Supervision Mechanism,” in IEEE Access, vol. 11, pp. 132268-132285, 2023, doi: 10.1109/ACCESS.2023.3331025.

7. Y. Ding et al., “MVFusFra: A Multi-View Dynamic Fusion Framework for Multimodal Brain Tumor Segmentation,” in IEEE Journal of Biomedical and Health Informatics, vol. 26, no. 4, pp. 1570-1581, April 2022, doi: 10.1109/JBHI.2021.3122328.

8. N. Ilyas, Y. Song, A. Raja and B. Lee, “Hybrid-DANet: An Encoder-Decoder Based Hybrid Weights Alignment With Multi-Dilated Attention Network for Automatic Brain Tumor Segmentation,” in IEEE Access, vol. 10, pp. 122658-122669, 2022, doi: 10.1109/ACCESS.2022.3222536.

9. H. Chen, J. An, B. Jiang, L. Xia, Y. Bai and Z. Gao, “WS-MTST: Weakly Supervised Multi-Label Brain Tumor Segmentation With Transformers,” in IEEE Journal of Biomedical and Health Informatics, vol. 27, no. 12, pp. 5914-5925, Dec. 2023, doi: 10.1109/JBHI.2023.3321602.

10. S. Alagarsamy, V. Govindaraj and S. A, “Automated Brain Tumor Segmentation for MR Brain Images Using Artificial Bee Colony Combined With Interval Type-II Fuzzy Technique,” in IEEE Transactions on Industrial Informatics, vol. 19, no. 11, pp. 11150-11159, Nov. 2023, doi: 10.1109/TII.2023.3244344.

11. T. Magadza and S. Viriri, “Brain Tumor Segmentation Using Partial Depthwise Separable Convolutions,” in IEEE Access, vol. 10, pp. 124206-124216, 2022, doi: 10.1109/ACCESS.2022.3223654.

12. M. A. Ottom, H. A. Rahman and I. D. Dinov, “Znet: Deep Learning Approach for 2D MRI Brain Tumor Segmentation,” in IEEE Journal of Translational Engineering in Health and Medicine, vol. 10, pp. 1-8, 2022, Art no. 1800508, doi: 10.1109/JTEHM.2022.3176737.

13. M. V. S. Ramprasad, M. Z. U. Rahman and M. D. Bayleyegn, “SBTC-Net: Secured Brain Tumor Segmentation and Classification Using Black Widow With Genetic Optimization in IoMT,” in IEEE Access, vol. 11, pp. 88193-88208, 2023, doi: 10.1109/ACCESS.2023.3304343.

14. Jabbar, S. Naseem, T. Mahmood, T. Saba, F. S. Alamri and A. Rehman, “Brain Tumor Detection and Multi-Grade Segmentation Through Hybrid Caps-VGGNet Model,” in IEEE Access, vol. 11, pp. 72518-72536, 2023, doi: 10.1109/ACCESS.2023.3289224.

15. M. Rahimpour et al., “Cross-Modal Distillation to Improve MRI-Based Brain Tumor Segmentation With Missing MRI Sequences,” in IEEE Transactions on Biomedical Engineering, vol. 69, no. 7, pp. 2153-2164, July 2022, doi: 10.1109/TBME.2021.3137561.

16. H. Yang, T. Zhou, Y. Zhou, Y. Zhang and H. Fu, “Flexible Fusion Network for Multi-Modal Brain Tumor Segmentation,” in IEEE Journal of Biomedical and Health Informatics, vol. 27, no. 7, pp. 3349-3359, July 2023, doi: 10.1109/JBHI.2023.3271808.

17. A. M. Chavez-Cano, “Artificial Intelligence Applied to Telemedicine: Opportunities for Healthcare Delivery in Rural Areas,” in LatIA, vol. 1, p. 3, 2023, doi: 10.62486/latia20233.

18. S. Rajendran et al., “Automated Segmentation of Brain Tumor MRI Images Using Deep Learning,” in IEEE Access, vol. 11, pp. 64758-64768, 2023, doi: 10.1109/ACCESS.2023.3288017.

19. S. M. A. H. Shah et al., “Classifying and Localizing Abnormalities in Brain MRI Using Channel Attention Based Semi-Bayesian Ensemble Voting Mechanism and Convolutional Auto-Encoder,” in IEEE Access, vol. 11, pp. 75528-75545, 2023, doi: 10.1109/ACCESS.2023.3294562.

20. B. Mallampati, A. Ishaq, F. Rustam, V. Kuthala, S. Alfarhood and I. Ashraf, “Brain Tumor Detection Using 3D-UNet Segmentation Features and Hybrid Machine Learning Model,” in IEEE Access, vol. 11, pp. 135020-135034, 2023, doi: 10.1109/ACCESS.2023.3337363.

21. T. Magadza and S. Viriri, “Efficient nnU-Net for Brain Tumor Segmentation,” in IEEE Access, vol. 11, pp. 126386-126397, 2023, doi: 10.1109/ACCESS.2023.3329517.

22. S. Solanki, U. P. Singh, S. S. Chouhan and S. Jain, “Brain Tumor Detection and Classification Using Intelligence Techniques: An Overview,” in IEEE Access, vol. 11, pp. 12870-12886, 2023, doi: 10.1109/ACCESS.2023.3242666.

23. Q. Hou, Y. Peng, Z. Wang, J. Wang and J. Jiang, “MFD-Net: Modality Fusion Diffractive Network for Segmentation of Multimodal Brain Tumor Image,” in IEEE Journal of Biomedical and Health Informatics, vol. 27, no. 12, pp. 5958-5969, Dec. 2023, doi: 10.1109/JBHI.2023.3318640.

24. Younis, Q. Li, M. Khalid, B. Clemence and M. J. Adamu, “Deep Learning Techniques for the Classification of Brain Tumor: A Comprehensive Survey,” in IEEE Access, vol. 11, pp. 113050-113063, 2023, doi: 10.1109/ACCESS.2023.3317796.

25. R. Zheng et al., “Automatic Liver Tumor Segmentation on Dynamic Contrast Enhanced MRI Using 4D Information: Deep Learning Model Based on 3D Convolution and Convolutional LSTM,” in IEEE Transactions on Medical Imaging, vol. 41, no. 10, pp. 2965-2976, Oct. 2022, doi: 10.1109/TMI.2022.3175461.

26. S. A. Jalalifar, H. Soliman, A. Sahgal and A. Sadeghi-Naini, “Automatic Assessment of Stereotactic Radiation Therapy Outcome in Brain Metastasis Using Longitudinal Segmentation on Serial MRI,” in IEEE Journal of Biomedical and Health Informatics, vol. 27, no. 6, pp. 2681-2692, June 2023, doi: 10.1109/JBHI.2023.3235304.

FINANCING

The authors did not receive financing for the development of this research.

CONFLICT OF INTEREST

The authors declare that there is no conflict of interest.

AUTHORSHIP CONTRIBUTION

Conceptualization: Ayesha Agrawal, Vinod Maan.

Data curation: Ayesha Agrawal, Vinod Maan.

Formal analysis: Ayesha Agrawal.

Funding acquisition: None.

Investigation: Ayesha Agrawal, Vinod Maan.

Methodology: Ayesha Agrawal.

Project administration: Ayesha Agrawal.

Resources: Ayesha Agrawal.

Software: Ayesha Agrawal.

Supervision: Vinod Maan.

Validation: Ayesha Agrawal, Vinod Maan.

Visualization: Ayesha Agrawal.

Writing - original draft: Ayesha Agrawal.

Writing - review and editing: Ayesha Agrawal.