doi: 10.56294/dm2024.364

ORIGINAL

Drones in Action: A Comprehensive Analysis of Drone-Based Monitoring Technologies

Drones en acción: Un análisis exhaustivo de las tecnologías de vigilancia con drones

Ayman Yafoz1 *

1Department of Information Systems, Faculty of Computing and Information Technology, King Abdulaziz University, Jeddah 22254, Saudi Arabia.

Cite as: Yafoz A. Drones in Action: A Comprehensive Analysis of Drone-Based Monitoring Technologies. Data and Metadata. 2024; 3:.364. https://doi.org/10.56294/dm2024.364

Submitted: 24-01-2024 Revised: 19-04-2024 Accepted: 06-09-2024 Published: 07-09-2024

Editor: Adrián Alejandro Vitón-Castillo

Corresponding author: Ayman Yafoz *

ABSTRACT

Unmanned aerial vehicles (UAVs), commonly referred to as drones, are extensively employed in various real-time applications, including remote sensing, disaster management and recovery, logistics, military operations, search and rescue, law enforcement, and crowd monitoring and control, owing to their affordability, rapid processing capabilities, and high-resolution imagery. Additionally, drones mitigate risks associated with terrorism, disease spread, temperature fluctuations, crop pests, and criminal activities. Consequently, this paper thoroughly analyzes UAV-based surveillance systems, exploring the opportunities, challenges, techniques, and future trends of drone technology. It covers common image preprocessing methods for drones and highlights notable one- and two-stage deep learning algorithms used for object detection in drone-captured images. The paper also offers a valuable compilation of online datasets containing drone-acquired photographs for researchers. Furthermore, it compares recent UAV-based imaging applications, detailing their purposes, descriptions, findings, and limitations. Lastly, the paper addresses potential future research directions and challenges related to drone usage.

Keywords: Unmanned Aerial Vehicles (UAVs); Applications; Image Processing; Datasets; Trends.

RESUMEN

Los vehículos aéreos no tripulados (UAV), comúnmente denominados drones, se emplean ampliamente en diversas aplicaciones en tiempo real, como la teledetección, la gestión y recuperación de catástrofes, la logística, las operaciones militares, la búsqueda y rescate, el cumplimiento de la ley y la vigilancia y control de multitudes, debido a su asequibilidad, su rápida capacidad de procesamiento y sus imágenes de alta resolución. Además, los drones mitigan los riesgos asociados al terrorismo, la propagación de enfermedades, las fluctuaciones de temperatura, las plagas en los cultivos y las actividades delictivas. En consecuencia, este documento analiza a fondo los sistemas de vigilancia basados en UAV, explorando las oportunidades, retos, técnicas y tendencias futuras de la tecnología de los drones. Cubre métodos comunes de preprocesamiento de imágenes para drones y destaca notables algoritmos de aprendizaje profundo de una y dos etapas utilizados para la detección de objetos en imágenes capturadas por drones. El artículo también ofrece a los investigadores una valiosa recopilación de conjuntos de datos en línea que contienen fotografías captadas por drones. Además, se comparan aplicaciones recientes de captura de imágenes basadas en UAV, detallando sus propósitos, descripciones, hallazgos y limitaciones. Por último, el artículo aborda posibles direcciones de investigación futuras y retos relacionados con el uso de drones.

Palabras clave: Vehículos Aéreos no Tripulados (UAV); Aplicaciones; Procesamiento de Imágenes; Conjuntos de Datos; Tendencias.

INTRODUCTION

Unmanned aerial vehicles (UAVs), commonly known as drones,(1) have gained significant popularity in real-time application systems because of their affordability, enhanced detection and tracking efficiency, mobility, and ease of deployment. They have matured into versatile instruments, with uses ranging from remote sensing and disaster management to law enforcement and crowd control. Their low cost, rapid processing capabilities, and high-quality images all contribute to their widespread use.

In recent years, UAVs have been utilized for crowd analysis, complementing static monitoring cameras.(2) While drones are predominantly used for military purposes, their use in video capture is increasing as they provide a cost-effective and less complex alternative to manned aircraft, satellites, and helicopters. The benefits of drones include the following:(3)

1. Drones can be equipped with essential payloads and sensors to gather additional visual data and metrics.

2. They are capable of delivering real-time data to model crowd dynamics, supported by powerful onboard processing components for estimating crowd behavior.

3. They greatly reduce the operational and maintenance expenses linked to conventional monitoring systems.

4. Drones decrease the need for labor, intervention, and resources.

5. They expand the area that can be monitored.

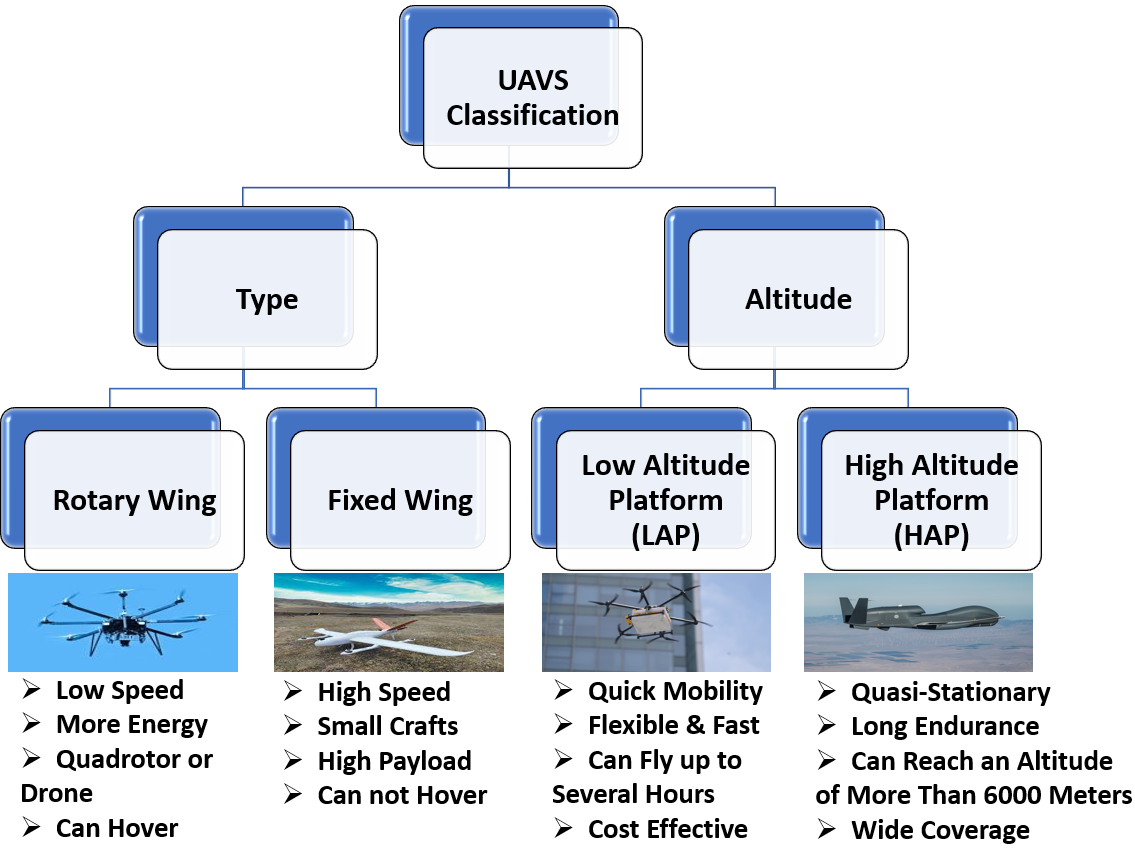

The benefits of employing surveillance drones are evident in scenarios requiring aerial and mobile monitoring to enhance location access and visibility. These scenarios include pandemic management, mining operations, maritime environments, tsunami response, agricultural areas for tasks like detecting plant diseases,(4) disaster situations, and rescue operations in remote or abandoned locations.(3) There are four UAV types: High Altitude Platform (HAP), Low Altitude Platform (LAP), fixed-wing, and rotary-wing, as shown in figure 1. Figure 2, in turn, shows the taxonomy of UAV applications.(5)

This paper thoroughly examines unmanned aerial vehicle (UAV) surveillance systems. It investigates UAV applications in remote sensing, logistics, military operations, search and rescue, law enforcement, and crowd management. It also discusses publicly available drone datasets and evaluates image preprocessing and processing techniques for UAV-based images. Finally, it evaluates current contributions to identify knowledge gaps and suggest promising future research directions in the field of UAV surveillance.

The primary goals of this paper are as follows:

· To examine the use of UAVs across different monitoring domains.

· To analyze various deep learning models for processing images captured by UAV cameras.

· To evaluate several studies in this field.

· To highlight future advancements in UAV-based monitoring systems.

Figure 1. Different Types of UAVs

The rest of this paper is organized as follows: Section 2 reviews UAVs and their applications in various fields. Section 3 examines UAV datasets and the methods used to analyze and process images captured by UAVs. Section 4 provides a critical review of related studies in this domain. Section 5 explores future trends in UAV monitoring systems. Finally, Section 6 concludes the paper by summarizing its findings and offering examples of future work in the field.

Applications Of Unmanned Aerial Vehicles (UAVs)

UAV imaging has been widely used in a variety of real-time application systems, including the following:

1. Detection of cracks.

2. Forest fire surveillance.

3. Detection of crowds.

4. Agriculture surveillance.

5. Video monitoring.

6. Wireless communications.

UAVs are particularly beneficial for traffic monitoring and control, environmental monitoring, crowd detection, remote sensing, and disaster recovery. Because they are less expensive, cover extensive areas, deliver regular updates, and capture pictures quickly, UAVs are crucial in applications for monitoring forest fires.(6) Typically, a multi-UAV system for tracking forest fires aids in locating latent explosions and trigger events.

A. Remote Sensing

In general, remote sensing systems(7) are divided into two categories: active and passive. In an active remote sensing system, the sensors generate the energy needed to detect the target objects. This type of system, which includes radar, sounders, LiDAR, laser altimeters, and ranging instruments, is the more commonly used in remote application systems. The sensors of a passive remote sensing system, on the other hand, pick up the radiation that the target object emits. Passive instruments include the radiometer, hyperspectral radiometer, spectrometer, and accelerometer. In remote sensing applications, UAVs are primarily employed to collect data from sensors(8) and send the collected data to base stations. UAVs usually include built-in sensors for monitoring the environment.

Figure 3 illustrates the classification of UAVs used in remote sensing applications. Because of the wide variety of spectral signatures, assessing the physical characteristics of metropolitan areas for mapping is typically one of the most difficult tasks. Fluctuating atmospheric conditions and temporal gaps are also major causes of erroneous analysis and mapping. As a result, UAVs are a promising choice for remote sensing applications since they provide valuable data for urban studies. UAV monitoring systems can provide the following data: spatial location and extent, census statistics, land cover data, and transportation data. Cost-effectiveness and a high revisit rate are the main benefits of using UAV imagery instead of satellite imagery.

However, UAVs with sensors may face a number of restrictions, including:(9)

1. Expensive sensor acquisition and maintenance.

2. The restricted range (LiDAR sensors, for example, generally have a maximum range of roughly 100 m).

3. Sensitivity to the UAV’s motions and vibrations.

4. A more limited field of view than other remote sensing systems (for instance, visual cameras).

5. The difficulties of thermal imaging sensors in determining the origin of heat emission.

6. The inability to identify features or objects that are out of the visible spectrum.

7. The variations in illumination, which might produce variances in the visual appearance of the photos.

8. The sensitivity to glare and reflections, which might impact photo accuracy.

9. The sensitivity to signal interference, which could reduce the reliability and accuracy of navigation and positioning data.

10. There may be restrictions on the usage of UAVs equipped with sensors in rural or distant locations due to variables such as the communication link’s quality with the base station or the reference station’s availability, which may further raise the cost or delay the processing of the pictures.

B. Incorporating Drone Technology into Logistics

Rapid improvements in UAV technologies have made UAVs a preferred solution for many logistic operations. A report issued by Markets and Markets stated that the market for small UAVs was valued at USD 13,40 billion in 2018 and is expected to grow to USD 40,31 billion in 2025, with a compound annual growth rate of 17,04 percent. One of the most important factors expected to boost the small UAV market is the growing procurement of small military-purpose UAVs by armies around the world. Small UAVs are increasingly being used in many logistic operations, for instance, product delivery, precision agriculture, monitoring, mapping, surveying, transportation, aerial remote sensing, and crowd movement organization, which is fueling the market expansion and usage of UAVs in logistic operations.(10)
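The growth figures quoted above can be cross-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The following is a minimal sketch; the function name `cagr` is illustrative, the decimal commas of the text are written as decimal points, and the seven-year span (2018 to 2025) is read from the paragraph above.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.17 means 17 %)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Small-UAV market figures quoted in the text, in USD billions.
small_uav_growth = cagr(13.40, 40.31, years=2025 - 2018)
print(f"Small-UAV market CAGR 2018-2025: {small_uav_growth:.2%}")
```

Under these assumptions the computed rate agrees with the reported 17,04 percent to within rounding.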

Moreover, large companies such as DHL, Google, Amazon, Facebook, and Federal Express are researching the capabilities of UAVs in logistics. UAVs offer a revolutionary approach to enhancing logistic responsiveness. Owing to their autonomous operation, flying capabilities, small size, and speed, UAVs used for monitoring and delivery help organize operations, improve mobility, reduce supply chain costs, time, effort, labor, and human intervention, reach remote and congested areas, and optimize and accelerate logistic tasks (such as route planning, inventory management, warehousing, and transportation).(11) In addition, because UAVs are electric vehicles, they contribute to environmental sustainability.(12) However, the benefits of applying UAVs in logistic operations are limited by the number of UAVs deployed and by their endurance, weight, payload, and battery capacities. Furthermore, regulatory and legal frameworks, public acceptance and awareness, and the need for skilled employees to maintain and operate UAVs are among the challenges that could hinder the implementation of UAVs in logistic operations. These challenges need further investigation in future studies.(11)

C. Military UAVs

The defense industry has the largest market share for UAVs. According to the 2022 Military Gliders and Drones Global Market Report, the military UAVs and gliders market is expected to grow from $29,98 billion in 2021 to $35,02 billion in 2022, at a compound annual growth rate of 16,8 percent. The market is expected to reach $61,80 billion in 2026 at a compound annual growth rate of 15,3 percent. A military UAV is used for reconnaissance, surveillance, intelligence, and target acquisition and can carry aircraft ordnance such as anti-tank guided missiles, bombs, or missiles for drone strikes. Asia-Pacific dominated the market for UAVs and military gliders in 2021. Western Europe is expected to grow the fastest during the forecast period.(13)

Leading companies in the industry are focusing on developing and manufacturing UAVs that use artificial intelligence technologies. For example, the enterprises providing military UAVs with AI technologies include Neurala (which provides Neurala Brain, an artificial intelligence system that allows military UAVs to perform reconnaissance and patrol tasks), Lockheed Martin (which provides Desert Hawk III, a UAV with operator-training functionality), AeroVironment (which provides the Raven series, the most widely used military UAVs), and Shield AI (which provides Nova, an autonomous indoor navigation UAV).(13)

Nowadays, the primary tasks of UAVs in the military are reconnaissance, information gathering, target acquisition, and surveillance. These tasks require connecting several sensors with varying functionalities in order to generate a spherical view of the battlefield that field commanders can use in real time. UAVs play a significant role in improving the effectiveness of these tasks because they are extremely flexible platforms capable of carrying numerous advanced sensors. UAVs increasingly dominate modern armed conflicts, particularly those requiring strikes against irregular armies or guerrilla forces.(14)

However, because the payloads of nano or micro UAVs are limited, they can only carry light sensors for particular tasks. Additionally, the energy capacity and operational range of UAVs are constrained, and their mechanical lifespan is uncertain. Moreover, physical barriers, such as skyscrapers in urban or semi-urban regions, can further restrict the area that can be exploited. UAV swarms can tackle some of these problems, in addition to offering expanded, highly complex capabilities that a single UAV cannot achieve.(14)

However, the absence of appropriate communication protocols that enable dependable and secure coordination and communications between UAVs and other air entities or mobile ground entities is the primary impediment to the use of UAV swarms in civil or military missions.(14)

D. Search and Rescue (SAR) Systems

Because they provide warnings and alerts during emergencies such as floods, terrorist attacks, transportation incidents, earthquakes, and hurricanes, UAVs receive major attention in both the public and civil sectors. In general, SAR missions involving traditional aerial equipment, such as helicopters and aircraft, are more expensive, and their crews need proper education and training to conduct SAR missions effectively. UAV-based SAR systems, in contrast, reduce the risks to people as well as the time, money, and resources required. Image/video transmission and target object detection are the two main uses of single and multi-UAV systems. To obtain high-resolution images and videos for disaster management and recovery, these UAVs include built-in onboard sensors. The operational flow of the multi-UAV systems used in SAR operations to find missing people is depicted in figure 4.(15)

Figure 2. Taxonomy of UAV Applications

E. Law Enforcement Authorities

To reduce risk to the public and the subject while maximizing officer safety, intelligence on the location and the suspect’s movements must be gathered. When the crime scene needs to be surveyed remotely for safety or tactical purposes, aerial imaging from UAVs can help locate the suspect and then assess the risk level. UAV data and imagery can be used to piece together a variety of crucial information during an operation, for instance, detecting the number of civilians in a specific region, pinpointing the suspect’s location, ascertaining whether the person is armed, or even spotting a nearby car or escape route. These insights improve the efficiency of tactical planning and deployment.(16)

The Drone Wars Company sent requests to 48 police units in the UK in 2020. Of the 42 units that answered, 40 confirmed they were using UAVs. UK police services were using at least 288 UAVs as of 2020, and over 5,500 UAV uses by UK police occurred in that year. The Guardian reported in 2021 that police in England employed UAVs to keep an eye on protests. According to police authorities in Staffordshire, Surrey, Gloucestershire, Cleveland, and the West Midlands, UAVs were used to monitor Black Lives Matter protests. According to the Mayor of London in 2021, the Metropolitan Police Service has used UAVs to provide aerial assistance for pre-planned tasks, provide live footage of operational deployments, survey properties, and cover crime scenes to aid command decision-making, thus supporting a broader policing strategy.(17)

However, present artificial intelligence algorithms are pushed to their limits at altitudes exceeding 30 meters. Modern algorithms lose accuracy at high altitudes, necessitating the development of new approaches. Furthermore, most of the photos in several well-known open-access labeled datasets, such as the Shanghai dataset, were captured from the perspective of a CCTV camera or from the ground, at lower heights than a typical UAV image. To adequately train machine learning algorithms, photos used for UAV studies should be captured from an altitude similar to that used by police UAVs, with a zenithal viewing angle.(18)

Furthermore, detecting objects smaller than 50 × 50 pixels is still difficult. The issues identified include a lack of context information, insufficient data gathered by feature detection layers, too few positive training instances, and an imbalance between the background and small, sparsely distributed objects, with a ratio between 100:1 and 1000:1. Detecting objects in densely crowded photos is far more challenging. When a detection method is applied to these photos, the bounding box of one person overlaps with the bounding boxes of neighbors, which makes calculating the loss function more difficult.(18) Therefore, to increase the effectiveness of deploying UAVs in law enforcement operations, future research should address these challenges.
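The degree to which two bounding boxes overlap is commonly quantified with intersection over union (IoU), which many detection losses and matching rules build on. The sketch below is illustrative only: the `Box` layout (x_min, y_min, x_max, y_max) and the example coordinates are assumptions, not values from the cited study.

```python
from typing import Tuple

# Assumed box layout: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes, in [0, 1]."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two hypothetical 10 x 10 px person boxes standing side by side in a crowd.
person_a: Box = (0.0, 0.0, 10.0, 10.0)
person_b: Box = (5.0, 0.0, 15.0, 10.0)
overlap = iou(person_a, person_b)
print(f"IoU between neighbors: {overlap:.3f}")
```

In dense crowds many neighboring boxes have a high IoU with each other, so a predicted box can plausibly match several ground-truth boxes, which is one way the overlap complicates the loss.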

F. Crowd Monitoring and Controlling

Surveillance systems, which incorporate sensing, alerting, and action components, are used in crowd management and monitoring to track and regulate the behavior of crowds. Crowd monitoring includes crowd density estimation, crowd detection, crowd behavior analysis, and tracking. Crowd monitoring and control are important because they help save lives, reduce casualties, improve event organization, ease crowd movement, assist and reach people in need, limit stampede damage, improve the detection of suspicious activities, and lower the number of missing people. Because it helps to prevent the tragedies brought on by an abnormal crowd, crowd monitoring is typically seen as a crucial topic in the field of public safety. Traditional crowd density estimation models are built with regression algorithms, machine learning-based detection algorithms, and other estimation models. Many video surveillance, real-time tracking, and security systems rely on crowd-tracking models.(3) The most widely used technique for determining the motion of crowds is optical flow, which estimates pixel motion across the entire image.(19) In the event of a pandemic, drones might also be used for illness detection, broadcasting announcements, spraying sanitizer, and transporting medical supplies.(20)

However, conventional crowd analysis approaches rely on visual inputs from fixed-location or static surveillance cameras recording videos or photos, resulting in limited coverage and a static viewing angle. Unless a network of many monitoring devices is set up, static visual inputs cannot track moving crowds continuously.(3) UAVs, on the other hand, can fill the gap left by static surveillance cameras. Their mobility lets them overcome the shortcomings of static surveillance systems by extending coverage, reducing costs, and varying imaging angles.(21) UAVs provide real-time data for crowd dynamics modeling by using onboard technologies, such as LiDAR sensors, real-time processing units, and moving cameras.(22) UAVs are being used more frequently in crowd monitoring and surveillance applications, where machine learning and deep learning techniques are implemented to improve object detection and image processing.

Nonetheless, the growing use of drone wireless systems reveals new cyber challenges, such as data reconciliation concerns, eavesdropping, forgery, and privacy, making crowd control more difficult. When some malicious adversary gains access to surveillance-transmitted data, it could interrupt the whole surveillance operation. Therefore, any authorized user should be able to access data gathered by a particular hovering UAV through the shared authentication procedure using an agreed-upon session key. Hence, it is crucial to create a lightweight and secure agreement on the authentication key for the Internet of UAVs’ architecture.(23)

Furthermore, research on light variations in high-density images, crowd occlusion, and dense crowd management is still needed.(24) Several object tracking concerns, such as insufficient annotated training datasets, varying views, and non-stationary cameras, might restrict crowd monitoring.(25) However, these limitations could be mitigated by using modern drones with high-quality cameras (such as 4K cameras), a millimeter-wave radar,(26) and real-time crowd detection that relies on well-trained deep learning object detectors (such as Faster R-CNN and YOLO).(27) Furthermore, advanced techniques such as density estimation with Gaussian Mixture Models(28) or kernel density estimation(29) applied to point clouds captured by airborne LiDAR sensors, motion tracking using Kalman filters,(30) and optical flow calculations(31) could resolve some of the limitations in photos and videos taken by UAVs used to monitor and control crowds.
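To make the Kalman filter idea concrete, the following is a minimal sketch of a one-dimensional constant-velocity filter that smooths noisy position readings of a tracked target. It is not the method of the cited work: the class name, the noise variances, the initial covariance, and the synthetic readings are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ConstantVelocityKalman1D:
    """Tracks position and velocity along one axis from noisy positions."""
    dt: float = 1.0            # time between frames (assumed constant)
    process_var: float = 1e-3  # assumed process noise variance
    meas_var: float = 1.0      # assumed measurement noise variance
    pos: float = 0.0
    vel: float = 0.0
    # 2x2 state covariance, started large to express an uninformed prior.
    P: List[List[float]] = field(
        default_factory=lambda: [[100.0, 0.0], [0.0, 100.0]]
    )

    def predict(self) -> None:
        """Propagate one step: x = F x, P = F P F^T + Q, F = [[1, dt], [0, 1]]."""
        dt, P = self.dt, self.P
        self.pos += self.vel * dt
        p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1]
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1]
        self.P = [[p00 + self.process_var, p01],
                  [p10, p11 + self.process_var]]

    def update(self, z: float) -> None:
        """Correct the prediction with a measured position z (H = [1, 0])."""
        P = self.P
        innovation = z - self.pos
        s = P[0][0] + self.meas_var          # innovation variance
        k0, k1 = P[0][0] / s, P[1][0] / s    # Kalman gain
        self.pos += k0 * innovation
        self.vel += k1 * innovation
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]


def track(measurements: List[float]) -> Tuple[float, float]:
    """Run predict/update over all readings; return final (pos, vel)."""
    kf = ConstantVelocityKalman1D()
    for z in measurements:
        kf.predict()
        kf.update(z)
    return kf.pos, kf.vel


# Synthetic target moving 2 px per frame with alternating +/-0.5 px jitter.
readings = [2.0 * t + (0.5 if t % 2 == 0 else -0.5) for t in range(30)]
final_pos, final_vel = track(readings)
print(f"position ~ {final_pos:.1f} px, velocity ~ {final_vel:.2f} px/frame")
```

A real tracker would typically use a two-dimensional state per target and associate detections with tracks before each update; this sketch only shows the predict and update steps.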

Datasets, Image Preprocessing and Processing

Training models and obtaining reliable test results once they are deployed requires specialized datasets: collections of videos and/or photographs that have been properly labeled and curated with the aid of specialists in the discipline.(32) During the image processing phase, digital images are altered to extract information or improve the photos. This can involve modifying an image’s brightness, contrast, or color, as well as resizing or cropping it. Image analysis is the technique of extracting important information from an image, which could entail detecting movement, gauging distances, or recognizing objects.(33,34)

Object identification is one of the most often used image processing and analysis techniques for UAV cameras. With the aid of machine and deep learning algorithms, this technology can recognize people, buildings, and automobiles among other items in an image. This can be used for monitoring or to spot potential dangers. In motion detection, algorithms are used to detect motion in photos, such as an automobile driving or a person walking. This can be used to monitor traffic patterns or for security concerns.(33)

A growing number of real-time crowd detection systems use UAV image processing techniques to identify target objects. Single and multi-UAV object detection systems apply conventional imaging methods, including preprocessing, feature extraction, and classification.(35) These systems use vision and thermal cameras to identify missing people, locating them in aerial photographs of the specified objects. Figure 5 illustrates the main flow of the UAV image processing system, which comprises the following modules:

· Frame Capturing - Video or image frames are captured from the UAV aerial footage.

· Preprocessing - To improve the quality of the images, filtering techniques such as median, Gaussian, and adaptive filters are applied at this step.

· Feature extraction - The features used to train the classifier have a significant impact on the detection performance.

· Classification - Typically, the target object is detected using machine learning/deep learning classification approaches based on the features extracted from the UAV photos.
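The four modules above can be sketched end to end on a toy grayscale frame. This is a simplified illustration, not the system in figure 5: the frame is a hand-written 5 × 5 grid, preprocessing is a 3 × 3 median filter with replicated borders, and the feature set and threshold classifier (`extract_features`, `classify`) are hypothetical stand-ins for a trained model.

```python
from statistics import median
from typing import List

Image = List[List[int]]  # grayscale frame, one int (0-255) per pixel


def median_filter_3x3(img: Image) -> Image:
    """Preprocessing: 3x3 median filter, borders handled by replication."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            ]
            out[y][x] = int(median(window))
    return out


def extract_features(img: Image) -> List[float]:
    """Feature extraction: mean brightness and fraction of bright pixels."""
    pixels = [p for row in img for p in row]
    mean_brightness = sum(pixels) / len(pixels)
    bright_fraction = sum(p > 128 for p in pixels) / len(pixels)
    return [mean_brightness, bright_fraction]


def classify(features: List[float], threshold: float = 0.2) -> str:
    """Classification: hypothetical rule on the bright-pixel fraction."""
    return "target" if features[1] >= threshold else "background"


# Frame capturing: a dark 5x5 frame with one impulse-noise pixel at the center.
frame: Image = [[10] * 5 for _ in range(5)]
frame[2][2] = 255

filtered = median_filter_3x3(frame)
label = classify(extract_features(filtered))
print(filtered[2][2], label)
```

The median filter removes the isolated bright pixel before features are computed, which is why preprocessing is placed ahead of feature extraction in the flow.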

A. Datasets

Applying supervised learning to visual-based navigation for UAVs requires trustworthy datasets with multi-task labels, such as regression and classification labels. The available public datasets, however, have some limitations.(36)

To identify and segment cracks in hydraulic concrete structures, the researchers in (37) developed a Deeplab V3+ network with an adaptive attention mechanism network and the Xception backbone. To build the crack dataset, 5000 crack photos were gathered from various types of concrete hydraulic structures. The proposed technique often identified fewer fractures than the other deep learning-based methods it was compared with, which is more in accordance with the actual crack distribution. The experimental findings demonstrate that the proposed strategy can achieve fairly accurate crack detection, with an F1 score of 91,264 on the test set.
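For readers less familiar with the metric, the F1 score is the harmonic mean of precision and recall. The sketch below shows the calculation from detection counts; the counts used are invented for illustration and are not taken from the cited study.

```python
def f1_score(true_pos: int, false_pos: int, false_neg: int) -> float:
    """Harmonic mean of precision and recall, as a fraction in [0, 1]."""
    predicted = true_pos + false_pos
    actual = true_pos + false_neg
    precision = true_pos / predicted if predicted else 0.0
    recall = true_pos / actual if actual else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts: 90 correct crack detections, 10 false alarms, 10 misses.
example_f1 = f1_score(true_pos=90, false_pos=10, false_neg=10)
print(f"F1 = {example_f1:.3f}")
```

Because it penalizes both false alarms and misses, F1 is a common choice when the positive class (here, crack pixels or regions) is rare.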

In (38), researchers developed a single-frame infrared drone detection dataset (SIDD) and annotated the dataset’s infrared drone photos at the pixel level. The SIDD dataset includes 4737 photos of 640 × 512 pixels: 713 photos of sea scenes, 1093 photos of city scenes, 2151 photos of mountain landscapes, and 780 photos of sky scenes. Eight prevalent segmentation detection algorithms (BlendMask, YOLOv5, CondInst, Mask R-CNN, YOLACT++, SOLOv2, BoxInst, and YOLOv7) and the proposed IRSDD-YOLOv5 method were compared in various experiments on the SIDD dataset.

Figure 3. UAV Remote Sensing Systems

Figure 4. SAR Operations

Figure 5. A UAV-Based Target Object-Detecting System

The results showed that the proposed IRSDD-YOLOv5 method outperformed these segmentation detection algorithms. In the sea and mountain scenes, IRSDD-YOLOv5 obtained 93,4 % and 79,8 %, respectively, which represent gains of 4 % and 3,8 % over YOLOv5.

The researchers in (39) created a drone benchmark dataset by manually annotating object bounding boxes for 2860 drone photographs. They ran a series of tests on this dataset to assess a drone detection network with a tiny iterative backbone, called TIB-Net, which is built on an iterative architecture that combines cyclic pathways with a spatial attention module. The findings show that their approach, with a model size of 697 KB, obtained a mean average precision of 89,2 %, higher than that of most of the detection methods compared in the study (Faster RCNN, Cascade RCNN, YOLOv3, YOLOv4, YOLOv5, and EXTD).

In addition, table 1 displays the datasets that are available online and include images captured using a drone-based camera.

B. Image Preprocessing

Images need to be preprocessed before they can be used for model training and inference. Preprocessing covers changes to orientation, color, and size, among other modifications. Enhancing image quality through preprocessing enables more efficient analysis: it strengthens the attributes that are crucial for the application and removes undesired distortions. These attributes may vary with the intended use. Both the results of image analysis and the quality of feature extraction may benefit from image preprocessing.(40)

The curved flight trajectory of UAVs makes it challenging to acquire and arrange data points effectively, as there is limited horizontal overlap between successive photographs. This issue significantly affects the accuracy of aerial triangulation and calls for novel solutions. Moreover, irregular gray levels hamper the alignment of consecutive images, which lowers the accuracy of further image processing. Another concern with UAV photography is the large volume of data and photos captured. Small image frames also make finding a solution more difficult and demanding.(41) As a result, the image preprocessing of multispectral sensors on UAVs generally involves five fundamental operations to increase image quality and accuracy,(42) which are:

1. Noise correction: to improve the overall image quality, noise correction aims to eliminate systematic flaws found in multispectral sensors.

2. Vignetting correction: the term “vignetting” describes the spatially dependent reduction in light intensity away from the center of a picture. The goal of vignetting correction is to minimize this loss of brightness throughout the image and maintain homogeneity.

3. Lens distortion correction: lens distortion is a result of misalignment between the detector plane and the lens as well as changes in magnification across the lens surface. Any geometric distortions in the collected photos can be corrected by correcting lens distortion.

4. Band registration: to achieve spatial consistency between several spectral bands, band registration must be performed. The pictures from different bands are aligned throughout this procedure, allowing for precise comparison and analysis.

5. Radiometric correction: radiometric correction is essential for transforming the digital numbers that sensors record into useful spectral reflectance values.
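As one illustration of the last operation, radiometric correction is often approximated with a linear mapping from digital numbers (DN) to reflectance, calibrated on reference panels of known reflectance (the empirical line method). The sketch below uses that method as a stand-in; the source does not state which method is applied, and the panel values and function names are assumptions.

```python
from typing import List, Tuple

Band = List[List[float]]  # one spectral band, raw digital numbers per pixel


def fit_empirical_line(
    dn_dark: float, refl_dark: float, dn_bright: float, refl_bright: float
) -> Tuple[float, float]:
    """Fit reflectance = gain * DN + offset from two calibration panels."""
    if dn_bright == dn_dark:
        raise ValueError("calibration panels must have different DN values")
    gain = (refl_bright - refl_dark) / (dn_bright - dn_dark)
    offset = refl_dark - gain * dn_dark
    return gain, offset


def dn_to_reflectance(band: Band, gain: float, offset: float) -> Band:
    """Apply the linear model per pixel, clamping results to [0, 1]."""
    return [
        [min(1.0, max(0.0, gain * dn + offset)) for dn in row] for row in band
    ]


# Hypothetical panels: DN 500 at 5 % reflectance, DN 3000 at 55 % reflectance.
gain, offset = fit_empirical_line(500.0, 0.05, 3000.0, 0.55)
raw_band: Band = [[500.0, 1750.0], [3000.0, 100.0]]
reflectance = dn_to_reflectance(raw_band, gain, offset)
print(reflectance)
```

In practice the fit would be done per band and usually with more than two panels, and the other four operations (noise, vignetting, lens distortion, band registration) would be applied before this step.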

Moreover, many image preprocessing techniques can be applied to improve image quality and pixel intensity, to support analysis, restoration, compression, and reconstruction, and to reduce distortion, eliminate noise, remove unwanted or irrelevant elements, and filter the images. Some of these techniques are grouped into categories and illustrated in figure 6.(40,43,44,45,46,47)

C. Image Processing

With the fast advancement of image processing methods, numerous researchers have studied person identification and attempted to automate it with biometric identification techniques such as fingerprint recognition and facial recognition (FR). FR is one of the most efficient approaches compared to other biometric measurements since it can detect and verify numerous identities at the same time using a less expensive sensor, the camera. FR is primarily based on matching a camera-captured image of a person to the most similar image kept in a prepared database. To accomplish accurate matching, FR requires precise face detection as well as effective face analysis and transformation. These processes may encounter difficulties that affect identification accuracy, such as variable head pose, lighting, occlusions, and facial expressions. These difficulties are exacerbated by crowded settings and cluttered backgrounds.(48)

Computer vision (CV) and machine learning (ML) approaches provide reliable solutions for facial identification and representation. For instance, the Viola-Jones algorithm(49) detects human faces using a cascade of classifiers built on Haar-like features (a classical object detection approach (50)) or other engineered features. In addition, feature engineering approaches such as the discrete wavelet transform (DWT), principal component analysis (PCA), the discrete cosine transform (DCT), and eigenfaces have been extensively employed to encode facial characteristics for recognition. These techniques, however, are likely insufficient for extracting deep hierarchical representations from high-dimensional data. Deep learning models (such as CNNs and YOLO), by contrast, can learn high-level representations directly from raw images through successive representation layers. As a result, they have performed well in tests involving recognition and unconstrained face detection.(49)
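To make the idea of a Haar-like feature concrete, the following sketch evaluates a single two-rectangle feature using an integral image (summed-area table), the data structure that lets Viola-Jones-style detectors compute rectangle sums in constant time. It illustrates feature evaluation only, not the boosted classifier cascade; the function names and the toy 4×4 image are assumptions made for illustration.

```python
def integral_image(img):
    """Return a summed-area table with one extra row and column of zeros."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of pixels in the rectangle with top-left (x, y), width w, height h."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def haar_two_rect_horizontal(ii, x, y, w, h):
    """Two-rectangle feature: sum of the left half minus sum of the right half."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


# Toy image: bright left half, dark right half (a vertical edge).
image = [
    [9, 9, 1, 1],
    [9, 9, 1, 1],
    [9, 9, 1, 1],
    [9, 9, 1, 1],
]
ii = integral_image(image)
feature = haar_two_rect_horizontal(ii, 0, 0, 4, 4)  # 72 - 8 = 64
```

A large absolute feature value signals a strong intensity contrast between the two halves, which is the kind of cue a cascade stage thresholds on.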

To sustain the success of deep facial recognition, the training set must be meticulously prepared with several samples for each identity that cover varied poses, lighting conditions, and occlusions, so that the learned deep features remain invariant to these variations. Besides being accurate, deep learning models promise to satisfy real-time demands. Deep networks used for face detection, such as Faster Region-Based Convolutional Neural Networks (Faster R-CNN) and You Only Look Once (YOLO), can process video frames in real time.

In a recent publication,(51) YOLOv7, trained on the MS COCO dataset, surpassed all well-known object detectors in both accuracy and speed in the range of 5 to 160 frames per second (FPS). Its average precision (AP) of 56,8 % was the highest among all well-known real-time object detectors running at 30 FPS or higher on a V100 GPU. Moreover, the YOLOv7-E6 object detector (56 FPS on V100, 55,9 % AP) outperformed both the convolution-based detector ConvNeXt-XL Cascade-Mask R-CNN (8,6 FPS on A100, 55,2 % AP) and the transformer-based detector SWIN-L Cascade-Mask R-CNN (9,2 FPS on A100, 53,9 % AP). In terms of speed and accuracy, YOLOv7 also outperformed Scaled-YOLOv4, ViT-Adapter-B, YOLOR, YOLOX, DETR, YOLOv5, DINO-5scale-R50, and Deformable DETR.

A more recent version, YOLOv8, was developed by Ultralytics, the same company that released YOLOv5. With an input image size of 640 pixels, YOLOv8x achieved an average precision of 53,9 % when tested on the MS COCO dataset test-dev 2017 at a speed of 280 frames per second using TensorRT on an NVIDIA A100 (as opposed to 50,7 % for YOLOv5 on an input of the same size).(52) Moreover, figure 7 illustrates popular one- and two-stage deep learning algorithms used by researchers to detect objects.(53,54,55)
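The AP figures quoted for these detectors depend on deciding when a predicted box counts as a match for a ground-truth box, which is commonly done with intersection over union (IoU). The sketch below is illustrative only: the `(x1, y1, x2, y2)` box format, the example coordinates, and the 0.5 matching threshold are assumptions and are not taken from the cited benchmarks.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


# Hypothetical prediction and ground-truth boxes in pixel coordinates.
prediction = (10, 10, 50, 50)
ground_truth = (30, 30, 70, 70)
score = iou(prediction, ground_truth)  # 400 / 2800
is_match = score >= 0.5                # assumed matching threshold
```

Here the two boxes overlap only in one corner, so the prediction would not count as a true positive at a 0.5 threshold.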

Figure 6. Image Preprocessing Techniques Commonly Used by Researchers

Figure 7. Popular One- and Two-Stage Deep Learning Algorithms Used to Detect Objects

Table 1. Datasets Available Online That Include Images Captured Using a Drone-Based Camera

| Developers | Description | Content | Link to the dataset |
|---|---|---|---|
| Svanström, et al. 2021 (56) | A multi-sensor dataset for UAV spotting that contains visible and infrared videos as well as audio recordings. | The video section, which is around 311,3 megabytes (MB) in size, includes 650 visible and infrared videos of helicopters, UAVs, birds, and aircraft (285 visible and 365 infrared). Each video lasts 10 seconds, for a total of 203,328 labeled frames. The dataset also contains 90 audio recordings from the categories of background noise, helicopters, and UAVs. | |
| Vélez, et al. 2022 (57) | A drone RGB footage dataset for 3D photogrammetric reconstruction and precision agriculture. | The dataset consists of 248 photos captured during two flights above a Spanish pistachio plantation. The dataset is around 22,3 gigabytes (GB) in size. | |
| Maulit, et al. 2023 (58) | A dataset of multispectral drone images of barley, soybean, and wheat harvests in east Kazakhstan. | The drone photography dataset consists of two parts made up of eight files. The first part contains the raw drone-captured imagery, while the second part contains the processed orthomosaic photography with crop categories. The first part is around 85,1 GB in size and has 38,377 files, while the second part is approximately 26,84 GB in size and has 131 files. | https://doi.org/10.5281/zenodo.7749239 https://doi.org/10.5281/zenodo.7749362 |
| Suo, et al. 2023 (59) | A high-altitude infrared thermal dataset produced using drones for object detection. | The dataset contains 2,898 infrared thermal photos taken from 43,470 frames in many videos captured by drones in diverse contexts such as playgrounds, roadways, parking lots, and schools. The dataset is around 590 MB in size. | https://github.com/suojiashun/HIT-UAV-Infrared-Thermal-Dataset |
| Krestenitis, et al. 2022 (60) | A dataset of photographs taken by a drone for recognizing species and detecting weeds. | The dataset, which is around 436 MB in size, comprises 201 RGB photographs (1280×720) captured by a drone in a cotton-growing area in Larissa, Greece, during the early phases of the plants' growth. | https://zenodo.org/record/6697343#.YrQpwHhByV4 |
| Kraft et al. 2021 (61) | A dataset gathered using a drone specifically for detecting litter and trash from the air. | The dataset includes 772 photos containing 3718 manually annotated labels showing garbage in natural and urban areas including lawns, parks, and streets. The dataset is around 3 GB in size. | https://github.com/PUTvision/UAVVaste or |
| Wang, et al. 2022 (62) | A multiple-view dataset for automobile identification in complicated situations using drone-captured photos. | The dataset is around 14 GB in size and has 49,712 automobile examples (2193 large automobiles and 47,519 small automobiles) labeled with arbitrary quadrilateral and oriented bounding boxes. | |
| Rosende, et al. 2022 (63) | A dataset of drone-captured photos of road traffic in Spain for training machine vision algorithms to control traffic. | The dataset consists of 30,140 files including photos (15,070 photos) and descriptions. These photos include 155,328 annotated vehicles, comprising motorbikes (17,726) and automobiles (137,602). The dataset is around 35 GB in size. | https://www.kaggle.com/datasets/javiersanchezsoriano/traffic-images-captured-from-uavs |
| Brown et al. 2022 (64) | A dataset gathered by employing drones to analyze group size inaccuracy and availability for aerial coastal dolphin surveys in Australia. | The dataset contains around 20 hours of drone video collected following 32 encounters with Australian dolphins off the coast of northwestern Australia. The dataset is around 1,2 MB in size. | https://datadryad.org/stash/dataset/doi:10.5061/dryad.qbzkh18mq |
| Milz, et al. 2023 (65) | A drone-captured multidisciplinary 3D recognition dataset for the forest ecosystem. | The study area includes 1967 trees, and the dataset was gathered in the Hainich-Dün region in Germany. The raw sensor recording files on the Zenodo repository are around 217,0 GB in size, whereas the zipped file containing the metadata (which involves calibration) on the Dryad repository is around 34,32 MB in size. | https://datadryad.org/stash/dataset/doi:10.5061/dryad.4b8gthtft or |

Analysis of Relevant Studies in This Field

Table 2 includes a summary of recent studies that examined the use of UAVs for photo collection and image processing, as well as the findings and limitations.

Table 2. A Comparative Analysis of UAV-Based Imaging Application Systems

| Authors and Year | Purpose | Description | Findings | Limitations |
|---|---|---|---|---|
| Guo, et al. 2023 (66) | Introducing an enhanced YOLOv5s-based algorithm called UN-YOLOv5s. | The goal of this study is to improve the detection of small targets by using the UN-YOLOv5s algorithm. | 1. The UN-YOLOv5s algorithm has a greater mean average precision (mAP@0.5) than YOLOv8s, YOLOv5l, YOLOv5s, and YOLOv3. 2. Compared to YOLOv5l, UN-YOLOv5s lowered the amount of computation by 65,3 %. | 1. The mean average precision (mAP@0.5:0.95) of the UN-YOLOv5s algorithm is lower than that of the YOLOv8s method. 2. The precision rate of the UN-YOLOv5s algorithm is lower than that of the YOLOv8s algorithm. |
| Fei, et al. 2023 (67) | Estimating wheat production by combining data from three UAV-based sensor types (TIR, MS, and RGB) and using support vector machines, random forest, ridge regression, a deep neural network, and Cubist. | The primary purpose of this study is to build an ensemble learning framework to enhance the prediction accuracy of machine learning algorithms and to assess the multi-sensor data fusion of UAVs for forecasting the yield of wheat at the grain-filling stage. | 1. In the data fusion of three sensors, ridge regression, Cubist, DNN, and SVM all produced higher prediction accuracy than with individual-sensor and dual-sensor data (random forest being the exception). 2. In the data fusion of the three sensors, the ensemble models outperformed individual machine-learning models in terms of prediction accuracy. 3. The combination of ensemble learning and multi-sensor data enhanced the accuracy of wheat yield prediction. | 1. Ensemble learning requires extensive training for each base model and therefore takes significantly more time than individual models. 2. This study did not use any modern deep learning algorithms. |
| Di Sorbo, et al. 2023 (68) | Evaluating various models for safety-related phrases in drone platform issues, classifying accidents and dangers, and creating a dataset of 304 issues extracted from UAV platforms. | Evaluation of Random Forest, J48 decision tree, Naive Bayes, Logistic Regression, and SMO models for finding safety-related phrases in user-reported drone platform issues, as well as classifying accidents and dangers in safety-related issues encountered on open-source drone platforms. | 1. The findings reveal that the Random Forest results are comparable to the ones achieved by utilizing fastText. 2. The research discovered that the bulk of the dangerous scenarios in the studied UAV issue reports are due to software-related faults. | 1. No modern deep learning methods (such as BERT) were used in this research. The trials were only carried out using machine-learning algorithms provided by the Weka software. 2. The research did not show any preprocessing methods (such as lemmatization) for preparing the text for categorization. |
| Yadav, et al. 2023 (69) | Utilizing RGB photos obtained with a drone to identify volunteer cotton plants in a cornfield using a deep-learning algorithm. | The goals of this study are to discover whether the YOLOv3 algorithm can be used to detect volunteer cotton in a cornfield using RGB photos collected from a drone, as well as to investigate how YOLOv3 performs on photos with three different pixel sizes. | 1. Using a drone and computer vision, YOLOv3 can detect volunteer cotton plants at any of the three input picture sizes and can support real-time detection and mitigation. 2. YOLOv3 had a greater average detection accuracy (mAP) than the researchers' prior papers, where they utilized traditional machine-learning techniques and the simple linear iterative clustering superpixel segmentation algorithm. | 1. The researchers used just one algorithm to detect volunteer cotton plants in a cornfield. 2. The researchers did not conduct experiments using the most recent versions of the YOLO algorithm (such as YOLOv8), which might have enhanced the results. 3. The researchers only compared the results of their study to their previous works, not to similar papers that employed new deep learning algorithms. |
| Chen et al. 2023 (70) | Examining the use of drone remote sensing for ecological environment investigation and regional three-dimensional model development. | The research employs drone remote sensing to investigate the natural environment on a regional scale and build a 3D model. The data from the drone is gathered first. The geometric validity of the 3D model is then assessed using the Pix4D Mapper program. The researchers next analyzed the regional environment and assessed the trial results using the leaf area index, fractional vegetation cover, and normalized difference vegetation index data. The normalized difference vegetation index computation results are then utilized to examine the water environment in the research region. | 1. The high-resolution photos produced by drone remote sensing may address the issue of the hyperspectral photos' inadequate resolution in the satellite remote sensing approach and increase the precision of border discrimination. 2. This research resolved the problems of low timeliness in conventional environmental quality studies using the satellite remote sensing method. | 1. Due to structural restrictions on drones, low spectral resolution remains a concern when compared to satellite remote sensing technology, and this has an effect on data processing. 2. This work does not cover the 3D model's generalization or applications in different domains. |
| Beltran-Marcos, et al. 2023 (71) | Examining the feasibility of using multispectral photographs at various geographic and spectral resolutions for determining soil markers of fire severity. | The paper includes findings from an examination of soil biophysical characteristics in burnt regions using remote sensing image fusion methods effective for predicting the effects of burn at a small spatial scale. A decision tree algorithm was employed to evaluate which soil parameters (mean weight diameter, soil moisture content, and soil organic carbon) were most associated with soil burn damage. | 1. Spectrally and spatially upgraded photographs produced by merging satellite and drone images are helpful for assessing the main effects on soil characteristics in burnt forest zones where emergency actions are needed. 2. The fusion of multispectral data at various spatial and spectral resolutions, collected immediately after a wildfire by sensors onboard the Sentinel-2A satellite platform and a drone, yields an efficient technique for estimating soil organic carbon in heterogeneous and complex forest landscapes affected by mixed-severity fires. | 1. An increase in the spatial resolution of multispectral photography may result in a modest reduction in the spectral quality of the combined images. Poor performance in the soil moisture content projections may have occurred due to geographical variability in the pre-fire soil characteristics at the research location. 2. Using commercial satellite sensors with enhanced spatial and spectral resolution or aircraft hyperspectral surveys can improve the outcomes. 3. Further development is required to boost the fused datasets' predictive potential. 4. The results may not be generalizable due to logistical limitations in the field sampling, such as the limited time available for data gathering following the fire and the particular distribution of samples gathered from soil fields. |
| Chao-yu, et al. 2023 (72) | Introducing the YOLOX-tiny algorithm for detecting maize tassels in a convoluted background in the flowering phase. | The researchers employed a drone to gather photographs of maize tassels at various times. They also presented a SEYOLOX-tiny architecture that extends YOLOX by incorporating a Squeeze and Excitation Block. Furthermore, to train the object branch, the researchers used Binary Cross-Entropy Loss instead of Varifocal Loss to improve sensitivity to ignored aspects while decreasing the influence of negative variables like overlap, diverse sizes, and occlusion. | 1. The experimental findings in a real-world context demonstrate that this approach effectively recognized maize tassels, outperforming competing approaches in terms of prediction accuracy. 2. In comparison with earlier studies, the research's approach is more effective at solving the issue of incorrect recognition brought on by small size and occlusion. | 1. When comparing the YOLOX models in the trials, the researchers excluded several deep-learning models, such as the modified YOLOv5n, which proved effective at identifying maize tassels. 2. The proposed method included a few cases of missed identifications, involving high overlap, tiny size, and severe occlusion, that demonstrated the various extremes in the identification of maize tassels. |
| Cruz et al. 2023 (73) | Evaluating the efficiency of drone remote sensing in mapping different types of complicated coastal dune habitats. | Using multi-temporal photos collected over the course of the growing season, the researchers assessed how categorization accuracy varies. After that, they determined whether adding topography from drones enhances categorization accuracy. In order to distinguish between distinct coastal dune ecosystems, the researchers determined the best time of the growing season for drone data collection. The Random Forest algorithm was used to categorize the various habitat categories. | 1. This study demonstrated how to integrate drone data into the monitoring of complicated dune habitats and used the Random Forest classification algorithm to automatically and accurately map the habitats. 2. Based on the findings of the study, the researchers argue that for Irish coastal dune systems, the middle of the growing season is preferable to the late or early season for picture gathering. 3. The researchers recommend the adoption of their approach to aid in the spatiotemporal monitoring of ecosystems located in Annex I. | 1. Dunes with dune slacks and Salix repens were incorrectly classified as Annex I habitats. The classification of white dunes as recolonizing bare ground and exposed sand was similarly incorrect. White sands' outcome was in line with its slightly lower accuracy values. 2. Even with the addition of topographic data, distinguishing between white dunes and grey dunes is still problematic. 3. This study might benefit from adopting LiDAR technology to obtain optical and topographic data since it provides more precision due to its laser-based method of data collection. |
| Quan et al. 2023 (74) | Evaluating the application of a hybrid feature selection approach for categorizing tree species in natural secondary forests. The researchers combined laser scanning and hyperspectral data from drones. | From drone hyperspectral imagery, the researchers produced texture and spectral variables, and from laser scanning, they produced radiometric and detailed geometric variables. The researchers then chose the most beneficial feature sets from drone hyperspectral imagery and laser scanning and used these features to distinguish between different species of trees. Finally, the researchers investigated and determined how robust the selected features were using a simulation program. | 1. The findings showed that combining drone hyperspectral imaging with laser scanning enhanced tree species categorization accuracy when compared to relying on either one alone. 2. The simulation program demonstrated that the selected features had considerable robustness with pictures of varying spatial resolutions and in point clouds of varying densities. | 1. For several tree species, such as Tilia, Acer mono, and Phellodendron amurense, incorrect categorization was observed. The similarity in morphology and spectra between various tree species as well as variation among the species of a single tree, in addition to the impact of the limited sample size, are likely responsible for this. 2. Incorrect tree identification results in a decrease in crown architecture because the crowns of nearby trees overlap. 3. Leaf-off/on data from several seasons should be used to examine shifts in tree characteristics over the course of the phenological cycle and to increase the accuracy of species categorization. 4. More data should be gathered and experiments using deep learning algorithms that do not depend on feature selection should be conducted to enhance classification results. |
| Zhou et al. 2023 (75) | Proposing the Temporal Attention Gated Recurrent Unit to efficiently extract temporal information based on transformers and recurrent neural networks in an effort to solve the problem of basic feature aggregation approaches, which often introduce background interference into targets. | To allow high efficiency and accuracy for drone-based image object detection, the researchers created the Temporal Attention Gated Recurrent Unit (TA-GRU) YOLOv7 video object identification framework. In particular, the researchers created four modules: 1. TA-GRU to obtain better attention to particular features in the present frame and boost the accuracy of motion information extraction across frames. 2. The temporal deformable transformer layer, which improves the target features while reducing unnecessary computational costs. 3. A deformable alignment module that extracts motion data and aligns features using two frames of pictures. 4. A temporal attention-based fusion module that merges important temporal feature information with the present frame feature. | 1. By using a frame-by-frame alignment technique instead of aligning each adjacent frame with a frame of reference, this approach minimizes computation while also improving alignment accuracy. 2. Using the VisDrone2019-VID dataset, the TA-GRU YOLOv7 approach outperformed YOLOv7 in terms of mAP and detection across a wide range of categories. 3. The proposed approach successfully addressed the visual degradation in UAVs and made it possible to switch from effective static picture object recognition to video object recognition. 4. The combination of fusion modules, feature alignment, transformer layers, and recurrent neural networks resulted in a robust module for dealing with temporal features in UAV videos. | 1. The module struggled to handle information about long-term motion, and the deterioration of appearance features in different objects within drone photos made it difficult for the module to successfully learn temporal information. 2. For improvements, more accurate motion predictions and annotated data are required. |
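Several studies in Table 2 rely on spectral indices; Chen et al. (70), for example, use the normalized difference vegetation index (NDVI). As a brief illustration, NDVI is computed per pixel as (NIR − Red) / (NIR + Red). The sketch below assumes two co-registered reflectance bands of equal size; the function name, the sample values, and the choice to return 0.0 where both bands are zero are assumptions made for this example, not details from the cited study.

```python
def ndvi(nir, red):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red).

    Returns 0.0 where both bands are zero (an assumed convention that
    avoids division by zero).
    """
    result = []
    for nir_row, red_row in zip(nir, red):
        out_row = []
        for n, r in zip(nir_row, red_row):
            total = n + r
            out_row.append((n - r) / total if total != 0 else 0.0)
        result.append(out_row)
    return result


# Hypothetical 2x2 reflectance bands (values between 0 and 1).
nir_band = [[0.50, 0.40], [0.10, 0.0]]
red_band = [[0.10, 0.40], [0.30, 0.0]]
index = ndvi(nir_band, red_band)
```

Values close to 1 typically indicate dense green vegetation, while values near or below zero are typical of bare soil or water.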

Future Trends

Although advanced deep learning algorithms have been employed in drones, they have yet to be fully deployed because of drones' restricted processing and power capabilities.(76) As a result, developers should devise novel deep-learning-based approaches for drones, notably for SAR tasks. These approaches can help with learning and contextual judgments based on trajectory information. Moreover, fog, precipitation, and strong winds can negatively impact a UAV's visibility, endurance, and sensors for collision avoidance and navigation, even though numerous improvements have been made to UAV design to better adapt UAVs to such conditions.(76) Therefore, to guarantee the effective execution of tasks, further studies should evaluate the impact of poor weather on drone resilience and develop solutions.(77)

Drones are vulnerable to cyber-attacks on several levels, raising security concerns. Malicious actors take advantage of drone weaknesses, putting sensitive and personal data at risk. UAV manufacturers sometimes overlook privacy and security problems during production, indicating the necessity for further research in this area.(78) Furthermore, because drones are now often used in sectors containing critical data (such as infrastructure search, emergency services, and military), establishing security in drone communications and services is a demanding issue. As a result, effective techniques for providing secure and dependable services and communications in drone-related systems will be needed.(79)

Furthermore, because of their faint appearance and limited distinguishing features, small objects pose substantial hurdles in drone monitoring systems, resulting in a lack of critical information. This deficiency affects the tracking operation, frequently reducing accuracy and efficiency. Tracking can be complicated by a variety of circumstances: problems arise when objects move quickly, leave the field of view, or become occluded, and also when camera motion, rotation, non-rigid objects, scale changes, and noise are present. Despite significant developments in the field, these complications persist, particularly when detecting small objects from a far distance (80) or in poor weather conditions,(81) and they should be addressed in future research.

The use of drones in indoor conditions presents a number of technological challenges. These challenges include the size of the drone, the high machine/worker density, the height of barriers, GPS denial, and the risk of drone failures and collisions with indoor objects.(82) Although numerous studies have proposed solutions to the challenge of drone obstacle avoidance, many issues remain unresolved and call for new solutions and considerations. Future studies need to improve airborne sensor modeling and develop barrier information processing methods for drones' sensors. Future research also needs to focus on modeling complicated settings with irregular and U-shaped barriers and on the dynamics of drones in tight areas such as caves and indoor spaces. Moreover, future research should focus on the integration of heterogeneous data gathered from numerous sources. It is also important to focus on the drone controller features in route planning, to enhance drone path tracking accuracy to avoid barriers, and to continue developing 3D obstacle-avoiding models with improved detection accuracy.(9)

CONCLUSION

This paper provided a comprehensive analysis of UAVs in various applications, discussing current datasets, image preprocessing, and analysis methods for photos captured by UAV cameras. It reviewed recent studies related to UAV-monitoring applications and identified prospective research gaps in UAV-monitoring systems. The paper illustrated the common use of machine learning and deep learning techniques for processing aerial images captured by UAVs, aiding in crowd detection, object identification, density estimation, and tracking. This paper argued that UAVs are highly regarded in both public and civil sectors for their capacity to issue warnings and alerts during disasters such as floods, terrorist incidents, and interruptions in transportation and telecommunications. Additionally, the paper explored other UAV applications, including remote sensing, logistics, military operations, search and rescue, and law enforcement.

Finally, this paper demonstrated that outfitting UAVs with advanced image capture and processing technologies would provide numerous benefits, address many current limitations, and significantly aid in enhancing many other community services highlighted in this paper. Nonetheless, future research needs to tackle the current constraints associated with deploying UAVs for monitoring applications, as highlighted in this paper.

REFERENCES

1. Yao H, Qin R, Chen X. Unmanned Aerial Vehicle for Remote Sensing Applications—A Review. Remote Sens (Basel) [Internet]. 2019;11(12). Available from: https://www.mdpi.com/2072-4292/11/12/1443

2. Skogan WG. The future of CCTV. Vol. 18, Criminology and Public Policy. Wiley-Blackwell; 2019 Feb.

3. Husman MA, Albattah W, Abidin ZZ, Mustafah YMohd, Kadir K, Habib S, et al. Unmanned Aerial Vehicles for Crowd Monitoring and Analysis. Electronics (Basel) [Internet]. 2021;10(23). Available from: https://www.mdpi.com/2079-9292/10/23/2974

4. Chin R, Catal C, Kassahun A. Plant disease detection using drones in precision agriculture. Vol. 24, Precision Agriculture. Springer; 2023. p. 1663–82.

5. Nowak MM, Dziób K, Bogawski P. Unmanned Aerial Vehicles (UAVs) in environmental biology: A review. Eur J Ecol. 2019 Jan 1;4(2):56–74.

6. Sherstjuk V, Zharikova M, Sokol I. Forest Fire-Fighting Monitoring System Based on UAV Team and Remote Sensing. In: 2018 IEEE 38th International Conference on Electronics and Nanotechnology (ELNANO). 2018. p. 663–8.

7. Tuyishimire E, Bagula AB, Rekhis S, Boudriga N. Real-time data muling using a team of heterogeneous unmanned aerial vehicles. ArXiv [Internet]. 2019;abs/1912.08846. Available from: https://api.semanticscholar.org/CorpusID:209415203

8. Chennam KK, Aluvalu R, SS. An Authentication Model with High Security for Cloud Database. In: Das SK, Samanta S, DN, PBS, HAE, editors. Architectural Wireless Networks Solutions and Security Issues [Internet]. Singapore: Springer Singapore; 2021. p. 13–25. Available from: https://doi.org/10.1007/978-981-16-0386-0_2

9. Liang H, Lee SC, Bae W, Kim J, Seo S. Towards UAVs in Construction: Advancements, Challenges, and Future Directions for Monitoring and Inspection. Drones [Internet]. 2023;7(3). Available from: https://www.mdpi.com/2504-446X/7/3/202

10. International Forum to Advance First Responder Innovation’s (IFAFRI). The International Forum to Advance Capability Gap 6 “Deep Dive” Analysis [Internet]. 2019 [cited 2023 Nov 8]. Available from: https://www.internationalresponderforum.org/sites/default/files/2023-02/gap6_analysis.pdf

11. Rejeb A, Rejeb K, SJS, Treiblmaier H. Drones for supply chain management and logistics: a review and research agenda. International Journal of Logistics Research and Applications [Internet]. 2023;26(6):708–31. Available from: https://doi.org/10.1080/13675567.2021.1981273

12. Kunze O. Replicators, Ground Drones and Crowd Logistics A Vision of Urban Logistics in the Year 2030. Transportation Research Procedia [Internet]. 2016;19:286–99. Available from: https://www.sciencedirect.com/science/article/pii/S2352146516308742

13. The Business Research Company. Military Gliders and Drones Global Market Report 2022. 2022.

14. Gargalakos M. The role of unmanned aerial vehicles in military communications: application scenarios, current trends, and beyond. The Journal of Defense Modeling and Simulation [Internet]. 2024;21(3):313–21. Available from: https://doi.org/10.1177/15485129211031668

15. Scherer J, Yahyanejad S, Hayat S, Yanmaz E, Andre T, Khan A, et al. An Autonomous Multi-UAV System for Search and Rescue. Proceedings of the First Workshop on Micro Aerial Vehicle Networks, Systems, and Applications for Civilian Use [Internet]. 2015; Available from: https://api.semanticscholar.org/CorpusID:207225452

16. Mavic 2 Enterprise. POLICE OPERATIONS-MADE MORE EFFECTIVE BY DRONES. 2017.

17. UK Metropolitan Police. Use of Drones in policing [Internet]. [cited 2024 Aug 8]. Available from: https://www.met.police.uk/foi-ai/metropolitan-police/d/november-2022/use-of-drones-in-policing/

18. Royo P, Asenjo À, Trujillo J, Çetin E, Barrado C. Enhancing Drones for Law Enforcement and Capacity Monitoring at Open Large Events. Drones [Internet]. 2022;6(11). Available from: https://www.mdpi.com/2504-446X/6/11/359

19. Chen W, Shang G, Hu K, Zhou C, Wang X, Fang G, et al. A Monocular-Visual SLAM System with Semantic and Optical-Flow Fusion for Indoor Dynamic Environments. Micromachines (Basel) [Internet]. 2022;13(11). Available from: https://www.mdpi.com/2072-666X/13/11/2006

20. Mohsan SAH, Zahra Q ul A, Khan MA, Alsharif MH, Elhaty IA, Jahid A. Role of Drone Technology Helping in Alleviating the COVID-19 Pandemic. Vol. 13, Micromachines. MDPI; 2022.

21. Gu X, Zhang G. A survey on UAV-assisted wireless communications: Recent advances and future trends. Comput Commun [Internet]. 2023 Aug;208(C):44–78. Available from: https://doi.org/10.1016/j.comcom.2023.05.013

22. Thakur N, Nagrath P, Jain R, Saini D, Sharma N, Hemanth DJ. Autonomous pedestrian detection for crowd surveillance using deep learning framework. Soft Comput [Internet]. 2023 May;27(14):9383–99. Available from: https://doi.org/10.1007/s00500-023-08289-4

23. Alzahrani B, Barnawi A, et al. A Secure Key Agreement Scheme for Unmanned Aerial Vehicles-Based Crowd Monitoring System. Computers, Materials & Continua [Internet]. 2022;70(3):6141–58. Available from: http://www.techscience.com/cmc/v70n3/44981

24. Weng W, Wang J, Shen L, Song Y. Review of analyses on crowd-gathering risk and its evaluation methods. Journal of Safety Science and Resilience [Internet]. 2023;4(1):93–107. Available from: http://dx.doi.org/10.1016/j.jnlssr.2022.10.004

25. Amosa TI, Sebastian P, Izhar LI, Ibrahim O, Ayinla LS, Bahashwan AA, et al. Multi-camera multi-object tracking: A review of current trends and future advances. Neurocomputing [Internet]. 2023;552:126558. Available from: https://www.sciencedirect.com/science/article/pii/S0925231223006811

26. Zhou Y, Dong Y, Hou F, Wu J. Review on Millimeter-Wave Radar and Camera Fusion Technology. Sustainability [Internet]. 2022;14(9). Available from: https://www.mdpi.com/2071-1050/14/9/5114

27. Gündüz MŞ, Işık G. A new YOLO-based method for real-time crowd detection from video and performance analysis of YOLO models. J Real Time Image Process [Internet]. 2023;20(1):5. Available from: https://doi.org/10.1007/s11554-023-01276-w

28. Ye L, Zhang K, Xiao W, Sheng Y, Su D, Wang P, et al. Gaussian mixture model of ground filtering based on hierarchical curvature constraints for airborne lidar point clouds. Photogramm Eng Remote Sensing. 2021 Sep 1;87(9):615–30.

29. Wang Y, Xi X, Wang C, Yang X, Wang P, Nie S, et al. A Novel Method Based on Kernel Density for Estimating Crown Base Height Using UAV-Borne LiDAR Data. IEEE Geoscience and Remote Sensing Letters. 2022;19:1–5.

30. Zhang G, Yin J, Deng P, Sun Y, Zhou L, Zhang K. Achieving Adaptive Visual Multi-Object Tracking with Unscented Kalman Filter. Sensors [Internet]. 2022;22(23). Available from: https://www.mdpi.com/1424-8220/22/23/9106

31. Ammar A, Fredj H Ben, Souani C. Accurate Realtime Motion Estimation Using Optical Flow on an Embedded System. Electronics (Basel) [Internet]. 2021;10(17). Available from: https://www.mdpi.com/2079-9292/10/17/2164

32. Ruszczak B, Michalski P, Tomaszewski M. Overview of Image Datasets for Deep Learning Applications in Diagnostics of Power Infrastructure. Sensors [Internet]. 2023;23(16). Available from: https://www.mdpi.com/1424-8220/23/16/7171

33. Laghari AA, Jumani AK, Laghari RA, Li H, Karim S, Khan AA. Unmanned Aerial Vehicles Advances in Object Detection and Communication Security Review. Cognitive Robotics [Internet]. 2024; Available from: https://www.sciencedirect.com/science/article/pii/S2667241324000090

34. Qin S, Li L. Visual Analysis of Image Processing in the Mining Field Based on a Knowledge Map. Sustainability [Internet]. 2023;15(3). Available from: https://www.mdpi.com/2071-1050/15/3/1810

35. Li P, Khan J. Feature extraction and analysis of landscape imaging using drones and machine vision. Soft Comput [Internet]. 2023;27(24):18529–47. Available from: https://doi.org/10.1007/s00500-023-09352-w

36. Chang Y, Cheng Y, Murray J, Huang S, Shi G. The HDIN Dataset: A Real-World Indoor UAV Dataset with Multi-Task Labels for Visual-Based Navigation. Drones [Internet]. 2022;6(8). Available from: https://www.mdpi.com/2504-446X/6/8/202

37. Zhu Y, Tang H. Automatic Damage Detection and Diagnosis for Hydraulic Structures Using Drones and Artificial Intelligence Techniques. Remote Sens (Basel) [Internet]. 2023;15(3). Available from: https://www.mdpi.com/2072-4292/15/3/615

38. Yuan S, Sun B, Zuo Z, Huang H, Wu P, Li C, et al. IRSDD-YOLOv5: Focusing on the Infrared Detection of Small Drones. Drones [Internet]. 2023;7(6). Available from: https://www.mdpi.com/2504-446X/7/6/393

39. Sun H, Yang J, Shen J, Liang D, Ning-Zhong L, Zhou H. TIB-Net: Drone Detection Network With Tiny Iterative Backbone. IEEE Access. 2020;8:130697–707.

40. Chaki J, Dey N. A Beginner’s Guide to Image Preprocessing Techniques [Internet]. Dey N, editor. 2019. 1–97 p. Available from: https://www.crcpress.com/Intelligent-Signal-Processing-and-Data-

41. Chen J, Yang H, Xu R, Hussain S. Application and Analysis of Remote Sensing Image Processing Technology in Robotic Power Inspection. J Robot [Internet]. 2023 Jan;2023. Available from: https://doi.org/10.1155/2023/9943372

42. Jiang J, Zheng H, Ji X, Cheng T, Tian Y, Zhu Y, et al. Analysis and Evaluation of the Image Preprocessing Process of a Six-Band Multispectral Camera Mounted on an Unmanned Aerial Vehicle for Winter Wheat Monitoring. Sensors [Internet]. 2019;19(3). Available from: https://www.mdpi.com/1424-8220/19/3/747

43. Li M, Yang C, Zhang Q. Soil and Crop Sensing for Precision Crop Production (Agriculture Automation and Control). 1st ed. Springer; 2022. 1–336 p.

44. Mishra KN. An Efficient Palm-Dorsa-Based Approach for Vein Image Enhancement and Feature Extraction in Cloud Computing Environment. In: Al-Turjman F, editor. Unmanned Aerial Vehicles in Smart Cities [Internet]. Cham: Springer International Publishing; 2020. p. 85–106. Available from: https://doi.org/10.1007/978-3-030-38712-9_6

45. Maharana K, Mondal S, Nemade B. A review: Data pre-processing and data augmentation techniques. Global Transitions Proceedings [Internet]. 2022;3(1):91–9. Available from: https://www.sciencedirect.com/science/article/pii/S2666285X22000565

46. Elgendy M. Deep Learning for Vision Systems. 1st ed. Manning Publications; 2020. 1–410 p.

47. Dey S. Hands-On Image Processing with Python. Packt Publishing; 2018. 1–492 p.

48. J A, Suresh L P. A novel fast hybrid face recognition approach using convolutional Kernel extreme learning machine with HOG feature extractor. Measurement: Sensors [Internet]. 2023;30:100907. Available from: https://www.sciencedirect.com/science/article/pii/S266591742300243X

49. Boesch G. Image Recognition: The Basics and Use Cases (2024 Guide) [Internet]. 2023 [cited 2024 Apr 2]. Available from: https://viso.ai/computer-vision/image-recognition/

50. Adeshina SO, Ibrahim H, Teoh SS, Hoo SC. Custom Face Classification Model for Classroom Using Haar-Like and LBP Features with Their Performance Comparisons. Electronics (Basel) [Internet]. 2021;10(2). Available from: https://www.mdpi.com/2079-9292/10/2/102

51. Wang CY, Bochkovskiy A, Liao HYM. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In: 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2023. p. 7464–75.

52. Terven J, Córdova-Esparza DM, Romero-González JA. A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach Learn Knowl Extr [Internet]. 2023 Nov;5(4):1680–716. Available from: http://dx.doi.org/10.3390/make5040083

53. Mittal P, Singh R, Sharma A. Deep learning-based object detection in low-altitude UAV datasets: A survey. Image Vis Comput [Internet]. 2020;104:104046. Available from: https://www.sciencedirect.com/science/article/pii/S0262885620301785

54. Xiao Y, Tian Z, Yu J, Zhang Y, Liu S, Du S, et al. A review of object detection based on deep learning. Multimed Tools Appl [Internet]. 2020;79(33):23729–91. Available from: https://doi.org/10.1007/s11042-020-08976-6

55. Kaur J, Singh W. Tools, techniques, datasets and application areas for object detection in an image: a review. Multimed Tools Appl [Internet]. 2022;81(27):38297–351. Available from: https://doi.org/10.1007/s11042-022-13153-y

56. Svanström F, Alonso-Fernandez F, Englund C. A dataset for multi-sensor drone detection. Data Brief [Internet]. 2021;39:107521. Available from: https://www.sciencedirect.com/science/article/pii/S2352340921007976

57. Vélez S, Vacas R, Martín H, Ruano-Rosa D, Álvarez S. High-Resolution UAV RGB Imagery Dataset for Precision Agriculture and 3D Photogrammetric Reconstruction Captured over a Pistachio Orchard (Pistacia vera L.) in Spain. Data (Basel) [Internet]. 2022;7(11). Available from: https://www.mdpi.com/2306-5729/7/11/157

58. Maulit A, Nugumanova A, Apayev K, Baiburin Y, Sutula M. A Multispectral UAV Imagery Dataset of Wheat, Soybean and Barley Crops in East Kazakhstan. Data (Basel) [Internet]. 2023;8(5). Available from: https://www.mdpi.com/2306-5729/8/5/88

59. Suo J, Wang T, Zhang X, Chen H, Zhou W, Shi W. HIT-UAV: A high-altitude infrared thermal dataset for Unmanned Aerial Vehicle-based object detection. Sci Data [Internet]. 2023;10(1):227. Available from: https://doi.org/10.1038/s41597-023-02066-6

60. Krestenitis M, Raptis EK, Kapoutsis ACh, Ioannidis K, Kosmatopoulos EB, Vrochidis S, et al. CoFly-WeedDB: A UAV image dataset for weed detection and species identification. Data Brief [Internet]. 2022;45:108575. Available from: https://www.sciencedirect.com/science/article/pii/S235234092200782X

61. Kraft M, Piechocki M, Ptak B, Walas K. Autonomous, Onboard Vision-Based Trash and Litter Detection in Low Altitude Aerial Images Collected by an Unmanned Aerial Vehicle. Remote Sens (Basel) [Internet]. 2021;13(5). Available from: https://www.mdpi.com/2072-4292/13/5/965

62. Wang J, Teng X, Li Z, Yu Q, Bian Y, Wei J. VSAI: A Multi-View Dataset for Vehicle Detection in Complex Scenarios Using Aerial Images. Drones [Internet]. 2022;6(7). Available from: https://www.mdpi.com/2504-446X/6/7/161

63. Bemposta Rosende S, Ghisler S, Fernández-Andrés J, Sánchez-Soriano J. Dataset: Traffic Images Captured from UAVs for Use in Training Machine Vision Algorithms for Traffic Management. Data (Basel) [Internet]. 2022;7(5). Available from: https://www.mdpi.com/2306-5729/7/5/53

64. Brown AM, Allen SJ, Kelly N, Hodgson AJ. Using Unoccupied Aerial Vehicles to estimate availability and group size error for aerial surveys of coastal dolphins. Remote Sens Ecol Conserv [Internet]. 2023;9(3):340–53. Available from: https://zslpublications.onlinelibrary.wiley.com/doi/abs/10.1002/rse2.313

65. Milz S, Wäldchen J, Abouee A, Ravichandran AA, Schall P, Hagen C, et al. The HAInich: A multidisciplinary vision data-set for a better understanding of the forest ecosystem. Sci Data [Internet]. 2023;10(1):168. Available from: https://doi.org/10.1038/s41597-023-02010-8

66. Guo J, Liu X, Bi L, Liu H, Lou H. UN-YOLOv5s: A UAV-Based Aerial Photography Detection Algorithm. Sensors [Internet]. 2023;23(13). Available from: https://www.mdpi.com/1424-8220/23/13/5907

67. Fei S, Hassan MA, Xiao Y, Su X, Chen Z, Cheng Q, et al. UAV-based multi-sensor data fusion and machine learning algorithm for yield prediction in wheat. Precis Agric [Internet]. 2023;24(1):187–212. Available from: https://doi.org/10.1007/s11119-022-09938-8

68. Di Sorbo A, Zampetti F, Visaggio A, Di Penta M, Panichella S. Automated Identification and Qualitative Characterization of Safety Concerns Reported in UAV Software Platforms. ACM Trans Softw Eng Methodol [Internet]. 2023 Apr;32(3). Available from: https://doi.org/10.1145/3564821

69. Kumar Yadav P, Alex Thomasson J, Hardin R, Searcy SW, Braga-Neto U, Popescu SC, et al. Detecting volunteer cotton plants in a corn field with deep learning on UAV remote-sensing imagery. Comput Electron Agric [Internet]. 2023;204:107551. Available from: https://www.sciencedirect.com/science/article/pii/S0168169922008596

70. Chen C, Chen Y, et al. Correction: 3D Model Construction and Ecological Environment Investigation on a Regional Scale Using UAV Remote Sensing. Intelligent Automation & Soft Computing [Internet]. 2024;39(1):113–4. Available from: http://www.techscience.com/iasc/v39n1/55873

71. Beltrán-Marcos D, Suárez-Seoane S, Fernández-Guisuraga JM, Fernández-García V, Marcos E, Calvo L. Relevance of UAV and Sentinel-2 data fusion for estimating topsoil organic carbon after forest fire. Geoderma [Internet]. 2023;430:116290. Available from: https://www.sciencedirect.com/science/article/pii/S0016706122005973

72. Song CY, Zhang F, Li JS, Xie JY, Yang C, Zhou H, et al. Detection of maize tassels for UAV remote sensing image with an improved YOLOX Model. J Integr Agric [Internet]. 2023;22(6):1671–83. Available from: https://www.sciencedirect.com/science/article/pii/S2095311922002465

73. Cruz C, O’Connell J, et al. Assessing the effectiveness of UAV data for accurate coastal dune habitat mapping. Eur J Remote Sens [Internet]. 2023;56(1):2191870. Available from: https://doi.org/10.1080/22797254.2023.2191870

74. Quan Y, Li M, et al. Tree species classification in a typical natural secondary forest using UAV-borne LiDAR and hyperspectral data. GIsci Remote Sens [Internet]. 2023;60(1):2171706. Available from: https://doi.org/10.1080/15481603.2023.2171706

75. Zhou Z, Yu X, Chen X. Object Detection in Drone Video with Temporal Attention Gated Recurrent Unit Based on Transformer. Drones [Internet]. 2023;7(7). Available from: https://www.mdpi.com/2504-446X/7/7/466

76. Gugan G, Haque A. Path Planning for Autonomous Drones: Challenges and Future Directions. Drones [Internet]. 2023;7(3). Available from: https://www.mdpi.com/2504-446X/7/3/169

77. Mohsan SAH, Othman NQH, Li Y, Alsharif MH, Khan MA. Unmanned aerial vehicles (UAVs): practical aspects, applications, open challenges, security issues, and future trends. Intell Serv Robot [Internet]. 2023;16(1):109–37. Available from: https://doi.org/10.1007/s11370-022-00452-4