doi: 10.56294/dm2024.417

ORIGINAL

AI in the Sky: Developing Real-Time UAV Recognition Systems to Enhance Military Security


Mowafaq Salem Alzboon1 *, Muhyeeddin Alqaraleh2 *, Mohammad Subhi Al-Batah1 *

1Jadara University, Computer Science. Irbid, Jordan.

2Zarqa University, Software Engineering. Zarqa, Jordan.

Cite as: Mowafaq SA, Muhyeeddin A, Al-Batah MS. AI in the Sky: Developing Real-Time UAV Recognition Systems to Enhance Military Security. Data and Metadata. 2024; 3:.417. https://doi.org/10.56294/dm2024.417

Submitted: 24-01-2024 Revised: 16-05-2024 Accepted: 06-10-2024 Published: 07-10-2024

Editor: Adrián Alejandro Vitón Castillo

Corresponding Author: Mowafaq Salem Alzboon *

ABSTRACT

In an era where Unmanned Aerial Vehicles (UAVs) have become crucial in military surveillance and operations, the need for real-time and accurate UAV recognition is increasingly critical. The widespread use of UAVs presents various security threats, requiring systems that can differentiate between UAVs and benign objects such as birds. This study conducts a comparative analysis of advanced machine learning models to address the challenge of aerial classification across diverse environmental conditions without system redesign. Large datasets were used to train and validate the models, which include Neural Networks, Support Vector Machines, and ensemble methods such as Random Forest and Gradient Boosting Machines. These models were evaluated on accuracy and computational efficiency, the key factors for real-time application. The results indicate that Neural Networks provide the best performance, demonstrating high accuracy in distinguishing UAVs from birds. The findings suggest that Neural Networks have significant potential to enhance operational security and improve the allocation of defense resources. Overall, this research highlights the effectiveness of machine learning in real-time UAV recognition and advocates integrating Neural Networks into military defense systems to strengthen decision-making and security operations. Regular updates to these models are recommended to keep pace with advances in UAV technology, including more agile and stealthier designs.

Keywords: Crewless Aerial Vehicles (UAV); Real-Time Recognition; Surveillance; Security Operations; Image Recognition; Algorithm Performance.


INTRODUCTION

The last decade has seen an evolution in the military use of drones. Once used mainly for surveillance and reconnaissance, they are now also used to launch predawn strikes in target-specific operations.(1) Whether a small handheld drone or a large remotely piloted aircraft, a military drone serves one crucial purpose: providing an eye in the sky where humans cannot reach. This real-time aerial view can decide whether innocent lives are put at risk.(2) Loitering for hours over an area that seems quiet but may turn hostile at any moment, drones provide a kind of persistent surveillance that no earlier camera system could. These unprecedented capabilities, however, raise two crucial problems for military commanders. The first is cyberterrorism, an army commander's worst nightmare. The second is the drone itself, which the authors regard as one of the most severe weapons ever invented.(3)

The increasingly common use of UAV swarms in battle, illustrated by the Iranian barrage of drones and missiles against Israeli territory, demonstrates the strategic importance of robust UAV recognition systems. In attacks of this kind, where UAVs target military radars and intelligence systems, the attackers also exploit electronic and psychological warfare aimed at overwhelming the air defense and confusing the personnel operating it. For this reason, a practical, real-time recognition platform based on advanced computational technologies that can distinguish UAVs from birds is critical.(4)

In this context, research into advanced machine learning algorithms is pivotal. This article examines machine learning models and techniques that can help military and civilian security personnel counter the escalating threat of UAVs more effectively. Several algorithms, including deep neural networks, Support Vector Machines (SVMs), random forests, and gradient boosting machines, are compared to identify the best candidates for use in high-security zones and in areas where UAVs are prohibited. The models are also analyzed in terms of computational efficiency and false positive rate, and the best-performing models for real-time military detection systems are described in detail. The authors favor models that minimize false negatives, because a missed UAV is not acceptable in a military environment. Performance is evaluated in terms of accuracy, precision, recall, false positive (FP) rate, and computational complexity. The aim is to provide insights that support more robust and effective UAV detection and countermeasures in the future. Machine learning for this task has progressed significantly over the last decade, and as UAV use continues to grow, the authors expect these techniques to be refined and incorporated into current surveillance methods. Because this is a young area of research, the article does not attempt an exhaustive treatment.
However, the authors see an opportunity to offer useful new perspectives on machine learning for UAV detection, a topic that attracts considerable interest, and they welcome further insight into how machine learning can be applied to this problem.(5,6,7)
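The evaluation metrics listed above all derive from the binary confusion matrix. The following Python sketch shows one way to compute them, assuming UAV is the positive class and bird the negative class. The `ConfusionCounts` type and the function names are illustrative, not the authors' code, and the counts in the example are invented.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Binary confusion-matrix counts with UAV as the positive class."""
    tp: int  # UAVs correctly flagged as UAVs
    fp: int  # birds wrongly flagged as UAVs (false alarms)
    tn: int  # birds correctly rejected
    fn: int  # UAVs missed (classified as birds)


def safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def detection_metrics(c: ConfusionCounts) -> dict:
    """Accuracy, precision, recall, FP rate, miss rate and F1 from raw counts."""
    total = c.tp + c.fp + c.tn + c.fn
    precision = safe_div(c.tp, c.tp + c.fp)
    recall = safe_div(c.tp, c.tp + c.fn)          # true positive rate
    return {
        "accuracy": safe_div(c.tp + c.tn, total),
        "precision": precision,
        "recall": recall,
        "fp_rate": safe_div(c.fp, c.fp + c.tn),    # share of birds raised as alarms
        "fn_rate": safe_div(c.fn, c.fn + c.tp),    # share of UAVs missed (1 - recall)
        "f1": safe_div(2 * precision * recall, precision + recall),
    }


if __name__ == "__main__":
    # Hypothetical counts for illustration only, not results from this study.
    metrics = detection_metrics(ConfusionCounts(tp=90, fp=5, tn=95, fn=10))
    for name, value in metrics.items():
        print(f"{name:>9}: {value:.3f}")
```

With these hypothetical counts, accuracy is 92,5 % while 10 % of UAVs are still missed, which shows why accuracy alone can hide the false negatives the authors consider inadmissible.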

Problem Statement

The deployment of unmanned aerial vehicles (UAVs) in many military programs has highlighted the urgent need for sophisticated systems capable of detecting UAVs in real time and with high accuracy. A key challenge this study addresses is the development of efficient machine learning models that can reliably distinguish UAVs from other entities, including birds, across many environmental conditions. This capability is critical for strengthening security operations and response strategies in military and civilian contexts, where fast identification and classification of aerial objects are vital for maintaining airspace security and operational safety.

Article Objectives

This research aims to enhance the ability to distinguish between Unmanned Aerial Vehicles (UAVs) and birds in military surveillance operations. This improvement is crucial for optimizing resource allocation, improving response strategies, and preserving airspace security. The specific objectives are as follows. First, Develop Advanced Detection Algorithms: to create and refine algorithms that use machine learning and artificial intelligence techniques to differentiate between UAVs and birds by analyzing complex data on flight patterns, sizes, and other distinguishing characteristics. Second, Enhance Image Recognition Capabilities: to improve the accuracy of image recognition systems in detecting UAVs against diverse natural backgrounds, which entails training models on extensive datasets of UAV and bird images captured under various environmental conditions. Third, Minimize False Positives and Negatives: to reduce the rate of false alarms (false positives), in which birds are misidentified as UAVs, and of missed detections (false negatives), in which UAVs go undetected, thereby streamlining the performance of surveillance systems in high-security zones. Fourth, Implement Real-time Processing: to develop a system capable of processing and analyzing data in real time, enabling immediate and informed decision-making in dynamic and potentially adversarial environments. Fifth, Evaluate System Robustness in Simulated Environments: to rigorously test the developed systems in simulated environments that mimic real-world operational conditions, including scenarios involving UAV swarms and electronic warfare techniques.
Sixth, Assess Operational Integration: to determine the feasibility and effectiveness of integrating the developed technologies into existing military defense systems, evaluating both technical and operational aspects to ensure seamless deployment and functionality. By attaining these goals, the research aims to significantly advance the technological capabilities of military surveillance, contributing to national security and strategic defense effectiveness.
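The objective of minimizing false positives and negatives involves a trade-off, and the introduction treats missed UAVs as the more serious error. One common way to encode that priority, sketched below as an assumption rather than the study's procedure, is to cap the UAV miss rate on validation data and then pick the highest score threshold that respects the cap: because false alarms can only decrease as the threshold rises, this gives the fewest false alarms among thresholds meeting the cap. The function names and scores are hypothetical.

```python
from typing import Sequence


def count_false_alarms(scores: Sequence[float], labels: Sequence[int],
                       threshold: float) -> int:
    """Number of birds (label 0) whose score reaches the threshold."""
    return sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)


def pick_threshold(scores: Sequence[float], labels: Sequence[int],
                   max_fn_rate: float) -> float:
    """Highest threshold whose UAV miss rate stays within max_fn_rate.

    scores: model scores, higher means more UAV-like.
    labels: 1 for UAV, 0 for bird.
    A sample is flagged as UAV when score >= threshold.
    """
    n_pos = sum(1 for y in labels if y == 1)
    if n_pos == 0:
        raise ValueError("need at least one UAV example")
    best = min(scores)  # flags everything: no misses, most false alarms
    for t in sorted(set(scores)):
        missed = sum(1 for s, y in zip(scores, labels) if y == 1 and s < t)
        if missed / n_pos <= max_fn_rate:
            best = t  # keep the largest threshold that still meets the cap
    return best


if __name__ == "__main__":
    # Hypothetical validation scores; 1 = UAV, 0 = bird.
    scores = [0.95, 0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1]
    labels = [1, 1, 1, 1, 0, 0, 0, 0]
    for cap in (0.0, 0.25):
        t = pick_threshold(scores, labels, cap)
        print(f"miss-rate cap {cap:.2f}: threshold {t}, "
              f"false alarms {count_false_alarms(scores, labels, t)}")
```

In the toy data, forbidding any miss forces the threshold down to the weakest UAV score, which lets one bird through as a false alarm; tolerating a 25 % miss rate removes it.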

Contribution of the Article

This article makes several contributions to military surveillance technology, particularly in distinguishing between Unmanned Aerial Vehicles (UAVs) and birds. The improvements in detection and classification capabilities described here are poised to redefine strategic parameters in contemporary military operations. The key contributions are as follows. First, Advancement in Detection Algorithms: this research introduces new machine learning and artificial intelligence algorithms designed specifically to differentiate UAVs from birds. By focusing on advanced pattern recognition and analysis of flight dynamics, the study provides a robust framework for reducing misidentifications, thereby improving the accuracy of threat assessment in military operations. Second, Real-time Data Processing: the development of real-time processing capabilities represents a substantial step forward in surveillance technology. The ability to analyze and respond to aerial data immediately is critical in high-stakes environments where timely decision-making can significantly affect the outcome of military engagements. Third, Reduction of False Alarms: by substantially reducing false positives and negatives, this study limits the unnecessary deployment of defensive assets and the risk of overlooking real threats. This is essential for preserving operational integrity and optimizing the allocation of military resources. Fourth, Operational Integration and Testing: the article documents comprehensive testing protocols in simulated environments, ensuring that the proposed systems are both theoretically sound and practically viable. It also addresses the integration of these technologies into existing military frameworks, highlighting challenges and solutions and thereby paving the way for smoother transitions and implementations.
Fifth, Strategic Implications and Policy Recommendations: beyond technological advances, this study offers strategic insights and policy recommendations for military and defense entities. These include recommendations for deploying new technology, strategies for updating current practices, and considerations for future research and development in UAV detection. Sixth, Enhanced Airspace Security: by improving the ability to identify UAVs accurately, this research contributes to more robust airspace security, especially in sensitive or high-security areas. This capability is vital for protecting against espionage, unauthorized surveillance, and potential attacks. Overall, the contributions of this article are intended to support and inform ongoing efforts to strengthen security measures against the increasingly sophisticated use of UAVs in military contexts, ensuring that defense strategies evolve in tandem with emerging technological threats.
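Whether a model counts as real-time depends on its per-sample latency relative to the sensor's frame period. The Python sketch below is a hypothetical timing harness that compares mean inference time with the budget implied by a frame rate; the 25 fps figure and the placeholder model are assumptions for illustration, not part of the study.

```python
import time
from typing import Callable, Sequence


def frame_budget_ms(fps: float) -> float:
    """Processing time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def mean_latency_ms(predict: Callable[[object], object],
                    samples: Sequence[object], warmup: int = 3) -> float:
    """Average wall-clock time of one predict() call, in milliseconds."""
    if not samples:
        raise ValueError("need at least one sample")
    for s in samples[:warmup]:
        predict(s)  # untimed warm-up calls (caches, lazy initialisation)
    start = time.perf_counter()
    for s in samples:
        predict(s)
    return 1000.0 * (time.perf_counter() - start) / len(samples)


def meets_real_time(predict: Callable[[object], object],
                    samples: Sequence[object], fps: float) -> bool:
    """True if the mean per-sample latency fits within one frame period."""
    return mean_latency_ms(predict, samples) <= frame_budget_ms(fps)


if __name__ == "__main__":
    # Placeholder standing in for a trained UAV/bird classifier (hypothetical).
    def toy_model(features):
        return "uav" if sum(features) > 1.0 else "bird"

    data = [[0.2, 0.5, 0.4]] * 1000
    print(f"budget at 25 fps: {frame_budget_ms(25):.1f} ms")
    print(f"mean latency: {mean_latency_ms(toy_model, data):.4f} ms")
    print("real-time:", meets_real_time(toy_model, data, fps=25))
```

The mean is the simplest criterion. A deployed system would probably also check high-percentile latency, because a single slow frame can matter in a fast engagement.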

Article Organization

The article is organized to give a thorough understanding of machine learning applications in UAV detection. It begins with an introduction that sets the context by highlighting the importance of UAV recognition in military surveillance. This is followed by a literature review that summarizes existing studies and identifies advances and gaps in current methodologies. The methodology section details the experimental setup, including data collection and model development, and describes the evaluation metrics used to assess the models' performance. The results and analysis section then evaluates various models, including neural networks and gradient boosting machines, and analyzes their effectiveness in UAV recognition. The discussion interprets these results, considers their implications for real-world applications, and weighs the strengths and weaknesses of each model. The article concludes with a section on future work, offering directions for further research to improve the accuracy and efficiency of UAV detection systems and thereby ensuring a smooth progression from theoretical frameworks to practical applications.

Related Work

This study examines real observation scenarios and suggests an effective method to precisely differentiate drones from birds by analyzing features extracted from their micro-Doppler (MD) signatures. The classification accuracy decreased in simulations using rotating-blade and flapping-wing models due to the variety of drones and birds. However, combining features collected over extended observation periods notably enhanced accuracy. The study discovered that MD bandwidth was the most compelling feature, but it required a significant observation period to fully utilize the time-varying MD as a valuable feature.(8)
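The study does not define MD bandwidth here, so the Python sketch below assumes one possible definition, the RMS (second-moment) spectral width, and a toy echo model in which a blade or wing modulates the echo phase sinusoidally. A fast, large phase swing stands in for a rotating blade and a slow, small one for a flapping wing; the parameter values are hypothetical.

```python
import numpy as np


def rms_bandwidth_hz(signal: np.ndarray, fs: float) -> float:
    """RMS (second-moment) spectral width of a complex baseband echo, in Hz.

    One possible definition of "MD bandwidth"; the cited study may differ.
    """
    power = np.abs(np.fft.fft(signal)) ** 2
    freqs = np.fft.fftfreq(signal.size, d=1.0 / fs)
    p = power / power.sum()                 # normalised power spectrum
    centroid = np.sum(freqs * p)            # mean Doppler (bulk target motion)
    return float(np.sqrt(np.sum((freqs - centroid) ** 2 * p)))


def micro_motion_echo(duration_s: float, fs: float,
                      rate_hz: float, beta: float) -> np.ndarray:
    """Toy echo whose phase oscillates at rate_hz with peak deviation beta (rad).

    The instantaneous Doppler swings between -beta*rate_hz and +beta*rate_hz.
    """
    t = np.arange(int(round(duration_s * fs))) / fs
    return np.exp(1j * beta * np.sin(2.0 * np.pi * rate_hz * t))


if __name__ == "__main__":
    fs = 8000.0  # hypothetical sampling rate, Hz
    drone = micro_motion_echo(1.0, fs, rate_hz=20.0, beta=50.0)  # assumed values
    bird = micro_motion_echo(1.0, fs, rate_hz=5.0, beta=10.0)    # assumed values
    print(f"drone RMS MD bandwidth: {rms_bandwidth_hz(drone, fs):.1f} Hz")  # about 707 Hz
    print(f"bird  RMS MD bandwidth: {rms_bandwidth_hz(bird, fs):.1f} Hz")   # about 35 Hz
```

In this toy model the estimate is stable only when the observation window spans whole rotation or wingbeat cycles; shorter windows give values that depend on where in the cycle they start. This is in line with the finding that MD bandwidth needs a long observation period to be useful.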

Conventional object detectors are trained on a diverse range of familiar objects and are ready to use for many everyday tasks. Their training data typically shows objects prominently in the scene, making them easy to recognize. Objects captured by camera sensors in real-world situations, however, may be small, out of focus, or away from the center of the image, and many detectors fall short of the performance needed in uncontrolled environments. Specialized applications require additional training data to ensure accuracy, especially when small objects are in the scene. This paper introduces an object detection dataset containing videos of helicopter exercises in an unconstrained maritime environment. Special attention was given to including small helicopters in the field of view, creating a balanced mix of small, medium, and large objects for training detectors in this domain. The authors use the COCO evaluation metric to assess various detectors on their data and on the WOSDETC (Drone vs. Bird) dataset. They also analyze different augmentation techniques for improving detection accuracy and precision in this context. These comparisons provide valuable insights into adapting standard object detectors to data with the unconventional viewpoints of field-specific applications.(9)
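The COCO protocol mentioned above groups objects into small, medium, and large by area and scores detections by intersection over union (IoU), with its headline AP averaged over IoU thresholds from 0,50 to 0,95 in steps of 0,05. A minimal Python sketch of these two ingredients follows. It assumes axis-aligned boxes in (x1, y1, x2, y2) pixel coordinates and uses box area as the object area; COCO annotations carry their own area field, and its boundary handling differs slightly from the strict inequalities used here.

```python
from collections import Counter
from typing import Iterable, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels

SMALL_MAX = 32 ** 2   # COCO "small": area below 32 x 32 pixels
MEDIUM_MAX = 96 ** 2  # COCO "medium": area below 96 x 96 pixels


def box_area(b: Box) -> float:
    """Area of an axis-aligned box; degenerate boxes have zero area."""
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def size_bucket(b: Box) -> str:
    """COCO-style size category, using box area as the object area."""
    a = box_area(b)
    if a < SMALL_MAX:
        return "small"
    if a < MEDIUM_MAX:
        return "medium"
    return "large"


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


def bucket_counts(boxes: Iterable[Box]) -> Counter:
    """How many ground-truth boxes fall into each size category."""
    return Counter(size_bucket(b) for b in boxes)


if __name__ == "__main__":
    # Hypothetical annotations: a distant helicopter, a mid-range one, a close one.
    gt = [(100, 100, 112, 108), (40, 40, 100, 90), (0, 0, 200, 150)]
    print(bucket_counts(gt))
    print(f"IoU: {iou((0, 0, 10, 10), (5, 0, 15, 10)):.3f}")
```

Checking the size mix with a helper like `bucket_counts` is one way to verify the balance of small, medium, and large objects that the dataset aims for.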

Wild gliding birds evade obstacles or predators by folding and twisting their wings to perform a rapid roll. The authors explore whether a bird-inspired morphing technique can improve the roll rate of drones. Folding of the wings is imitated by sweeping the wings back asymmetrically, and wing twisting is simulated by deflecting the ailerons. The paper examines the effects of wing morphing on the drone's centroid, inertia matrix, and aerodynamic characteristics and develops a nonlinear dynamic model. It also proposes a cooperative roll-control strategy that combines wing morphing with aileron deflection, together with a flight controller that uses this strategy. Outdoor flight tests demonstrated the effectiveness of the cooperative strategy and the dynamic model.(10)

Wildlife population monitoring poses a significant challenge in the face of global biodiversity loss. Innovative tools such as drones, or unmanned aerial vehicles/systems (UAV/UAS), offer promising opportunities. The effectiveness of high-resolution imagery from Unmanned Aerial Systems (UAS) for censusing terrestrial mammals or birds visible in images is now broadly recognized. Whether UAS can detect inconspicuous species, such as small birds hidden under the forest canopy, remains uncertain. Bioacoustics can address this problem for acoustically active species such as bats and birds. Compared with standard methods, UAS offer a promising solution that can be applied at a larger scale with less risk to operators, especially in challenging terrain such as forest canopies or complex topography. This study proposes a methodological framework for evaluating the capabilities of UAS in bioacoustic surveys of birds and bats, using low-cost audible and ultrasound recorders attached to a low-cost quadcopter UAS (DJI Phantom 3 Pro). The proposed procedure can easily be replicated in other settings to evaluate how alternative UAS bioacoustic recording systems perform for the target species and a given UAS configuration. The protocol assesses the sensitivity of UAS approaches by estimating the effective detection radius for various species at different flight heights. The study indicates a high potential for bioacoustic monitoring of birds. For bats, however, effectiveness is less clear, partly because of quadcopter noise, specifically from the electronic speed controller (ESC), and partly because the experimental setup used a directional speaker with limited call intensity.
Advancements like utilizing a winch to increase the distance between the UAS and the recorder during UAS sound recordings or creating an innovative platform like a plane-blimp hybrid UAS could help address these problems.(11)

Transfer learning is a modern deep learning technique that can significantly reduce the time required to train deep networks. It can be executed by utilizing pre-trained deep networks. This paper will introduce a novel model for classifying drones and birds using transfer learning. The authors adapt and assess three commonly used pre-trained deep models on a dataset containing images of drones and birds. Each pre-trained network's performance is evaluated and contrasted. Results indicate that pre-trained networks can effectively classify the dataset in question.

Moreover, ResNet18 achieves higher accuracy than the other networks assessed, consistently exceeding 98 % in both accuracy and F-score across all scenarios. The other models also perform well. The problem addressed has significant applications, particularly in security, defense, and surveillance, where precise categorization of drones and birds is crucial.(12)

This paper presents a method for efficiently classifying multiple drones and birds by taking the observation scenario into account. The simulation results show that the training database for the convolutional neural network classifier must include combinations of drones and birds.(13)

The study introduces a drone classification method based on convolutional neural networks. A large and varied real dataset is a crucial requirement for high-accuracy neural network classification, so the first objective of the study was to build an extensive database of micro-Doppler spectrogram images of drones and birds in flight. Two datasets contain the same images, one in RGB and the other in grayscale. The GoogLeNet architecture was trained on the RGB dataset, and the grayscale dataset was used to train a purpose-built series network. The data were labeled in two ways: with four classes (drone, bird, clutter, and noise) and with two (drone and non-drone). During training, 20 % of the dataset was held out for validation. After training, the models were evaluated on new and unlabeled datasets. The validation and testing accuracies of the developed series network are 99,6 % and 94,4 %, respectively, for the four classes and 99,3 % and 98,3 %, respectively, for the two classes. The GoogLeNet model achieved validation and testing accuracies of approximately 99 % in all scenarios.(14)
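The two labeling schemes and the 20 % validation hold-out described above can be expressed compactly. The Python sketch below collapses the four-class labels into drone versus non-drone and holds out 20 % of each class. Stratifying by class is an assumption, since the study states only the overall fraction, and the function names are hypothetical.

```python
import random
from collections import Counter

# Mapping from the four-class scheme to the two-class scheme.
FOUR_TO_TWO = {"drone": "drone", "bird": "non-drone",
               "clutter": "non-drone", "noise": "non-drone"}


def to_binary(labels):
    """Collapse four-class labels into drone / non-drone."""
    return [FOUR_TO_TWO[y] for y in labels]


def stratified_split(items, labels, val_fraction=0.2, seed=0):
    """Hold out val_fraction of each class for validation; return (train, val)."""
    rng = random.Random(seed)
    by_class = {}
    for item, y in zip(items, labels):
        by_class.setdefault(y, []).append(item)
    train, val = [], []
    for y, group in by_class.items():
        rng.shuffle(group)  # random order within each class
        n_val = round(len(group) * val_fraction)
        val += [(x, y) for x in group[:n_val]]
        train += [(x, y) for x in group[n_val:]]
    return train, val


if __name__ == "__main__":
    # Hypothetical spectrogram IDs, 100 per class.
    labels = ["drone"] * 100 + ["bird"] * 100 + ["clutter"] * 100 + ["noise"] * 100
    items = list(range(len(labels)))
    binary = to_binary(labels)
    train, val = stratified_split(items, binary)
    print(Counter(binary), len(train), len(val))
```

The collapsed problem is imbalanced (three source classes feed "non-drone"), which is one reason to stratify the split so that both classes keep the same proportion in training and validation.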

Deep learning is a powerful, cutting-edge method for target recognition, but deep learning networks face challenges of trustworthiness and reliability in radar target recognition. This letter proposes an inductive conformal prediction (ICP)-enhanced long short-term memory spiking neural network (LSTM-SNN). It combines conformal prediction from statistical learning theory with deep learning and is used to distinguish birds from drones with radar. The proposed method gives accurate recognition results for drones and birds and provides a confidence and a credibility value for each identification. It also produces a confidence interval that contains the true value of the predicted parameter at a specified confidence level of 98 %. The benefits of the ICP-enhanced LSTM-SNN were demonstrated on bird-detection datasets collected by an airport radar.(15)
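Inductive conformal prediction can wrap any probabilistic classifier. Nonconformity scores from a held-out calibration set turn each candidate label into a p-value; credibility is the largest p-value and confidence is one minus the second largest. The Python sketch below is a generic ICP under those standard definitions. It assumes the classifier outputs class probabilities and uses one minus the predicted probability as the nonconformity measure. It does not reproduce the cited LSTM-SNN, and the calibration values are invented. A significance level of 0,02 corresponds to the 98 % confidence level mentioned above.

```python
from typing import Dict, List, Sequence


def calibration_scores(cal_probs: Sequence[Dict[str, float]],
                       cal_labels: Sequence[str]) -> List[float]:
    """Nonconformity of each calibration example: 1 - probability of its true label."""
    return [1.0 - probs[label] for probs, label in zip(cal_probs, cal_labels)]


def p_values(test_probs: Dict[str, float],
             cal_scores: Sequence[float]) -> Dict[str, float]:
    """Conformal p-value for every candidate label of one test example."""
    n = len(cal_scores)
    result = {}
    for label, prob in test_probs.items():
        alpha = 1.0 - prob  # nonconformity if this label were the true one
        at_least_as_strange = sum(1 for a in cal_scores if a >= alpha)
        result[label] = (at_least_as_strange + 1) / (n + 1)
    return result


def icp_predict(test_probs: Dict[str, float], cal_scores: Sequence[float],
                significance: float = 0.02) -> dict:
    """Point prediction, prediction set, credibility and confidence (generic ICP)."""
    pv = p_values(test_probs, cal_scores)
    ranked = sorted(pv.values(), reverse=True)
    return {
        "label": max(pv, key=pv.get),                      # largest p-value
        "set": {y for y, p in pv.items() if p > significance},
        "credibility": ranked[0],
        "confidence": 1.0 - (ranked[1] if len(ranked) > 1 else 0.0),
    }


if __name__ == "__main__":
    # Invented calibration nonconformity scores (hypothetical).
    cal = [0.05, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
    print(icp_predict({"drone": 0.85, "bird": 0.15}, cal))
```

With only nine calibration scores the smallest attainable p-value is 1/10, so at the 98 % level both labels remain in the prediction set. At least 49 calibration examples are needed before a single-label set is even possible at that level.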

Drones have become a controversial public interest technology that has gained significant media attention due to the emergence of the "humanitarian drone" in recent years. Drones can potentially revolutionize health supply logistics in the aid sector, but routine drone delivery is still a new and unproven concept. This paper discusses a field study in 2019 in Malawi, where drones were used to overcome the challenge of delivering medical supplies to remote areas. It also highlights the dual benefits observed when using drones in low-resource settings. The paper aims to analyze the effects of implementing a systemic change in health supply chain systems using a real-world case study and an ethical perspective. In conclusion, there is a need for more thoughtful approaches in critically examining and providing structured guidance for the responsible evaluation of medical cargo drones.(16)

This paper discusses the classification performance of different features extracted from the micro-Doppler (MD) signatures of drones and birds in realistic flight situations. The classification results show that MD bandwidth is the most effective feature when the rotating blade and the flapping wing are modeled with plate and ellipsoid shapes.(17)

Consumer drones have recently intruded into airports and present a potential hazard to aviation safety. Radar is a practical remote sensing instrument for detecting and monitoring airborne drones, and when a radar is used to detect drones, echoes from flying birds are treated as clutter. However, little research has addressed how radar reflections from birds affect drone detection, how similar birds and drones are in radar cross section (RCS) and flight characteristics, and why birds in flight complicate radar signal identification. In this study, 3900 × 256 Ku-band radar echoes of flying birds and consumer drones were collected. The targets include a pigeon, a crane, a waterfowl, and a DJI Phantom 3 Vision drone. The authors analyzed the maximum detectable range of birds and drones, as well as the time series and Doppler spectra of their radar echoes, using data from targets both approaching and receding from the radar. The statistical findings suggest that flying birds and drones exhibit comparable RCS, velocity range, signal fluctuation, and signal amplitude. The authors' radar automatic target recognition (ATR) results indicate that the identification probability of airborne drones decreases when flying birds interfere with the radar signal. These findings confirm that flying birds are the primary source of interference for radars detecting and identifying airborne drones.(18)

Efficient technology is required to detect drones in airspace because of the sharp rise in affordable consumer drones and their misuse. This paper discusses the distinct radar micro-Doppler features of drones and birds, which arise from rotating propeller blades and from wingbeats, respectively. These characteristics can be used to distinguish a drone from a bird and to analyze each individually. Experimental measurements of the micro-Doppler signatures of various drones and birds are presented and analyzed. The data were gathered with two radars operating at different frequencies, K-band (24 GHz) and W-band (94 GHz), using three drone models and four bird species of various sizes. The results show that a phase-coherent radar system can accurately capture the micro-Doppler signatures of flying targets at both K-band and W-band frequencies. Comparing the signatures at the two frequencies shows that the micro-Doppler return from the W-band radar has a higher signal-to-noise ratio (SNR), while the micro-Doppler features in the K-band returns effectively reveal the micro-motion characteristics of drones and birds.(19)
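The frequency dependence above follows from the monostatic Doppler relation f_D = 2v/λ with λ = c/f: the same blade or wing velocity produces a Doppler shift proportional to the carrier frequency. The Python sketch below evaluates this for a hypothetical blade-tip speed of 60 m/s (an assumed value, not a measurement from the cited study) and derives the minimum pulse repetition frequency (PRF) needed to sample the resulting ±f_D span without ambiguity using complex (I/Q) samples.

```python
C = 299_792_458.0  # speed of light, m/s


def wavelength_m(carrier_hz: float) -> float:
    """Radar wavelength for a given carrier frequency."""
    return C / carrier_hz


def doppler_shift_hz(radial_speed_mps: float, carrier_hz: float) -> float:
    """Monostatic Doppler shift 2v/lambda for a scatterer moving radially at v."""
    return 2.0 * radial_speed_mps / wavelength_m(carrier_hz)


def min_prf_hz(max_speed_mps: float, carrier_hz: float) -> float:
    """Smallest PRF whose unambiguous I/Q interval [-PRF/2, PRF/2) covers +/- f_D."""
    return 2.0 * doppler_shift_hz(max_speed_mps, carrier_hz)


if __name__ == "__main__":
    tip_speed = 60.0  # hypothetical blade-tip speed, m/s
    for name, f in (("K-band", 24e9), ("W-band", 94e9)):
        print(f"{name}: f_D = {doppler_shift_hz(tip_speed, f) / 1e3:.1f} kHz, "
              f"min PRF = {min_prf_hz(tip_speed, f) / 1e3:.1f} kHz")
```

At 94 GHz the shift is 94/24, or about 3,9 times, larger than at 24 GHz for the same motion. At a fixed Doppler resolution this spreads the signature over more bins, but it also raises the sampling-rate requirement.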

Drones, also called unmanned aerial vehicles, are increasingly used in ecological research, especially to reach sensitive wildlife in remote locations. Impact studies are urgently needed to produce recommendations for best practice. The authors examined how the color, speed, and approach angle of drones affected the behavior of mallards (Anas platyrhynchos) in a semi-captive setting and of wild flamingos (Phoenicopterus roseus) and common greenshanks (Tringa nebularia) in a wetland environment. They conducted 204 approach flights with a quadcopter drone, and in 80 % of them the drone could approach within 4 meters without disturbing the birds. Approach speed, drone color, and the number of flights did not significantly affect bird behavior, but birds responded more to drones approaching from above. The authors advise launching drones more than 100 meters from birds and adjusting the approach distance to the species. This study represents an initial effort to use drones effectively in wildlife research. Future research should evaluate how different drones affect different groups of organisms and track physiological stress indicators in animals exposed to drones according to group size and reproductive status.(20)

Many wild birds can glide effortlessly in thermal updrafts for long periods without flapping their wings, and autonomous soaring can likewise greatly increase the range and endurance of small drones. This paper presents a method for predicting thermal updraft centers that combines the extended Kalman filter (EKF) with ordinary least squares (OLS) to build an autonomous soaring system for small drones, and it integrates an adaptive step-size update strategy into the EKF. In simulated experiments, the proposed technique is compared with existing EKF-based thermal updraft prediction methods. The results show that the proposed method offers low computational complexity, fast convergence, and better stability in weak thermal updrafts. These benefits arise from the OLS step, which provides the EKF with an estimated distribution of the thermal updraft around the drone, giving the EKF enough information to update the thermal updraft center continuously in real time. The adaptive step-size update further improves the convergence rate. Flight tests on the Talon fixed-wing drone platform were conducted to evaluate the autonomous soaring system. During the flight test, the drone successfully exploited thermal updrafts in the area, soaring and replenishing its energy, and used its propulsion system for only 8 minutes of the 40-minute flight (20 % of the flight time). These tests demonstrated the effectiveness of the autonomous soaring system based on OLS-assisted EKF prediction of the thermal updraft center. Suggestions for improving the work are proposed after comparing the simulation results with those of the flight experiment.(21)
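The OLS step above can be illustrated with a simple thermal model. The Python sketch below assumes a Gaussian updraft profile, w = W0·exp(-((x - xc)² + (y - yc)²)/R²), which is an assumption rather than the cited paper's model. Taking logarithms turns this into a linear least-squares problem in the features 1, x, y, and x² + y², from which the center (xc, yc), peak strength W0, and radius R follow in closed form. The EKF stage and the adaptive step size are omitted.

```python
import numpy as np


def fit_thermal_center(x, y, w):
    """Estimate thermal centre (xc, yc), peak W0 and radius R by ordinary least squares.

    Assumed model: ln w = a + b*x + c*y + d*(x^2 + y^2), with
    d = -1/R^2, b = 2*xc/R^2, c = 2*yc/R^2, a = ln W0 - (xc^2 + yc^2)/R^2.
    Only samples with positive updraft can be used (the model takes log w).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.asarray(w, dtype=float)
    mask = w > 0
    x, y, w = x[mask], y[mask], w[mask]
    design = np.column_stack([np.ones_like(x), x, y, x ** 2 + y ** 2])
    (a, b, c, d), *_ = np.linalg.lstsq(design, np.log(w), rcond=None)
    if d >= 0:
        raise ValueError("samples do not describe a peaked updraft")
    r2 = -1.0 / d                      # R^2
    xc, yc = b * r2 / 2.0, c * r2 / 2.0
    w0 = np.exp(a + (xc ** 2 + yc ** 2) / r2)
    return float(xc), float(yc), float(w0), float(np.sqrt(r2))


if __name__ == "__main__":
    # Hypothetical noise-free samples from a thermal centred at (30, -20) m.
    rng = np.random.default_rng(0)
    x = rng.uniform(-50, 100, 200)
    y = rng.uniform(-80, 40, 200)
    w = 3.0 * np.exp(-((x - 30) ** 2 + (y + 20) ** 2) / 50.0 ** 2)
    print(fit_thermal_center(x, y, w))  # close to (30, -20, 3.0, 50.0)
```

With real, noisy variometer data the log transform weights weak-updraft samples heavily, which is one reason a recursive filter such as the EKF is useful for refining the OLS estimate over time.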

The rapid expansion of the worldwide drone industry has drawn significant attention to the security risks associated with drones. Because many types of drones share the airspace, the ability to tell them apart is crucial for security purposes. This paper proposes a robust and accurate individual identification (ID) method for various non-cooperative drones. A novel identification feature and extraction method are proposed for obtaining features from waveforms without prior knowledge. The authors use deep learning and a data augmentation technique to build a robust model that distinguishes between individual drones of the same type. They also develop a multi-head deep learning model, based on multi-task learning, that identifies various drone types without enlarging the model. The experiment includes 18 drones of three different types and their respective controllers. The results show that the method performs very well in identifying individual drones of various types and in detecting intrusive drones, while remaining resilient to noise and channel variations. The approach also performs strongly in identifying drone controllers that emit frequency-hopping signals.(22)

Uncrewed aerial vehicles are now used more frequently than crewed aircraft for aerial wildlife surveys. However, the most effective and least disruptive survey protocols with respect to wildlife behavior remain uncertain. The authors evaluated the effect of drone flights on non-target species to help develop a survey for Florida mottled ducks (MODU). The study aimed to assess the effect of flight altitude on the behavior of marsh birds and on a surveyor's ability to identify bird species, and to test protocols for future MODU surveys. The authors flew 120 consecutive transects at altitudes ranging from 12 to 91 meters, analyzing the variables influencing the detection, identification, and movements of non-target species. Drone flights rarely disturbed marsh birds. The authors had difficulty reliably detecting birds at the two highest altitudes and difficulty identifying the species of birds seen in videos captured at 30 meters. The results indicate that mottled ducks can be surveyed from altitudes between 12 and 30 meters without a significant effect on nearby marsh birds. Moreover, large non-target marsh bird species may be identifiable from videos captured during MODU drone surveys.(23)

Radar-based drone surveillance systems must be able to differentiate between birds and drones to function effectively. Here, convolutional neural networks (CNNs) are used to distinguish between bird and drone spectrograms. The classifier is evaluated on real data with a low signal-to-background ratio (SBR) collected using an L-band staring radar. This improves understanding of the classifier's capacity to generalize to novel drone models and different clutter environments. The study also emphasizes the importance of SBR for drone surveillance by determining the size of drones that can be correctly classified and the range at which they can be detected.(24)

Precise identification of distant drones in a crowded setting with numerous airborne objects, such as birds, is critical in designing anti-drone systems. The study introduced a new object detection model, KITYOLO, intended to accurately detect drones and distinguish them from other airborne objects in various weather conditions. The custom dataset comprises drone images and bird samples created manually under sunny, cloudy, and evening conditions. KITYOLO demonstrated higher precision and recall than YOLOv5 across the different conditions, achieving an overall F1-score of 98 % versus 91,9 %, while also remaining efficient in terms of speed and memory usage.(25)

28S rRNA gene sequences from 16 isolates of digenean parasites of the family Dicrocoeliidae, obtained from 16 bird species in the Czech Republic, were used for phylogenetic analysis. Comparisons with GenBank sequences suggest that the genus Brachylecithum is paraphyletic, indicating the need for additional validation and potential systematic revision. Although partial 28S rDNA is relatively conserved, the analyses indicate the following synonymies: Lutztrema attenuatum is equivalent to L. monenteron and L. microstomum, and Brachylecithum lobatum is the same as B. glareoli. Zonorchis petiolatus has been transferred to the genus Lyperosomum, where it is considered a junior synonym of L. collurionis. The study revealed the current complexity of the systematics of the family Dicrocoeliidae. The group's morphology is variable and lacks distinct diagnostic characters, making it difficult to identify specimens accurately to species or even genus level. The identification of GenBank isolates is also unreliable. Comprehensive isolate sampling combining molecular and morphological analyses is required to clarify relationships within the family.(26)

RANGO is a drone detection algorithm designed to identify drones in complex images where the target is difficult to distinguish from the background. RANGO uses a deep learning framework with a Preconditioning Operation (PREP) that highlights the target by focusing on the difference between the target gradient and the background gradient, with the aim of emphasizing features that benefit classification. After preprocessing, RANGO applies different convolution kernels to detect the presence of a drone. The authors evaluate RANGO on a drone image dataset that combines multiple existing datasets with additional samples of birds and planes, and compare it with existing methods to demonstrate its superiority. RANGO achieves a 6,6 % higher mean Average Precision (mAP) than the YOLOv5 solution in detecting camouflaged drones, and improves mAP by approximately 2,2 % on the standard dataset, showing its effectiveness in various applications.(27)

METHOD

Dataset Description

The study used the "Birds vs. Drone Dataset" available on Kaggle, contributed by Harsh Walia. This dataset contains two separate folders of bird and drone images, respectively, which are essential for training the authors' machine learning model to differentiate between these classes. The bird images were sourced through web scraping, while the drone images were obtained from another dataset. Each folder contains a wide range of images of the subjects against natural sky backgrounds, providing the visual data necessary for training the authors' model.(28)

Data Preprocessing

Given the diverse nature of the images in terms of background, orientation, and scale, the following preprocessing steps were applied to standardize the dataset for practical training.(5)

· Image Resizing: all images were resized to a uniform dimension of 224x224 pixels to ensure consistency in input size for the neural network.

· Normalization: pixel values of each image were normalized to have values between 0 and 1, improving the convergence speed during training.

· Augmentation: to increase the robustness of our model and to prevent overfitting, image augmentation techniques such as rotation, zoom, and horizontal flipping were applied.
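The three preprocessing steps can be sketched with NumPy alone. This is an illustration under stated assumptions: nearest-neighbour resizing and 90-degree rotations stand in for interpolated resizing, arbitrary-angle rotation and zoom. In a Keras pipeline these steps would more typically be expressed with layers such as `tf.keras.layers.Resizing`, `Rescaling`, `RandomFlip`, `RandomRotation` and `RandomZoom`. All function names below are hypothetical.

```python
import numpy as np

TARGET_SIZE = 224  # from the text: images resized to 224x224 pixels


def resize_nearest(image, size=TARGET_SIZE):
    """Nearest-neighbour resize of an H x W x C array to size x size x C."""
    h, w = image.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return image[rows[:, None], cols[None, :]]


def normalise(image):
    """Scale 8-bit pixel values into the range [0, 1]."""
    return image.astype(np.float32) / 255.0


def augment(image, rng):
    """Random horizontal flip plus a random multiple-of-90-degree rotation
    (a simplification; arbitrary angles and zoom need an image library)."""
    if rng.random() < 0.5:
        image = image[:, ::-1]
    return np.rot90(image, k=rng.integers(0, 4), axes=(0, 1))


def preprocess(image, rng=None):
    """Resize, normalise, and optionally augment (training only)."""
    out = normalise(resize_nearest(image))
    return augment(out, rng) if rng is not None else out
```

Augmentation is applied only when a random generator is passed, reflecting the usual practice of augmenting training images but not validation images.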

Machine Learning Models

In the evaluation process, several machine learning models were employed to assess their performance in classifying the Birds vs. Drone Dataset. The following models were used.(3,29,30,31)

Logistic Regression

Logistic Regression is a statistical model commonly used for binary classification tasks. It estimates probabilities using the logistic function, an S-shaped curve that maps any real-valued number into a value between 0 and 1. Logistic regression predicts the probability that a given input belongs to a particular category. It is parametric, involving a set of weights learned from the training data and then used to make predictions. The method applies the logistic transformation to a linear combination of the features, ensuring that the output remains within the interval [0, 1]. This model is highly interpretable but may perform poorly when the decision boundary is nonlinear unless feature engineering is applied.(1)
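A minimal NumPy sketch of the prediction rule just described: a linear score passed through the logistic function and thresholded at one half. The weights are made up for illustration, and which class is coded as 1 (for example, drone) is an assumption.

```python
import numpy as np


def sigmoid(z):
    """Logistic function: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def predict_proba(X, w, b):
    """P(y = 1 | x) = sigmoid(w . x + b) for each row of X."""
    return sigmoid(X @ w + b)


def predict(X, w, b, threshold=0.5):
    """Hard class labels obtained by thresholding the probability."""
    return (predict_proba(X, w, b) >= threshold).astype(int)


# Hypothetical weights for two features.
w = np.array([2.0, -1.0])
X = np.array([[1.0, 0.0], [0.0, 3.0]])
print(predict_proba(X, w, 0.0), predict(X, w, 0.0))
```

Changing `threshold` trades recall against false positives, which is exactly the behaviour that the AUC metric summarises across all thresholds.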

Decision Tree

The Decision Tree is a non-parametric supervised learning method for classification and regression tasks. The model predicts the value of a target variable by learning simple decision rules inferred from the data features. A tree is constructed by recursively partitioning the data into subsets, corresponding to a branching of decisions. Each internal node in the tree represents a test on a feature, each branch represents a decision rule, and each leaf node represents a predicted outcome. This makes decision trees easy to understand and interpret; however, they are prone to overfitting, especially with complex datasets. Pruning, setting the minimum number of samples required at a leaf node, or setting the maximum depth of the tree are common approaches to prevent overfitting.(30)
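How a tree chooses a split can be illustrated with Gini impurity, one common splitting criterion (the text does not say which criterion the authors' tree used). The hypothetical helper below scans candidate thresholds on a single feature and keeps the one with the lowest weighted impurity; a full tree would apply this to every feature and recurse until a stopping rule such as maximum depth is met.

```python
import numpy as np


def gini(labels):
    """Gini impurity 1 - sum_k p_k^2 of an array of class labels."""
    if len(labels) == 0:
        return 0.0
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p**2)


def best_threshold(feature, labels):
    """Return (threshold, weighted impurity) of the best binary split
    `feature <= threshold`, scanning midpoints between sorted unique values."""
    values = np.unique(feature)
    best = (None, gini(labels))  # no split at all
    for lo, hi in zip(values[:-1], values[1:]):
        t = (lo + hi) / 2.0
        left, right = labels[feature <= t], labels[feature > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (t, score)
    return best
```

On a feature where the two classes occupy separate value ranges, the chosen threshold falls between the ranges and the weighted impurity drops to zero.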

Random Forest

Random Forest is an ensemble learning method for classification and regression that operates by constructing many decision trees during training. For classification tasks, the output of the Random Forest is the class selected by most trees. It typically provides higher predictive accuracy than a single decision tree by combining the results of multiple decision trees built during training. Each tree in the forest is built from a sample drawn with replacement (i.e., a bootstrap sample) from the training set. Moreover, when splitting each node during a tree's construction, the best split is found either from all input features or from a random subset of features, leading to diversity among the trees, which drives the model's high accuracy.(4)
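The two sources of diversity described above, bootstrap sampling and random feature selection, together with majority voting, are sketched below. To stay short, it uses one-split "stumps" on a single randomly chosen feature instead of full decision trees, so it illustrates the bagging mechanics rather than a production Random Forest; all names are hypothetical.

```python
import numpy as np


def fit_stump(X, y, feature):
    """Crude weak learner: one split on `feature`, placed midway between
    the two class means (a stand-in for a full decision tree)."""
    mean0 = X[y == 0, feature].mean()
    mean1 = X[y == 1, feature].mean()
    return feature, (mean0 + mean1) / 2.0, mean1 > mean0


def fit_forest(X, y, n_trees=25, seed=0):
    """Bagging: each stump sees a bootstrap sample and one random feature."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    forest = []
    while len(forest) < n_trees:
        idx = rng.integers(0, n, size=n)   # draw n rows with replacement
        if len(np.unique(y[idx])) < 2:
            continue                        # skip one-class bootstrap samples
        feature = rng.integers(0, d)        # random feature subset of size 1
        forest.append(fit_stump(X[idx], y[idx], feature))
    return forest


def predict_forest(forest, X):
    """Majority vote over all stumps (ties go to class 1)."""
    votes = np.array([
        np.where(X[:, f] > t, int(one_above), int(not one_above))
        for f, t, one_above in forest
    ])
    return (votes.mean(axis=0) >= 0.5).astype(int)
```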

Gradient Boosting

Gradient Boosting is a machine learning technique for regression and classification problems that produces a prediction model in the form of an ensemble of weak prediction models, typically decision trees. Like other boosting methods, it builds the model in a stage-wise fashion, but it generalizes them by allowing optimization of an arbitrary differentiable loss function. At each stage, a tree is fitted to the negative gradient of the given loss function, which is akin to performing gradient descent in function space. This technique is highly effective for complex datasets with nonlinear patterns but can be sensitive to noisy data and prone to overfitting if not appropriately tuned.(4,30)
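For squared-error loss, the negative gradient is simply the residual, which makes the stage-wise procedure easy to see. The sketch below assumes a one-dimensional regression problem, regression stumps as weak learners, and a shrinkage (learning rate) of 0.1; classification with log-loss follows the same pattern with a different gradient. Function names are hypothetical.

```python
import numpy as np


def fit_regression_stump(x, r):
    """Best single split on 1-D input x for targets r (least squares).
    Needs at least two distinct x values."""
    best = None
    for t in np.unique(x)[:-1]:
        left, right = r[x <= t], r[x > t]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or sse < best[0]:
            best = (sse, t, left.mean(), right.mean())
    _, t, left_value, right_value = best
    return lambda z: np.where(z <= t, left_value, right_value)


def gradient_boost(x, y, n_stages=50, learning_rate=0.1):
    """Stage-wise boosting for L = (y - F)^2 / 2, whose negative gradient
    with respect to the current prediction F is the residual y - F."""
    f0 = y.mean()
    F = np.full_like(y, f0, dtype=float)
    stages = []
    for _ in range(n_stages):
        residual = y - F                       # negative gradient
        h = fit_regression_stump(x, residual)  # weak learner fitted to it
        F = F + learning_rate * h(x)
        stages.append(h)
    return lambda z: f0 + learning_rate * sum(stage(z) for stage in stages)
```

The small learning rate means each stage corrects only part of the remaining error, which is the main lever (together with the number of stages) for controlling the overfitting mentioned above.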

Neural Networks

Neural networks are a subset of machine learning and form the backbone of deep learning algorithms. Their structure is inspired by the human brain, consisting of layers of interconnected nodes, or neurons. Each neuron in one layer is connected to neurons in the next layer, and the strengths of these connections, the weights, are adjusted during training. Neural networks are particularly good at capturing nonlinear relationships in data through multiple layers of many neurons. They are widely used in tasks such as image recognition, speech recognition, and natural language processing. However, they require large amounts of data, significant computational power, and expertise to tune parameters such as the network architecture and learning rate.(28)
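The layered structure can be illustrated with a forward pass through a small fully connected network. The layer sizes, He-style random initialisation, ReLU hidden activations and softmax output below are assumptions for illustration only; the section does not specify the authors' architecture, and training by backpropagation is omitted.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def init_mlp(layer_sizes, seed=0):
    """Random weights and zero biases for each pair of consecutive layers."""
    rng = np.random.default_rng(seed)
    return [
        (rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out))
        for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
    ]


def forward(params, X):
    """Hidden layers: affine map followed by ReLU. Output layer: softmax
    over the classes (e.g. birds vs. drones)."""
    h = X
    for W, b in params[:-1]:
        h = relu(h @ W + b)
    W, b = params[-1]
    logits = h @ W + b
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)


# Hypothetical sizes: 16 input features, one hidden layer of 32, 2 classes.
params = init_mlp([16, 32, 2])
print(forward(params, np.ones((4, 16))))
```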

Stochastic Gradient Descent (SGD)

Stochastic Gradient Descent (SGD) is a simple yet efficient approach to fitting linear classifiers and regressors under convex loss functions, such as those of (linear) Support Vector Machines and Logistic Regression. Unlike conventional (batch) gradient descent, which computes the gradient over the entire dataset (computationally expensive), SGD estimates the gradient from a single sample or a small batch of samples, making it much faster for large datasets. It is called "stochastic" because the samples are selected randomly. One downside of SGD is that it can be less stable than other methods; however, with proper tuning of the learning rate and techniques such as learning rate schedules and momentum, SGD can converge quickly and effectively.(3)
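A minimal mini-batch SGD loop for logistic regression, assuming the mean log-loss as the objective and a default batch size of 32 (this mirrors the batch size in the Training section, although that setting was reported for the neural network). Each update uses the gradient from one random batch rather than the whole dataset; the function name `sgd_logistic` is hypothetical.

```python
import numpy as np


def sgd_logistic(X, y, lr=0.1, batch_size=32, epochs=20, seed=0):
    """Mini-batch SGD on the mean log-loss of logistic regression."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        order = rng.permutation(n)              # random order: the "stochastic" part
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            p = 1.0 / (1.0 + np.exp(-(X[idx] @ w + b)))
            err = p - y[idx]                     # d(log-loss)/d(logit)
            w -= lr * X[idx].T @ err / len(idx)
            b -= lr * err.mean()
    return w, b
```

Reshuffling every epoch ensures each sample is visited once per epoch while the batch composition still varies randomly.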

Research Design

The study employs the "Birds vs. Drone Dataset," which is publicly available on Kaggle and was contributed by Harsh Walia. This dataset is instrumental in the authors' exploration of machine-learning-based aerial discrimination between birds and drones. It is organized into two separate folders containing images labeled as birds and drones, respectively.

The bird images were obtained through a comprehensive web-scraping process designed to capture a broad spectrum of bird species in diverse flight poses and environments. This collection aims to mirror the natural variability encountered in real-world settings. The drone images, on the other hand, were sourced from an existing dataset and include a variety of drone models, each pictured against a natural sky background. This helps ensure that the dataset reflects realistic scenarios in which drones are likely to be encountered in flight.(32)

Each folder contains images of its class, providing the visual data needed to train machine learning models; the cross-validated evaluation reported in Table 4 covers 580 images (286 birds and 294 drones). The variety and quality of the images are paramount to developing a robust classifier that can reliably differentiate between these classes under typical operational conditions.(28,29,31)

Training

Configuration: the model was trained using a batch size of 32 and a learning rate of 0,001, which was adjusted by a learning rate scheduler that reduced it when the validation loss plateaued.

Environment: training was conducted on a GPU-enabled system to speed up computation.

Validation Split: the dataset was divided into training (80 %) and validation (20 %) sets to monitor and prevent overfitting.
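The split and the plateau-based schedule can be sketched in plain Python and NumPy. The stratified split keeps the bird/drone ratio in both sets (stratification is an assumption here; the text only gives the 80/20 proportions), and the scheduler's `factor` and `patience` are assumed values, since only the initial learning rate of 0,001 is reported. In Keras, equivalent behaviour is usually obtained with `tf.keras.callbacks.ReduceLROnPlateau(monitor="val_loss", ...)`; the class below is a hypothetical stand-in.

```python
import numpy as np


def stratified_split(labels, val_fraction=0.2, seed=0):
    """Train/validation indices that preserve each class's proportion."""
    rng = np.random.default_rng(seed)
    train, val = [], []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_val = int(round(val_fraction * len(idx)))
        val.extend(idx[:n_val])
        train.extend(idx[n_val:])
    return np.array(train), np.array(val)


class ReduceOnPlateau:
    """Multiply the learning rate by `factor` when the validation loss has
    not improved for `patience` consecutive epochs (assumed defaults)."""

    def __init__(self, lr=0.001, factor=0.5, patience=3, min_lr=1e-6):
        self.lr, self.factor, self.patience, self.min_lr = lr, factor, patience, min_lr
        self.best, self.wait = float("inf"), 0

    def step(self, val_loss):
        if val_loss < self.best:
            self.best, self.wait = val_loss, 0
        else:
            self.wait += 1
            if self.wait >= self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.wait = 0
        return self.lr


# Class counts taken from the row totals of Table 4 (286 birds, 294 drones).
labels = np.array([0] * 286 + [1] * 294)
train_idx, val_idx = stratified_split(labels)
print(len(train_idx), len(val_idx))
```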

Evaluation Metrics

To assess the performance of the authors' model, accuracy, precision, and recall metrics were computed. These metrics help in understanding how effectively the model classifies images as birds or drones.

AUC (Area Under the Curve)

AUC refers to a model's ability to distinguish between the positive and negative classes across all classification thresholds. The importance of the metric lies in its ability to provide a comprehensive, threshold-independent assessment of a model's performance. This is particularly important in medical diagnostics and other binary classification tasks, where the choice of decision threshold can significantly affect results.
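One way to compute AUC without choosing any threshold uses its probabilistic interpretation: the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as one half. The sketch below compares all positive/negative pairs, which is fine for small evaluation sets; it is an illustration with a hypothetical function name, not the tool used to produce the tables.

```python
import numpy as np


def auc_rank(y_true, scores):
    """AUC as P(score of random positive > score of random negative),
    counting ties as 1/2, evaluated over all positive/negative pairs."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    diff = pos[:, None] - neg[None, :]
    return ((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size


print(auc_rank([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

Because only the ordering of scores matters, any monotone rescaling of a model's outputs leaves its AUC unchanged.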

CA (Classification Accuracy)

Classification Accuracy measures the proportion of all predictions, positive and negative, that a model gets right. This metric is most informative for datasets with balanced class distributions, providing a quick evaluation of model effectiveness in areas such as educational testing and customer satisfaction analysis.

F1 Score

The F1 Score, the harmonic mean of precision and recall, is critical in situations where both false positives and false negatives can have serious consequences, such as in legal and financial fields. Its importance lies in providing a more accurate assessment of a model's effectiveness on imbalanced datasets, where errors can have significant consequences.

Recall

Recall is critical in situations where failing to detect a positive instance (a false negative) is not tolerable, as in fraud detection or disease screening. It prioritizes identifying as many positive instances as possible, even at the cost of more false positives (negative instances incorrectly classified as positive).

Together, these metrics form a thorough evaluation framework for machine learning models and support well-informed decisions in specific applications by emphasizing different aspects of model performance in relation to the specific costs of prediction errors.
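The threshold-dependent metrics follow directly from the four confusion-matrix counts. A minimal sketch, treating one class (for example, Birds) as positive; the function name is hypothetical.

```python
def binary_metrics(tp, fn, fp, tn):
    """Classification accuracy (CA), precision, recall and F1 for one
    positive class, from true/false positive and negative counts."""
    total = tp + fn + fp + tn
    precision = tp / (tp + fp)   # correctness of positive predictions
    recall = tp / (tp + fn)      # share of actual positives that are found
    return {
        "CA": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "F1": 2 * precision * recall / (precision + recall),
    }


print(binary_metrics(tp=8, fn=2, fp=1, tn=9))
```

Swapping which class is called positive swaps `fn` with `fp` and `tp` with `tn`, so recall and F1 change while CA does not; this is why per-class tables can differ in recall and F1 but share the same CA.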

Implementation

Software and Libraries: the model was implemented in Python, using TensorFlow and Keras to build and train the neural network. NumPy and Matplotlib were used for data manipulation and visualization.

Execution: the script was executed iteratively, refining parameters and configurations based on the observed performance on the validation set.

Figure 1. Process Flow Diagram

RESULTS

Test and Score Analyses

Test and Score analysis is an evaluation framework in machine learning that gauges models' efficacy by splitting data into training and testing sets: models are trained on the former, and their predictions are assessed on the latter. It employs metrics such as accuracy (the fraction of predictions a model gets right), precision (the correctness of positive predictions), recall (the model's ability to detect actual positives), and the F1 score (the harmonic mean of precision and recall), complemented by AUC (the model's performance across all thresholds). This method is crucial for verifying a model's predictive power on new data, enabling the selection of the most suitable model based on its expected real-world performance.

Test and Score Analyses for target class Birds

Table 1 outlines the performance of six machine learning models evaluated with stratified 10-fold cross-validation, with "Birds" as the target class. The Neural Network model outperforms the others with the highest AUC (0,988), indicating excellent overall predictive ability, complemented by the highest classification accuracy (0,955), F1 score (0,954), and recall (0,944). Gradient Boosting and Logistic Regression are also high achievers, with AUCs of 0,984 and 0,982, respectively, along with strong accuracy and recall; Gradient Boosting has the second-highest recall at 0,937. The Random Forest model shows solid performance with notable recall (0,927). The Stochastic Gradient Descent model presents promising results, although slightly lower than the models above. In contrast, the Tree model trails with the weakest metrics across the board, indicating that it may be less suited to this classification task than its counterparts. Overall, the Neural Network is the leading model, with Gradient Boosting and Logistic Regression as strong contenders for detecting the class "Birds."

Table 1. The test and score analyses for the models Random Forest, Tree, Gradient Boosting, Logistic Regression, Neural Network, and Stochastic Gradient Descent (target class: Birds)

| Model | AUC | CA | F1 | Recall |
|---|---|---|---|---|
| Random Forest | 0,968 | 0,909 | 0,909 | 0,927 |
| Tree | 0,853 | 0,883 | 0,881 | 0,878 |
| Gradient Boosting | 0,984 | 0,934 | 0,934 | 0,937 |
| Logistic Regression | 0,982 | 0,938 | 0,936 | 0,927 |
| Neural Network | 0,988 | 0,955 | 0,954 | 0,944 |
| Stochastic Gradient Descent | 0,933 | 0,933 | 0,931 | 0,923 |

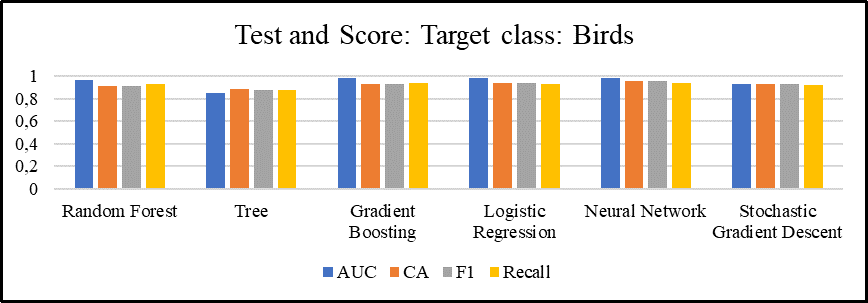

Figure 2 is a bar chart visualizing the performance metrics—AUC, CA (Classification Accuracy), F1 Score, and Recall—for six different machine learning models on classifying the target class "Birds." The Neural Network model exhibits the highest bars across all metrics, indicating it has the best overall performance among the models evaluated. Gradient Boosting and Logistic Regression also demonstrate high metrics, slightly outperformed by the Neural Network, particularly in AUC and F1 scores. Random Forest and Stochastic Gradient Descent display a strong but not top-tier performance, with their metrics being generally comparable and above the baseline. The Tree model has the lowest bars for each metric, signifying it is the least effective model for this task. The chart illustrates that while most models perform well, the Neural Network model is the most reliable for accurately classifying the target class.

Figure 2. Test and Score for Target Class Birds

Test and Score Analyses for target class Drones

Table 2 shows performance metrics for the machine learning models evaluated with stratified 10-fold cross-validation, with "Drones" as the target class. The Neural Network model leads with the highest AUC of 0,988, indicating excellent overall predictive ability, the best classification accuracy (CA) at 0,955, and the highest F1 score (0,956) and recall (0,966), suggesting superior precision and ability to identify relevant instances. Logistic Regression follows closely with an AUC of 0,982 and the second-highest recall at 0,949, indicating strong performance, especially in identifying actual positive cases. Gradient Boosting also shows strong performance across all metrics, particularly with an AUC of 0,984. The Stochastic Gradient Descent model is consistent across all metrics, with a high recall of 0,942. While slightly less effective than the top performers, Random Forest still shows high reliability with an AUC of 0,968. The Tree model is the least effective, with the lowest AUC of 0,853, although its recall of 0,888 is close to that of Random Forest (0,891). The table shows that while all models perform well, the Neural Network is the most effective for classifying the target class "Drones."

Table 2. Test and score analyses for the models Random Forest, Tree, Gradient Boosting, Logistic Regression, Neural Network, and Stochastic Gradient Descent (target class: Drones)

| Model | AUC | CA | F1 | Recall |
|---|---|---|---|---|
| Random Forest | 0,968 | 0,909 | 0,908 | 0,891 |
| Tree | 0,853 | 0,883 | 0,885 | 0,888 |
| Gradient Boosting | 0,984 | 0,934 | 0,935 | 0,932 |
| Logistic Regression | 0,982 | 0,938 | 0,939 | 0,949 |
| Neural Network | 0,988 | 0,955 | 0,956 | 0,966 |
| Stochastic Gradient Descent | 0,933 | 0,933 | 0,934 | 0,942 |

Figure 3 is a bar chart depicting the performance metrics of six machine learning models in classifying the target class "Drones." The metrics displayed are AUC (Area Under the Curve), CA (Classification Accuracy), F1 Score, and Recall. The Neural Network model shows the highest values across all metrics, indicating that it performs best on this classification task. Gradient Boosting and Logistic Regression also perform well; Logistic Regression in particular achieves high recall (0,949), which measures the model's ability to correctly identify actual positives. The Stochastic Gradient Descent model shows slightly lower yet consistent performance across all metrics.

In contrast, the Tree model has the lowest scores, suggesting it is the least effective at classifying drones. Random Forest has respectable scores but does not match the top-performing models. The consistency across the different measures suggests that the models are reliable, with the Neural Network being the most proficient for the task at hand.

Figure 3. Test and Score Analyses for Target Class Drone

Test and Score Analyses averaged over classes

Table 3 presents performance metrics for six machine learning models averaged over the two classes (Birds and Drones), using stratified 10-fold cross-validation. The Neural Network model outshines the others with the highest AUC (0,988), indicating superior prediction capability across all thresholds, and matches this with the highest classification accuracy (CA), F1, and recall (all at 0,955), illustrating an excellent balance between precision and the ability to identify true positives correctly. Logistic Regression and Gradient Boosting also score high across the board, particularly in AUC, suggesting that these models are also very effective classifiers. While not as high-scoring as these models, Random Forest still shows robust performance, with an AUC of 0,966 and equal CA, F1, and recall scores of 0,909. Stochastic Gradient Descent displays consistent and competent performance, with all metrics at 0,933. The Tree model trails behind, with the lowest AUC of 0,858 and matching CA, F1, and recall scores of 0,883, indicating that it is less adept at this classification task than the other models. The table highlights the Neural Network as the leading model, with Logistic Regression and Gradient Boosting as solid alternatives.

Table 3. Test and score analyses for the models Random Forest, Tree, Gradient Boosting, Logistic Regression, Neural Network, and Stochastic Gradient Descent (average over classes)

| Model | AUC | CA | F1 | Recall |
|---|---|---|---|---|
| Random Forest | 0,966 | 0,909 | 0,909 | 0,909 |
| Tree | 0,858 | 0,883 | 0,883 | 0,883 |
| Gradient Boosting | 0,984 | 0,934 | 0,934 | 0,934 |
| Logistic Regression | 0,981 | 0,938 | 0,938 | 0,938 |
| Neural Network | 0,988 | 0,955 | 0,955 | 0,955 |
| Stochastic Gradient Descent | 0,933 | 0,933 | 0,933 | 0,933 |

Figure 4 is a bar chart that compares four performance metrics, namely AUC, classification accuracy (CA), F1 score, and recall, for six machine learning models, with performance averaged over the classes. The Neural Network model stands out with the highest scores across all metrics, indicating excellent predictive accuracy and consistent classification performance. Gradient Boosting and Logistic Regression also show strong performance, particularly in recall, suggesting that they correctly identify instances across both classes. Random Forest shows strong but slightly lower overall performance, while the Stochastic Gradient Descent model achieves solid, uniform scores across the board. The Tree model lags in all metrics, indicating lower average classification performance than the other models. The chart illustrates that while every model demonstrates competency, the Neural Network model consistently outperforms the others in overall classification accuracy.

Figure 4. Test and Score Analyses for Target Average Over Classes

Confusion Matrix Analyses

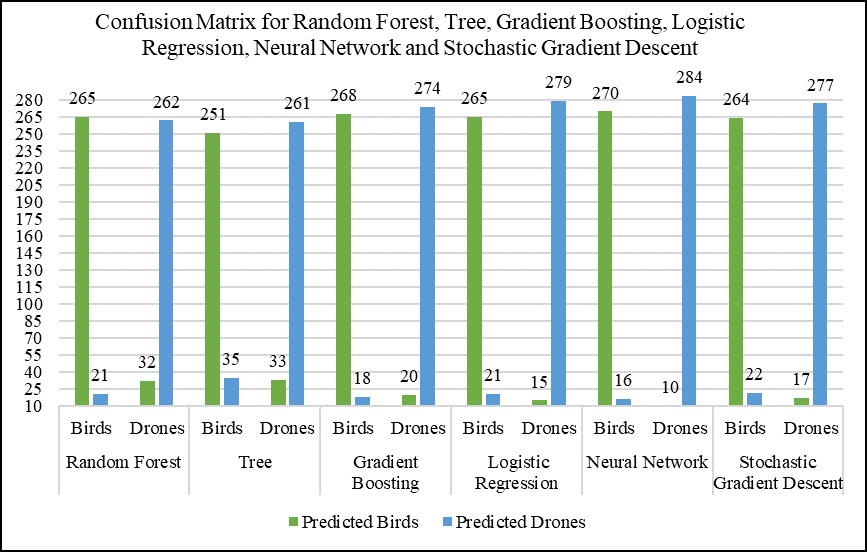

Confusion matrix analysis is a tool used in machine learning to evaluate the performance of classification models. It is a table with two dimensions, "Actual" and "Predicted," that allows the performance of an algorithm to be visualized. The matrix compares the actual target values with those predicted by the model, resulting in four outcomes: true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). True positives and true negatives are the observations the model predicts correctly, while false positives and false negatives are its errors. The confusion matrix is the foundation for deriving more specific metrics: accuracy, precision, recall, and the F1 score. Accuracy measures the proportion of all predictions that are correct, precision measures the correctness of positive predictions, recall measures the model's ability to find positive instances, and the F1 score balances precision and recall. These metrics give a more nuanced understanding of model performance than accuracy alone, especially for datasets with imbalanced class distributions. The results are shown in Table 4 and Figure 5.

Table 4 presents the confusion matrices for the six machine learning models, showing correct and incorrect predictions for the two classes, 'Birds' and 'Drones.' Treating 'Birds' as the positive class, the diagonal cells are the true positives (TP, birds predicted as birds) and true negatives (TN, drones predicted as drones), while the off-diagonal cells are the false negatives (FN, birds predicted as drones) and false positives (FP, drones predicted as birds). The Random Forest model correctly classifies 265 birds and 262 drones, with relatively few errors (21 and 32), suggesting a balanced classification capability. The Tree model shows more misclassifications (35 and 33), indicating lower performance. Gradient Boosting achieves strong results, with 268 and 274 correct predictions and only 18 birds misclassified as drones, the second-lowest count after the Neural Network. Logistic Regression correctly classifies 279 drones, the second-highest count, although it misclassifies slightly more birds as drones (21) than Gradient Boosting does. The Neural Network has the most correct predictions for both classes (270 and 284) and the fewest errors (16 and 10), indicating superior classification performance. Stochastic Gradient Descent also performs well (264 and 277 correct) but not as strongly as the Neural Network. Overall, the Neural Network model is the most accurate classifier for both 'Birds' and 'Drones.'

Table 4. Confusion matrix analyses for the models random forest, tree, gradient boosting, logistic regression, neural network, and stochastic gradient descent (rows: actual class; columns: predicted class)

| Model | Actual | Predicted Birds | Predicted Drones | ∑ |
|---|---|---|---|---|
| Random Forest | Birds | 265 | 21 | 286 |
| | Drones | 32 | 262 | 294 |
| | ∑ | 297 | 283 | 580 |
| Tree | Birds | 251 | 35 | 286 |
| | Drones | 33 | 261 | 294 |
| | ∑ | 284 | 296 | 580 |
| Gradient Boosting | Birds | 268 | 18 | 286 |
| | Drones | 20 | 274 | 294 |
| | ∑ | 288 | 292 | 580 |
| Logistic Regression | Birds | 265 | 21 | 286 |
| | Drones | 15 | 279 | 294 |
| | ∑ | 280 | 300 | 580 |
| Neural Network | Birds | 270 | 16 | 286 |
| | Drones | 10 | 284 | 294 |
| | ∑ | 280 | 300 | 580 |
| Stochastic Gradient Descent | Birds | 264 | 22 | 286 |
| | Drones | 17 | 277 | 294 |
| | ∑ | 281 | 299 | 580 |
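As a consistency check, the per-class recall and F1 values in Tables 1 and 2 and the accuracy in Table 3 can be recomputed from the counts in Table 4. The sketch below does so, taking Birds as the positive class for the Birds scores and Drones for the Drones scores. The support-weighted average is an assumption about how the "average over classes" scores were formed; it is consistent with, for example, the Neural Network's 0,955 in Table 3. Names such as `TABLE_4` and `per_class_scores` are hypothetical.

```python
# Confusion matrices from Table 4: rows = actual (Birds, Drones),
# columns = predicted (Birds, Drones).
TABLE_4 = {
    "Random Forest": ((265, 21), (32, 262)),
    "Tree": ((251, 35), (33, 261)),
    "Gradient Boosting": ((268, 18), (20, 274)),
    "Logistic Regression": ((265, 21), (15, 279)),
    "Neural Network": ((270, 16), (10, 284)),
    "Stochastic Gradient Descent": ((264, 22), (17, 277)),
}


def per_class_scores(matrix):
    """Recall and F1 per class, overall CA, and support-weighted averages."""
    (bb, bd), (db, dd) = matrix
    total = bb + bd + db + dd
    scores = {}
    # (class, TP, FN, FP) with that class treated as the positive class.
    for name, tp, fn, fp in (("Birds", bb, bd, db), ("Drones", dd, db, bd)):
        scores[name] = {
            "recall": tp / (tp + fn),
            "F1": 2 * tp / (2 * tp + fp + fn),
            "support": tp + fn,
        }
    scores["CA"] = (bb + dd) / total
    for metric in ("recall", "F1"):
        scores["avg_" + metric] = sum(
            scores[c][metric] * scores[c]["support"] for c in ("Birds", "Drones")
        ) / total
    return scores


for model, matrix in TABLE_4.items():
    s = per_class_scores(matrix)
    print(f"{model:28s} CA={s['CA']:.3f} "
          f"recall(B/D)={s['Birds']['recall']:.3f}/{s['Drones']['recall']:.3f} "
          f"F1(B/D)={s['Birds']['F1']:.3f}/{s['Drones']['F1']:.3f}")
```

Note that the support-weighted average recall always equals CA, which explains why the CA and recall columns coincide for every model in Table 3.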

Figure 5 is a bar chart illustrating the confusion matrices of six machine learning models, depicting true and false predictions for 'Birds' and 'Drones.' The height of the bars represents the number of predictions. For 'Birds,' the blue bars represent the true positives (correctly predicted birds), and the small orange segments at the base represent the false negatives (birds incorrectly predicted as drones). For 'Drones,' the blue segments represent the false positives (drones incorrectly predicted as birds), and the orange bars represent true negatives (correctly predicted drones). The Neural Network model shows an impressive performance with the highest true positives and true negatives and the lowest false negatives and false positives, indicating it has the best predictive accuracy. The Tree model has visibly more false predictions than the other models, suggesting it is less accurate. Random Forest, Gradient Boosting, Logistic Regression, and Stochastic Gradient Descent exhibit a balanced performance with relatively low false predictions. Overall, the Neural Network stands out for its precision in correctly classifying both 'Birds' and 'Drones.'

Figure 5. Confusion Matrices for Random Forest, Tree, Gradient Boosting, Logistic Regression, Neural Network, and Stochastic Gradient Descent for the Birds and Drones Classes

ROC Analyses

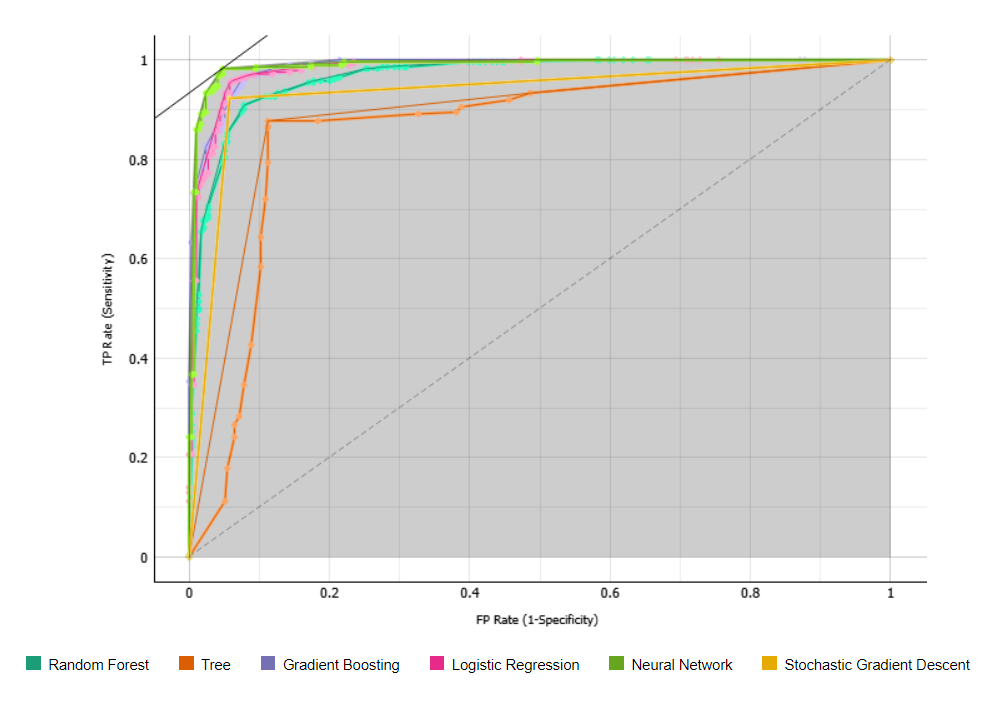

Receiver operating characteristic (ROC) analysis is a statistical technique used to examine the diagnostic ability of binary classifiers. An ROC curve is a graphical representation that summarizes a classifier's performance independently of class distribution or error costs by plotting the True Positive Rate (TPR) against the False Positive Rate (FPR) at various threshold settings. The area under the ROC curve, referred to as the AUC, serves as a single metric summarizing the classifier's overall ability to discriminate between the two classes, with a higher AUC indicating better performance. ROC analysis is especially valuable because it captures model performance across all classification thresholds, providing a measure that is independent of any particular decision criterion. This analytic tool is essential for the comparative assessment of classifiers, clearly visualizing their strengths and weaknesses under diverse operating conditions, and it is a cornerstone of machine learning for developing models with well-chosen decision thresholds.
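The ROC curve itself can be traced by sweeping the decision threshold from the highest score downward and recording (FPR, TPR) at each step; the trapezoidal area under those points gives the AUC. A minimal NumPy sketch with hypothetical function names, assuming class 1 is the positive class:

```python
import numpy as np


def roc_curve_points(y_true, scores):
    """(FPR, TPR) arrays obtained by lowering the threshold through every
    distinct score, starting from the point (0, 0)."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    n_pos = (y_true == 1).sum()
    n_neg = (y_true == 0).sum()
    fpr, tpr = [0.0], [0.0]
    for t in np.unique(scores)[::-1]:          # highest threshold first
        predicted_pos = scores >= t
        tpr.append((predicted_pos & (y_true == 1)).sum() / n_pos)
        fpr.append((predicted_pos & (y_true == 0)).sum() / n_neg)
    return np.array(fpr), np.array(tpr)


def trapezoid_auc(fpr, tpr):
    """Area under the piecewise-linear ROC curve (trapezoidal rule)."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))


fpr, tpr = roc_curve_points([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(list(zip(fpr, tpr)), trapezoid_auc(fpr, tpr))
```

A curve that hugs the top-left corner yields an area close to 1, while a curve along the diagonal yields about 0,5, matching the visual reading of Figures 6 and 7.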

Figure 6 shows an ROC (Receiver Operating Characteristic) curve analysis in which six machine learning models (Random Forest, Tree, Gradient Boosting, Logistic Regression, Neural Network, and Stochastic Gradient Descent) are evaluated on the classification task. The ROC curves, which plot the True Positive Rate (TPR) against the False Positive Rate (FPR) for each model, reveal that the Neural Network model outperforms the rest, closely followed by the Gradient Boosting and Logistic Regression models, which also show strong predictive capabilities. The Random Forest and Stochastic Gradient Descent models perform well but do not match the top-performing models. Conversely, the Tree model, whose curve is farthest from the ideal top-left corner, ranks lowest, suggesting lower classification effectiveness. Overall, the curves indicate that the Neural Network model, with its superior curve, has the largest area under the curve (AUC = 0,988 in Table 1), reflecting its strong ability to discriminate between the target classes.

Figure 6. ROC Analyses for Random Forest, Tree, Gradient Boosting, Logistic Regression, Neural Network, and Stochastic Gradient Descent for the Birds Class
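Reading a curve by its closeness to the top-left corner also suggests a way to choose a single operating threshold for deployment. The following minimal sketch assumes a detector that outputs one score per object and predicts the positive class when the score is at or above the threshold. It then picks the threshold whose (FPR, TPR) point lies at the smallest Euclidean distance from (0, 1). The function names and the ten-object dataset are hypothetical, and this selection rule is one common convention rather than a procedure described in this study.

```python
import math
from typing import List, Tuple


def roc_with_thresholds(y_true: List[int], scores: List[float]) -> List[Tuple[float, float, float]]:
    """Return (threshold, FPR, TPR) for every distinct score used as a threshold,
    where an example is predicted positive when its score >= threshold."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    rows = []
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, y_true) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, y_true) if s >= t and y == 0)
        rows.append((t, fp / neg, tp / pos))
    return rows


def closest_to_top_left(y_true: List[int], scores: List[float]) -> Tuple[float, float, float]:
    """Pick the operating point with the smallest distance to (FPR, TPR) = (0, 1)."""
    return min(roc_with_thresholds(y_true, scores),
               key=lambda r: math.hypot(r[1], 1.0 - r[2]))


labels = [1, 1, 0, 1, 1, 1, 0, 0, 0, 0]  # 1 = positive class (hypothetical data)
scores = [0.95, 0.9, 0.85, 0.8, 0.7, 0.65, 0.5, 0.4, 0.3, 0.1]
print(closest_to_top_left(labels, scores))  # (0.65, 0.2, 1.0)
```

In this toy example, a threshold of 0.65 recovers every positive object at the cost of one false positive in five negatives. In an operational setting, the threshold would instead be tuned to the relative cost of a missed UAV versus a false alarm.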

Figure 7 shows the corresponding ROC curves for the Drones class, again plotting the TPR against the FPR for the same six models (Random Forest, Tree, Gradient Boosting, Logistic Regression, Neural Network, and Stochastic Gradient Descent). The Neural Network's curve is closest to the top-left corner, indicating a higher TPR at a lower FPR, which typically signifies superior performance. Gradient Boosting and Logistic Regression also perform favorably, with curves near the top-left corner, indicating high effectiveness in identifying the positive class. The Random Forest model performs solidly, although slightly below these three models. The Stochastic Gradient Descent curve lies further from the top-left corner but still well above that of the Tree model. The Tree model performs least favorably, with its curve closest to the diagonal line that represents random chance. This visualization suggests that the Neural Network is likely the most effective of the evaluated classifiers, particularly in its ability to identify the positive class with few false positives.

Figure 7. ROC Analyses for Random Forest, Tree, Gradient Boosting, Logistic Regression, Neural Network, and Stochastic Gradient Descent for the Drones Class
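Figures 6 and 7 report one-vs-rest ROC curves, first treating Birds and then Drones as the positive class. A general property of two-class ROC analysis helps when comparing such pairs of figures. Suppose a model's bird score is exactly one minus its drone score. Then swapping the positive class reflects the curve across the line TPR = 1 - FPR, and the AUC is unchanged. The reason is that the AUC equals the probability that a randomly chosen positive example outranks a randomly chosen negative one. The following sketch computes the AUC in that rank-based form under the stated complementary-score assumption. The data are hypothetical, and the sketch makes no claim about the specific curves in this study.

```python
from itertools import product
from typing import List


def auc_rank(labels: List[str], scores: List[float], positive: str) -> float:
    """AUC as the probability that a randomly chosen example of the `positive`
    class scores higher than a randomly chosen example of the other class
    (ties count one half). This equals the area under the ROC curve."""
    pos = [s for s, y in zip(scores, labels) if y == positive]
    neg = [s for s, y in zip(scores, labels) if y != positive]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p, n in product(pos, neg))
    return wins / (len(pos) * len(neg))


# Hypothetical model output: P(drone) for six objects; P(bird) is its complement.
labels = ["bird", "bird", "drone", "bird", "drone", "drone"]
p_drone = [0.1, 0.3, 0.8, 0.4, 0.35, 0.9]
p_bird = [1.0 - p for p in p_drone]

auc_drones = auc_rank(labels, p_drone, "drone")  # one-vs-rest view for the Drones class
auc_birds = auc_rank(labels, p_bird, "bird")     # one-vs-rest view for the Birds class
print(auc_drones, auc_birds)                     # both equal 8/9
```

If a model's per-class scores are not complementary (for example, scores calibrated separately per class), the two one-vs-rest AUCs can differ. In that case, reporting both curves carries additional information.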

DISCUSSION

In the evolving landscape of military surveillance, the proliferation of Unmanned Aerial Vehicles (UAVs) presents both advanced operational opportunities and new security challenges. This study developed and compared machine learning models for real-time UAV recognition, particularly focusing on distinguishing UAVs from similarly sized non-threatening objects like birds. Among the models assessed, Neural Networks demonstrated superior performance in terms of precision, recall, and computational efficiency, making them well-suited for military applications where accuracy is crucial. Their high AUC values align with findings from other studies that highlight the efficacy of Neural Networks in scenarios requiring rapid, precise detection. For instance, prior research on UAV detection also supports the reliability of Neural Networks in handling complex classification tasks, suggesting they outperform traditional methods in distinguishing between UAVs and benign entities.

At the same time, Gradient Boosting and Logistic Regression also showed strong predictive capability, especially in terms of recall. This mirrors previous studies that emphasize the robustness of these models in environments where balancing speed and precision is essential. Although not as accurate as Neural Networks, these models provide viable alternatives in resource-constrained scenarios where computational efficiency is critical. A multi-tiered detection system that combines Random Forest for initial filtering with Stochastic Gradient Descent for finer-grained classification aligns with other research advocating ensemble approaches to increase detection reliability.
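One way to read the multi-tiered design mentioned above is as a confidence-gated cascade. In this reading, a first-tier model (Random Forest in the text) labels the objects it scores confidently and defers ambiguous ones to a second-tier model (Stochastic Gradient Descent in the text). The following sketch assumes that each tier exposes a drone score in [0, 1]. The function name `cascade_predict`, the `low`/`high` gate values, and the toy scorers are all hypothetical and are not taken from this study. The toy scorers simply read a precomputed score from the input, so the routing logic can be shown without a trained model.

```python
from typing import Callable, Sequence, Tuple

# Assumed interface: each tier maps a feature vector to a drone score in [0, 1].
Scorer = Callable[[Sequence[float]], float]


def cascade_predict(
    x: Sequence[float],
    first_tier: Scorer,
    second_tier: Scorer,
    low: float = 0.2,
    high: float = 0.8,
    threshold: float = 0.5,
) -> Tuple[str, int]:
    """Return (label, tier), where tier (1 or 2) is the tier that made the decision."""
    p = first_tier(x)
    if p >= high:  # first tier is confident the object is a drone
        return "drone", 1
    if p <= low:   # first tier is confident the object is a bird
        return "bird", 1
    # Ambiguous region: defer the object to the second tier.
    q = second_tier(x)
    return ("drone" if q >= threshold else "bird"), 2


# Hypothetical toy scorers for illustration only.
def toy_first(x: Sequence[float]) -> float:
    return x[0]


def toy_second(x: Sequence[float]) -> float:
    return x[1]


for obj in ([0.95, 0.10], [0.05, 0.90], [0.50, 0.70], [0.50, 0.30]):
    print(obj, cascade_predict(obj, toy_first, toy_second))
```

The gate values set the trade-off between how often the second tier is invoked and how many first-tier errors pass through uncorrected. Widening the band between `low` and `high` defers more objects to the second tier.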

In contrast, the Tree model’s underperformance is consistent with earlier studies that indicate decision trees struggle with complex, dynamic environments. This highlights the need for more sophisticated algorithms to handle the intricacies of UAV detection in real-time. The limitations of existing models, particularly in challenging environmental conditions and against stealth UAV designs, indicate areas where future research can expand. Ongoing model evolution is necessary to address the rapidly advancing UAV technologies, ensuring defense mechanisms remain effective against emerging threats.

By comparing the performance of these models with similar research, it becomes evident that Neural Networks hold significant promise for military surveillance applications, while a multi-model approach could further enhance UAV detection systems. This study underscores the importance of continuous improvement and adaptation of machine learning models to meet the evolving demands of UAV recognition in modern defense strategies.

CONCLUSIONS

The principal findings of this research are instrumental for enhancing contemporary military surveillance operations. The focus of this investigation was to assess the efficacy of diverse machine learning models under the operational requirements of real-time data processing and changing environmental conditions. The Neural Network model, with its more sophisticated computational architecture, emerged as the best performer. It achieved the highest accuracy in differentiating UAVs from natural entities such as birds, a capability that is imperative for strengthening airspace security.

The study's comprehensive evaluations revealed that Gradient Boosting and Logistic Regression models also exhibited strong predictive power. Nevertheless, the Neural Network consistently excelled across the key performance metrics, namely the Area Under the Curve (AUC), Classification Accuracy (CA), F1 Score, and Recall. This superior performance underpins the recommendation to integrate Neural Network-based systems into military defenses. Such integration is expected to increase the precision of threat assessment and the efficiency of defense resource allocation, thereby improving operational efficacy.
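Apart from AUC, the metrics named above can all be derived from a confusion matrix for the positive class. The following is a minimal sketch using the standard definitions. The function name and the counts are hypothetical and are not this study's results. Returning 0.0 when a denominator is zero is a convention chosen here for simplicity.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Compute CA, precision, recall and F1 for the positive class from confusion-matrix counts."""
    total = tp + fp + fn + tn
    ca = (tp + tn) / total                          # classification accuracy
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of predicted positives that are correct
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of actual positives found (= TPR)
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)           # harmonic mean of precision and recall
    return {"CA": ca, "Precision": precision, "Recall": recall, "F1": f1}


# Hypothetical counts for the drone class on 100 test objects.
print(classification_metrics(tp=45, fp=5, fn=10, tn=40))
```

Recall is the same quantity as the TPR on the vertical axis of an ROC curve. F1 reduces to 2TP / (2TP + FP + FN), so these toy counts give F1 = 90/105, roughly 0.857.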

However, the pathway to deploying these advanced models is not without obstacles. Recognizing the mercurial nature of UAV technology, with advancements in stealth capabilities and operational agility, the research underlines the imperative for continually refining these machine learning models. Ensuring that the models evolve in concert with the emerging designs of UAVs is critical for sustaining a robust defense posture.

The article's discourse concludes by advocating a steadfast commitment to advancing research in this domain, emphasizing the necessity for real-world operational testing and advocating for a perpetual state of model adaptation to confront new and unforeseen aerial threats. This research not only marks a significant milestone in military surveillance capabilities but also sets a precedent for future endeavors in securing global airspaces against the multifaceted threats posed by the unfettered proliferation of UAV technology.

BIBLIOGRAPHIC REFERENCES

1. Alqaraleh M, Alzboon MS, Al-Batah MS, Wahed MA, Abuashour A, Alsmadi FH. Harnessing Machine Learning for Quantifying Vesicoureteral Reflux: A Promising Approach for Objective Assessment. Int J Online Biomed Eng. 2024;20(11).

2. Abuashour A, Alzboon MS, MKA. Comparative Study of Classification Mechanisms of Machine Learning on Multiple Data Mining Tool Kits. Am J Biomed Sci Res. 2024;22(1):577–9.

3. Alzboon MS, Qawasmeh S, Alqaraleh M, Abuashour A, Bader AF, Al-Batah M. Machine Learning Classification Algorithms for Accurate Breast Cancer Diagnosis. In: 2023 3rd International Conference on Emerging Smart Technologies and Applications, eSmarTA 2023. 2023.

4. Alzboon MS, Al-Batah MS, Alqaraleh M, Abuashour A, Bader AFH. Early Diagnosis of Diabetes: A Comparison of Machine Learning Methods. Int J Online Biomed Eng. 2023;19(15):144–65.

5. Al Tal S, Al Salaimeh S, Ali Alomari S, Alqaraleh M. The modern hosting computing systems for small and medium businesses. Acad Entrep J. 2019;25(4):1–7.

6. Alomari SA, Alqaraleh M, Aljarrah E, Alzboon MS. Toward achieving self-resource discovery in distributed systems based on distributed quadtree. J Theor Appl Inf Technol. 2020;98(20):3088–99.

7. Alzboon MS, Aljarrah E, Alqaraleh M, Alomari SA. NodeXL Tool for Social Network Analysis. Turk J Comput Math Educ. 2021;12.

8. Yoon SW, Kim SB, Jung JH, Cha S Bin, Baek YS, Koo BT, et al. Efficient Classification of Birds and Drones Considering Real Observation Scenarios Using FMCW Radar. J Electromagn Eng Sci. 2021;21(4):270–81.

9. Mediavilla C, Nans L, Marez D, Parameswaran S. Detecting aerial objects: drones, birds, and helicopters. In: Security + Defence. 2021. p. 18.

10. Liu Y, Zhang J, Gao L, Zhu Y, Liu B, Zang X, et al. Employing Wing Morphing to Cooperate Aileron Deflection Improves the Rolling Agility of Drones. Adv Intell Syst. 2023;5(11).

11. Michez A, Broset S, Lejeune P. Ears in the sky: Potential of drones for the bioacoustic monitoring of birds and bats. Drones. 2021;5(1):1–19.

12. Mohamed TM, Alharbi IM. Efficient Drones-Birds Classification Using Transfer Learning. In: 2023 4th International Conference on Artificial Intelligence, Robotics and Control, AIRC 2023. 2023. p. 15–9.

13. Yoon SW, Kim SB, Baek YS, Koo BT, Choi IO, Park SH. A Study on the Efficient Classification of Multiple Drones and Birds By Using Micro-Doppler Signature. In: 2022 International Conference on Electronics, Information, and Communication, ICEIC 2022. 2022.

14. Rahman S, Robertson DA. Classification of drones and birds using convolutional neural networks applied to radar micro-Doppler spectrogram images. IET Radar, Sonar Navig. 2020;14(5):653–61.

15. Zhu N, Xi Z, Wu C, Zhong F, Qi R, Chen H, et al. Inductive Conformal Prediction Enhanced LSTM-SNN Network: Applications to Birds and UAVs Recognition. IEEE Geosci Remote Sens Lett. 2024;21:1–5.

16. Wang N. “Killing Two Birds with One Stone”? A Case Study of Development Use of Drones. In: International Symposium on Technology and Society, Proceedings. 2020. p. 339–45.

17. Park S-H, Jung J-H, Cha S, Kim S-B, Youn S, Eo I-S, et al. In-depth analysis of the micro-doppler features to discriminate drones and birds. In: 2020 International Conference on Electronics, Information, and Communication, ICEIC 2020. 2020.

18. Gong J, Yan J, Li D, Kong D, Hu H. Interference of radar detection of drones by birds. Prog Electromagn Res M. 2019;81:1–11.

19. Rahman S, Robertson DA. Radar micro-Doppler signatures of drones and birds at K-band and W-band. Sci Rep. 2018;8(1).

20. Vas E, Lescroël A, Duriez O, Boguszewski G, Grémillet D. Approaching birds with drones: First experiments and ethical guidelines. Biol Lett. 2015;11(2).

21. An W, Lin T, Zhang P. An Autonomous Soaring for Small Drones Using the Extended Kalman Filter Thermal Updraft Center Prediction Method Based on Ordinary Least Squares. Drones. 2023;7(10).

22. Wang Q, Wang L, Yu L, Wang J, Zhang X. An ID-Based Robust Identification Approach Toward Multitype Noncooperative Drones. IEEE Sens J. 2023;23(9):10179–92.

23. Orange JP, Bielefeld RR, Cox WA, Sylvia AL. Impacts of Drone Flight Altitude on Behaviors and Species Identification of Marsh Birds in Florida. Drones. 2023;7(9).

24. Dale H, Jahangir M, Baker CJ, Antoniou M, Harman S, Ahmad BI. Convolutional Neural Networks for Robust Classification of Drones. In: Proceedings of the IEEE Radar Conference. 2022.

25. Ihekoronye VU, Ajakwe SO, Kim DS, Lee JM. Aerial Supervision of Drones and Other Flying Objects Using Convolutional Neural Networks. In: 4th International Conference on Artificial Intelligence in Information and Communication, ICAIIC 2022 - Proceedings. 2022. p. 69–74.

26. Aldhoun J, Elmahy R, Littlewood DTJ. Phylogenetic relationships within Dicrocoeliidae (Platyhelminthes: Digenea) from birds from the Czech Republic using partial 28S rDNA sequences. Parasitol Res. 2018;117(11):3619–24.

27. Han J, Ren Y, Brighente A, Conti M. RANGO: A Novel Deep Learning Approach to Detect Drones Disguising from Video Surveillance Systems. ACM Trans Intell Syst Technol. 2024;15(2):1–21.

28. Alzboon MS, Qawasmeh S, Alqaraleh M, Abuashour A, Bader AF, Al-Batah M. Pushing the Envelope: Investigating the Potential and Limitations of ChatGPT and Artificial Intelligence in Advancing Computer Science Research. In: 2023 3rd International Conference on Emerging Smart Technologies and Applications, eSmarTA 2023. 2023.

29. Alzboon M. Semantic Text Analysis on Social Networks and Data Processing: Review and Future Directions. Inf Sci Lett. 2022;11(5):1371–84.

30. Alzboon MS, Al-Batah M, Alqaraleh M, Abuashour A, Bader AF. A Comparative Study of Machine Learning Techniques for Early Prediction of Diabetes. In: 2023 IEEE 10th International Conference on Communications and Networking, ComNet 2023 - Proceedings. 2023. p. 1–12.

31. Alzboon MS, Bader AF, Abuashour A, Alqaraleh MK, Zaqaibeh B, Al-Batah M. The Two Sides of AI in Cybersecurity: Opportunities and Challenges. In: Proceedings of 2023 2nd International Conference on Intelligent Computing and Next Generation Networks, ICNGN 2023. 2023.

32. Alzboon MS, Al-Batah M, Alqaraleh M, Abuashour A, Bader AF. A Comparative Study of Machine Learning Techniques for Early Prediction of Prostate Cancer. In: 2023 IEEE 10th International Conference on Communications and Networking, ComNet 2023 - Proceedings. 2023. p. 1–12.

FINANCING