doi: 10.56294/dm2024.649

ORIGINAL

Mobile-Based Skin Cancer Classification System Using Convolutional Neural Network


Ihsanul Insan Aljundi1 *, Dony Novaliendry1 *, Yeka Hendriyani1 *, Syafrijon1 *

1Universitas Negeri Padang, Department of Electronics Engineering. Padang, Indonesia.

Cite as: Aljundi II, Novaliendry D, Hendriyani Y, Syafrijon S. Mobile-Based Skin Cancer Classification System Using Convolutional Neural Network. Data and Metadata. 2024; 3:.649. https://doi.org/10.56294/dm2024.649

Submitted: 14-06-2024 Revised: 14-09-2024 Accepted: 23-12-2024 Published: 24-12-2024

Editor: Dr. Adrián Alejandro Vitón Castillo

Corresponding Author: Dony Novaliendry *

ABSTRACT

Introduction: skin cancer is a growing concern worldwide, often exacerbated by limited awareness of the disease and limited access to diagnostic tools. Early detection is critical for improving survival rates and patient outcomes. This study developed a convolutional neural network (CNN) algorithm integrated into a mobile application to address this issue.

Method: the researchers employed an agile methodology to design and implement a CNN-based skin cancer detection system using the VGG16 architecture. A dataset of skin cancer images from the International Skin Imaging Collaboration (ISIC) was used, consisting of 1500 images divided into six classes. The model was trained on 1200 images and tested on 300 images. Preprocessing steps included resizing images to 224x224 pixels, normalization, and image augmentation to enhance model generalization.

Results: the trained model achieved a test accuracy of 86,67 % in classifying skin cancer types, with the highest performance for healthy skin (100 % accuracy) and melanoma (98 % recall). The mobile application allows users to upload or capture images of skin lesions and receive automated classification results, including lesion characteristics such as asymmetry, border, color, and diameter. Additional features include user authentication and history tracking, enhancing usability and accessibility.

Conclusions: the study successfully developed a reliable CNN-based skin cancer detection system integrated into a user-friendly mobile application. The application provides a valuable tool for early detection and awareness of skin cancer. Future work should focus on clinical validation, expanding the dataset to include diverse populations, and optimizing the system for mobile deployment.

Keywords: CNN; VGG16 Architecture; Skin Cancer; Early Detection; Mobile Application.


INTRODUCTION

Skin cancer is one of the most commonly diagnosed dermatological conditions worldwide, and its incidence is increasing. It arises from the uncontrolled proliferation of skin cells, which can eventually spread to other parts of the body.(1) According to data released by the World Cancer Research Fund (WCRF) in 2020, there were approximately 324 635 cases of melanoma and over one million cases of non-melanoma skin cancer globally. In Indonesia, the Indonesia Cancer Care Community (ICCC) reported 6170 cases of non-melanoma skin cancer and 1392 cases of melanoma in 2018. Much of the mortality from skin cancer is attributed to limited public understanding of the disease: many people ignore early symptoms because they often resemble those of milder skin conditions.

Various factors contribute to the development of skin cancer, including increased exposure to ultraviolet radiation, genetic factors, unhealthy lifestyles, and human papillomavirus infection.(2,3) In recent years, advances in artificial intelligence, particularly Convolutional Neural Networks (CNNs), have opened new opportunities for the early detection of skin cancer. The CNN is a deep learning algorithm developed from the multi-layer perceptron (MLP) and is widely used for data classification, especially image classification.(4) CNNs excel at image processing tasks because their architecture is specifically designed to handle and analyze visual input efficiently.(5) They have proven effective in classifying medical images, including skin lesions, and are good feature extractors for medical image classification tasks.(6) Previous research, such as that of Esteva et al.,(7) has shown that CNN models can classify skin lesions at a level comparable to dermatologists.
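To make the idea of a CNN as a feature extractor concrete, the following is a minimal NumPy sketch of the three operations a convolutional block chains together: a 3×3 convolution, ReLU activation, and 2×2 max pooling. The toy 6×6 image and the hand-crafted vertical-edge kernel are illustrative assumptions; in a trained CNN such as VGG16 the kernel values are learned from data rather than set by hand.

```python
import numpy as np

def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2-D cross-correlation, which deep-learning libraries call convolution."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # Weighted sum of the 3x3 window under the kernel
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x: np.ndarray) -> np.ndarray:
    """Keep positive responses, zero out negative ones."""
    return np.maximum(x, 0.0)

def max_pool2x2(x: np.ndarray) -> np.ndarray:
    """Take the maximum of each non-overlapping 2x2 window (halves height and width)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    x = x[:h, :w]
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# Toy 6x6 image (an assumption): dark left half, bright right half, i.e. a vertical edge
image = np.zeros((6, 6))
image[:, 3:] = 1.0
# Hand-crafted vertical-edge detector; a CNN would learn kernels like this one
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

features = max_pool2x2(relu(conv2d(image, kernel)))
print(features)  # [[3. 3.] [3. 3.]]: strong response where the edge is
```

The convolution responds only where intensity changes from left to right, and pooling keeps that response while shrinking the map; stacking many such blocks is what lets a CNN build up lesion-level features from edges and textures.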

A study by Nurkhasanah and Murinto(8) on facial skin disease classification using a CNN achieved high accuracy: 100 % in training, 88 % in testing, and 90 % on new data. At the same time, the growing use of smartphones worldwide makes mobile applications an ideal platform for CNN-based skin cancer detection. According to GSMA, the number of smartphone users is expected to reach 6 billion by 2025, offering great potential to bring skin cancer detection technology to more users.

This research aims to develop and implement a CNN algorithm in a mobile application for skin cancer classification. Android, an open-source Linux-based operating system, is designed for touchscreen mobile devices such as smartphones and tablets and serves as an interface between users and hardware components.(9) A mobile application is a ready-made program that performs a specific function and runs on a mobile device.(10,26) The application is designed to help users detect suspicious skin lesions, raise public awareness of the importance of early skin cancer detection, and provide an effective tool for self-diagnosis of skin conditions.

By integrating CNN technology into a mobile application, this research is expected to contribute to improving the accessibility and effectiveness of early skin cancer detection. Such an implementation could not only reduce the burden on healthcare systems but also encourage people to be more proactive in regularly checking their skin, as demonstrated by Han et al.(11)

In this research, we explored the design and implementation of a skin cancer classification system using CNN, as well as the integration of this technology into a mobile application platform. We also evaluated the system's performance in terms of detection accuracy and ease of use, with the ultimate goal of producing a reliable and easily accessible solution for early skin cancer detection.

METHOD

This study employs the Agile software development methodology. Agile is an approach that emphasizes speed in development and responsiveness to client-requested changes while actively involving clients in the process. The resulting software or modules are a collaborative effort of all involved parties.(12)

A key feature of Agile development is its iterative workflow, which allows revisions and changes to be implemented within a single cycle without waiting for the entire process to be completed.(13) The stages of the Agile method are requirements, design, development, testing, deployment, and review. Studies(14,27) show that applying Agile in software development projects increases team efficiency and end-user satisfaction, because the method allows rapid adaptation to changing user needs.

Dataset and Preprocessing

The data used is a dataset of skin cancer images obtained from the International Skin Imaging Collaboration (ISIC). The data consists of 6 classes, namely actinic keratosis, basal cell carcinoma, melanoma, seborrheic keratosis, squamous cell carcinoma, and healthy skin. The images are in .jpg format, were taken with various types of cameras, and total 1500. Table 1 lists the number of images in each class.

Table 1. Number of Skin Cancer Images Used per Class

| No. | Class | Number of Images |
|---|---|---|
| 1 | Actinic Keratosis | 250 |
| 2 | Basal Cell Carcinoma | 250 |
| 3 | Melanoma | 250 |
| 4 | Seborrheic Keratosis | 250 |
| 5 | Squamous Cell Carcinoma | 250 |
| 6 | Healthy Skin | 250 |

The images were then resized to 224x224 pixels. This size corresponds to the standard input of the VGG16 architecture, which was designed for that image size, so the model can process and analyze the images efficiently.

After resizing, the images are normalized. Normalization maps pixel intensities onto a common range; stretched over the full range of intensity values, it can yield a sharper and clearer image, even to the human eye. Normalization also speeds up model convergence during training.
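The two preprocessing steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: it uses nearest-neighbour resizing to stay dependency-free (a library resize such as bilinear interpolation is more typical in practice), and it assumes normalization means scaling 8-bit pixels to [0, 1], since the exact scheme is not given. The random "photo" stands in for an ISIC .jpg.

```python
import numpy as np

TARGET_SIZE = 224  # standard VGG16 input height and width

def resize_nearest(image: np.ndarray, size: int = TARGET_SIZE) -> np.ndarray:
    """Nearest-neighbour resize of an H x W x C image to size x size x C.

    Assumption: nearest-neighbour is used only to keep this sketch self-contained.
    """
    h, w = image.shape[:2]
    rows = np.arange(size) * h // size  # source row for each output row
    cols = np.arange(size) * w // size  # source column for each output column
    return image[rows][:, cols]

def normalize(image: np.ndarray) -> np.ndarray:
    """Assumed scheme: rescale 8-bit pixel values from [0, 255] to [0.0, 1.0]."""
    return image.astype(np.float32) / np.float32(255.0)

# A random 8-bit RGB image of arbitrary size stands in for a dataset photo
rng = np.random.default_rng(0)
photo = rng.integers(0, 256, size=(450, 600, 3), dtype=np.uint8)

x = normalize(resize_nearest(photo))
print(x.shape, x.dtype)  # (224, 224, 3) float32
```

If pretrained ImageNet weights are used, note that Keras' `tf.keras.applications.vgg16.preprocess_input` applies a different normalization (channel-wise zero-centring on ImageNet statistics rather than scaling to [0, 1]), so the choice should match how the weights were trained.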

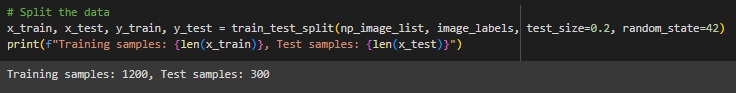

In this study, the 1500 skin cancer images were split 80 % for training and 20 % for testing, giving 1200 training images and 300 test images. Figure 1 shows the code used to split the data into training and testing sets.

Figure 1. Splitting Data
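The 80/20 split described above can be sketched in plain Python. This is a hedged illustration, not the code in figure 1: the file names are hypothetical, and `split_dataset` is a helper introduced here that performs a simple seeded random split.

```python
import random

def split_dataset(items, test_fraction=0.2, seed=42):
    """Shuffle a copy of `items` with a fixed seed and split it into (train, test)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

CLASSES = ["actinic_keratosis", "basal_cell_carcinoma", "healthy_skin",
           "melanoma", "seborrheic_keratosis", "squamous_cell_carcinoma"]

# Hypothetical (file name, label) pairs: 250 images per class, as in table 1
dataset = [(f"{c}_{i:03d}.jpg", c) for c in CLASSES for i in range(250)]

train, test = split_dataset(dataset)
print(len(train), len(test))  # 1200 300
```

A plain random split does not guarantee an equal number of test images per class. If balanced per-class test sets are wanted, scikit-learn's `train_test_split` with the `stratify` argument is a common alternative.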

Image Augmentation

Figure 2. Image Augmentation
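Figure 2 shows the augmentation code used in the study, which is not reproduced in the text. As a hedged illustration of the technique only, the sketch below applies random flips, 90° rotations, and brightness jitter to a normalized image. The choice of transformations and the ±20 % brightness range are assumptions, not the authors' settings.

```python
import numpy as np

def augment(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Return a randomly flipped, rotated and brightness-jittered copy.

    `image` is an H x W x C float array in [0, 1]. The transformations and
    their ranges are illustrative assumptions.
    """
    out = image
    if rng.random() < 0.5:
        out = out[:, ::-1]                     # horizontal flip
    if rng.random() < 0.5:
        out = out[::-1, :]                     # vertical flip
    # Rotate by 0, 90, 180 or 270 degrees; shape is preserved for square images
    out = np.rot90(out, k=int(rng.integers(0, 4)))
    factor = rng.uniform(0.8, 1.2)             # assumed brightness jitter of +/-20 %
    return np.clip(out * factor, 0.0, 1.0)     # keep values in the normalized range

rng = np.random.default_rng(0)
img = rng.random((224, 224, 3))
batch = np.stack([augment(img, rng) for _ in range(4)])
print(batch.shape)  # (4, 224, 224, 3)
```

Flips and rotations suit dermoscopic images because a skin lesion has no canonical orientation, so each variant is still a plausible example of the same class.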

CNN Model Development

VGG16 is a CNN model that uses convolutional layers with small (3×3) filters.(17) Its main layers are convolution and pooling layers. The architecture consists of 5 convolutional blocks, each with 2-3 convolution layers (Conv2D) followed by a MaxPooling2D layer, and the number of filters increases from 64 in the first block to 512 in the fifth block. After the convolution and pooling stages, the resulting features are still 3-dimensional, so they are flattened into a 1-dimensional vector of 25088 elements (7×7×512). Next, the architecture adds a dense layer with 4096 units and ReLU activation, followed by dropout with a rate of 0,5 to reduce overfitting. The last layer is a dense layer with 1000 units and softmax activation, the configuration commonly used for ImageNet classification.
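The 25088-element figure above can be checked with a short calculation that traces the feature-map size through VGG16's five blocks and counts convolutional parameters along the way. The sketch assumes the standard VGG16 layout: 'same'-padded 3×3 convolutions that keep the spatial size, and a 2×2, stride-2 max pool at the end of each block that halves it.

```python
# (number of conv layers, filters) for each of VGG16's five blocks
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]

def vgg16_feature_summary(size=224, channels=3, kernel=3):
    """Trace spatial size and count conv parameters through the five blocks.

    Assumes 'same'-padded 3x3 convolutions (size unchanged) and a 2x2,
    stride-2 max pool at the end of every block (size halved).
    """
    conv_params = 0
    for n_layers, filters in VGG16_BLOCKS:
        for _ in range(n_layers):
            # weights (k * k * in_channels * filters) plus one bias per filter
            conv_params += kernel * kernel * channels * filters + filters
            channels = filters
        size //= 2  # max pooling halves height and width
    return size, channels, size * size * channels, conv_params

size, channels, flat, params = vgg16_feature_summary()
fc1_params = flat * 4096 + 4096  # first 4096-unit dense layer: weights + biases
print(size, channels, flat, params, fc1_params)  # 7 512 25088 14714688 102764544
```

The spatial size goes 224, 112, 56, 28, 14, 7, so the flattened vector has 7 × 7 × 512 = 25088 elements. The first 4096-unit dense layer alone holds about 102.8 million parameters, roughly seven times the 14.7 million in all thirteen convolutional layers, which is relevant to the mobile deployment constraints discussed later.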

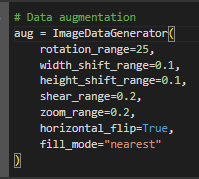

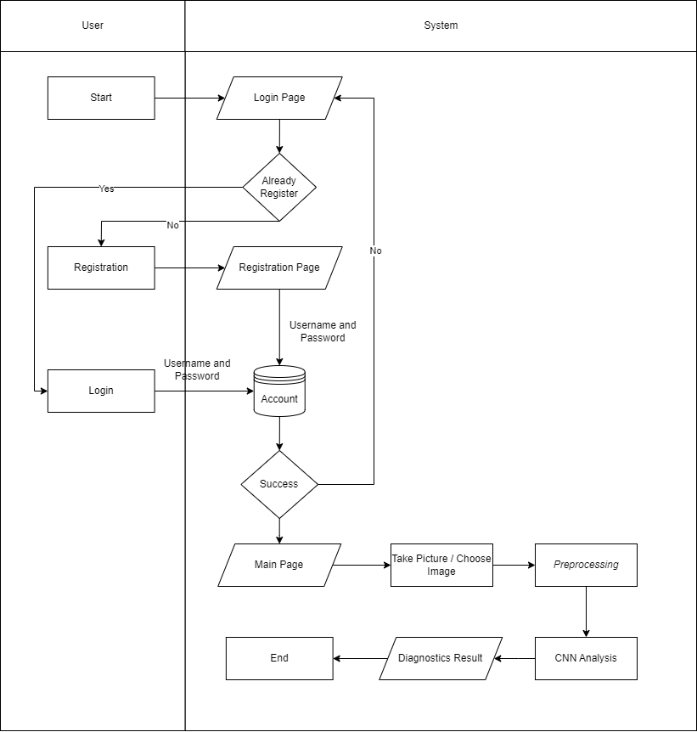

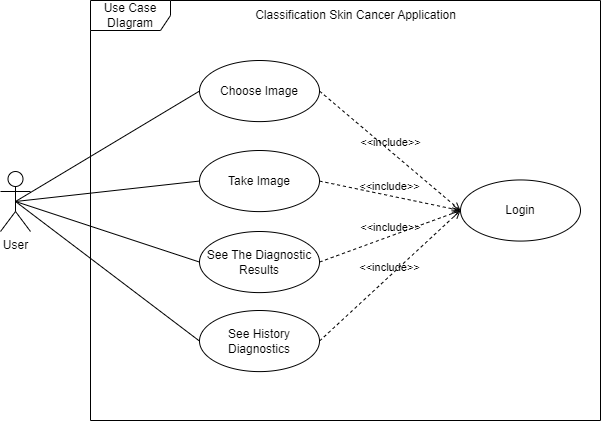

System Design

Figure 3. System Flowmap of the Skin Cancer Classification Application

UML

Unified Modeling Language (UML) is a graphical language used to visualize, build, and document object-oriented software systems.(18) UML modeling uses several types of diagrams; the diagrams used to design this application are illustrated below.

Figure 4. Use Case Diagram of the Skin Cancer Classification Application

Use case diagrams, such as the one in figure 4, are a standard notation often included in university computer science curricula.(19) The use case diagram for the mobile-based skin cancer detection application illustrates the interaction between the user and the system, showing how the user accesses features such as taking photos of skin lesions, uploading images, and viewing diagnosis results.

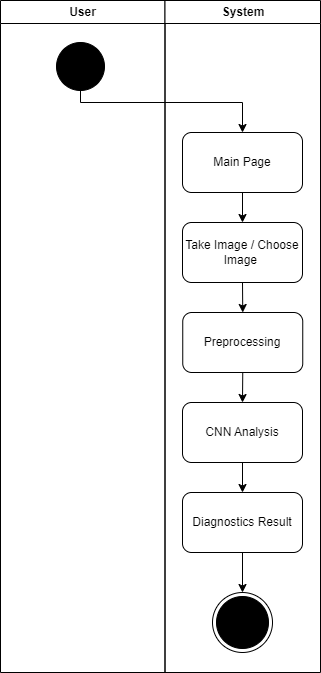

Figure 5. Activity Diagram of the Skin Cancer Classification Application

An activity diagram visually represents the sequence of events or the flow of actions within a use case.(20) The activity diagram of the skin cancer detection application in figure 5 shows the flow of user activity, visualizing the steps the user takes to detect skin cancer from uploaded or captured images.

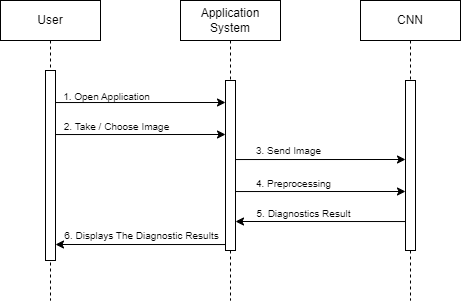

Figure 6. Sequence Diagram of the Skin Cancer Classification Application

System Requirements

In the skin cancer detection application development project using CNN, system requirements analysis involves several important components that support the application development and deployment process. The following is a brief explanation of some of the necessary system requirements.

First, Google Colab is a cloud-based platform that lets the authors write and run Python code directly in the browser. It provides free access to GPUs, which is particularly useful for training computationally intensive CNN models, and supports machine learning libraries such as TensorFlow and Keras, which were used to build and train the skin cancer detection model.

For development and code management, Visual Studio Code, a popular and lightweight source code editor that supports many programming languages and development tools, was used to develop, edit, and debug the application code.

Once the model was developed, the user interface was created with Flutter, an open-source framework developed by Google for building natively compiled applications for mobile, web, and desktop from a single codebase. Flutter can build mobile applications for both Android and iOS.(21) In the skin cancer detection app, Flutter is used to develop a responsive and attractive user interface (UI) on mobile devices.

Finally, Firebase serves as a reliable backend platform. Firebase is an application development platform from Google that makes it easier for developers to build applications on various platforms,(22) providing services such as a real-time database, user authentication, cloud storage, and application analytics. In the skin cancer detection application, Firebase is used to manage user data and authentication and provides the backend infrastructure.

RESULTS AND DISCUSSION

Model Performance

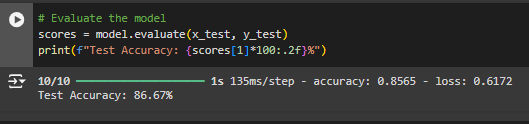

After the training process is complete, the next step is to evaluate the performance of the model with test data (xtest, ytest). The results of this evaluation show how well the model can generalize to new data that has never been seen during training.

Figure 7. Model Training Accuracy Results

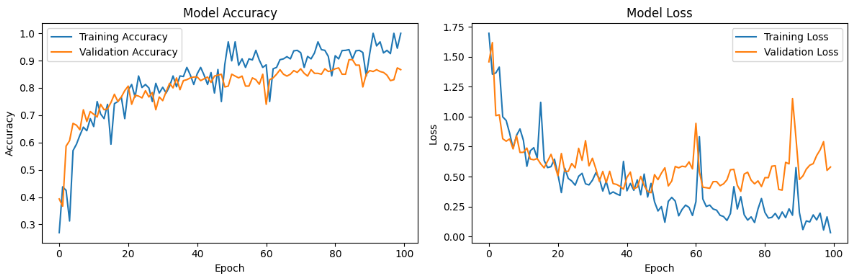

From the evaluation results in figure 7, the model achieved a test accuracy of 86,67 %, meaning that it correctly classified 260 of the 300 test images. The training results also reveal several points about the model's performance and generalization ability. In the Model Accuracy graph, training accuracy increases sharply from the beginning of training until around the 60th epoch and then continues to increase gradually until it approaches 100 %. Validation accuracy also increases initially, but after about 20 epochs it begins to fluctuate and stabilizes at a somewhat lower level than training accuracy; this gap between the two curves indicates some degree of overfitting. Figure 8 shows the accuracy and loss curves of the model.

Figure 8. Model Accuracy and Loss Results

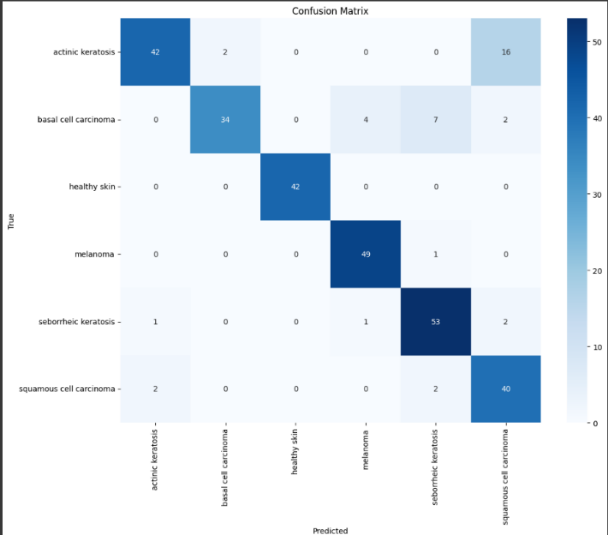

A confusion matrix compares the actual and predicted labels of the test data, here across the 6 classes of the skin cancer dataset. Figure 9 shows the confusion matrix of the VGG16 architecture. Misclassifications occurred for 18 images in the actinic keratosis class, 13 in the basal cell carcinoma class, 1 in the melanoma class, 4 in the seborrheic keratosis class, and 4 in the squamous cell carcinoma class.

Figure 9. Confusion Matrix of the VGG16 Model
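The per-class misclassification counts reported for figure 9 can be cross-checked against the overall test accuracy with simple arithmetic. The counts below come from the text; the zero for healthy skin follows from its recall of 1,00 in table 2.

```python
# Misclassified test images per true class, as reported for figure 9
misclassified = {
    "Actinic Keratosis": 18,
    "Basal Cell Carcinoma": 13,
    "Melanoma": 1,
    "Seborrheic Keratosis": 4,
    "Squamous Cell Carcinoma": 4,
    "Healthy Skin": 0,  # recall of 1.00 in table 2
}
n_test = 300  # 20 % of the 1500 images

errors = sum(misclassified.values())
accuracy = (n_test - errors) / n_test
print(errors, f"{accuracy:.2%}")  # 40 86.67%
```

The 40 errors leave 260 correct predictions out of 300, which matches the reported test accuracy of 86,67 %.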

These confusion matrix results show that performance varies across the classes. The model performed best on healthy skin (100 % precision, recall, and F1-score), followed by melanoma (98 % recall). These results are comparable to previous studies, such as that of Esteva et al.,(7) who reported 91 % accuracy in classifying skin cancer with a CNN. The strong melanoma performance (94 % F1-score) is particularly significant because it aligns with the findings of Han et al.,(11) who demonstrated that deep learning algorithms can achieve performance comparable to dermatologists in melanoma classification.

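The model was weaker on actinic keratosis (80 % F1-score) and squamous cell carcinoma (77 % F1-score), for different reasons. Actinic keratosis has high precision (0,93) but low recall (0,70), so many actinic keratosis lesions were assigned to other classes; squamous cell carcinoma has high recall (0,91) but low precision (0,67), so other lesions were often predicted as squamous cell carcinoma. This difficulty is consistent with Shah et al.,(1) who noted that these types of skin lesions often share visual characteristics, making them harder to distinguish.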

Several performance measures can be derived from a confusion matrix, such as accuracy, precision, recall, specificity, and F1-score.(23) Table 2 below reports the per-class precision, recall, and F1-score of the VGG16 model.

Table 2. Performance Evaluation Results of the VGG16 Architectural Model

| Class | Precision | Recall | F1-Score |
|---|---|---|---|
| Actinic Keratosis | 0,93 | 0,70 | 0,80 |
| Basal Cell Carcinoma | 0,94 | 0,72 | 0,82 |
| Healthy Skin | 1,00 | 1,00 | 1,00 |
| Melanoma | 0,91 | 0,98 | 0,94 |
| Seborrheic Keratosis | 0,84 | 0,93 | 0,88 |
| Squamous Cell Carcinoma | 0,67 | 0,91 | 0,77 |
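The per-class metrics in table 2 follow from standard definitions. The sketch below shows how precision, recall, specificity, and F1-score are derived for one class of a multi-class confusion matrix, and confirms that each F1-score in table 2 equals the harmonic mean of its precision and recall. The 3-class toy matrix is hypothetical and not the study's data; the full confusion matrix of figure 9 is not reproduced here.

```python
def per_class_metrics(cm, k):
    """Precision, recall, specificity and F1 for class k.

    cm[i][j] counts test images whose true class is i and predicted class is j.
    """
    n = len(cm)
    tp = cm[k][k]
    fn = sum(cm[k][j] for j in range(n)) - tp  # class-k images predicted as another class
    fp = sum(cm[i][k] for i in range(n)) - tp  # other images predicted as class k
    tn = sum(map(sum, cm)) - tp - fn - fp
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, specificity, f1

def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# Check: F1 recomputed from the precision and recall columns of table 2
table2 = {
    "Actinic Keratosis": (0.93, 0.70, 0.80),
    "Basal Cell Carcinoma": (0.94, 0.72, 0.82),
    "Healthy Skin": (1.00, 1.00, 1.00),
    "Melanoma": (0.91, 0.98, 0.94),
    "Seborrheic Keratosis": (0.84, 0.93, 0.88),
    "Squamous Cell Carcinoma": (0.67, 0.91, 0.77),
}
for name, (p, r, f1) in table2.items():
    assert round(f1_score(p, r), 2) == f1, name

# Toy 3-class confusion matrix (hypothetical counts, not the study's data)
toy = [[8, 1, 1],
       [2, 7, 1],
       [0, 0, 10]]
print([round(v, 3) for v in per_class_metrics(toy, 0)])  # [0.8, 0.8, 0.9, 0.8]
```

Because F1 is a harmonic mean, it is pulled toward the weaker of the two values. This is why actinic keratosis (low recall) and squamous cell carcinoma (low precision) both end up with the lowest F1-scores despite a strong score on the other metric.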

Analysis of Android Application

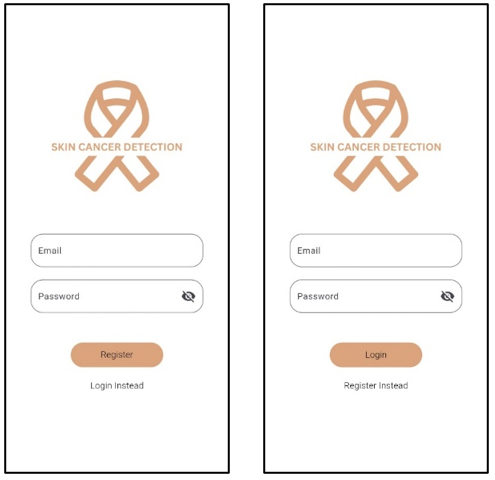

The Android application has 6 layouts. Figure 10 shows the registration and login pages of the skin cancer classification application.

Figure 10. Registration and Login Page

On the registration page in figure 10, the user must fill in 2 fields, an email address and a password, to register. If the user already has an account, they can press the login button instead. After the user logs in with the email and password used during registration, the main page of the skin cancer classification application appears.

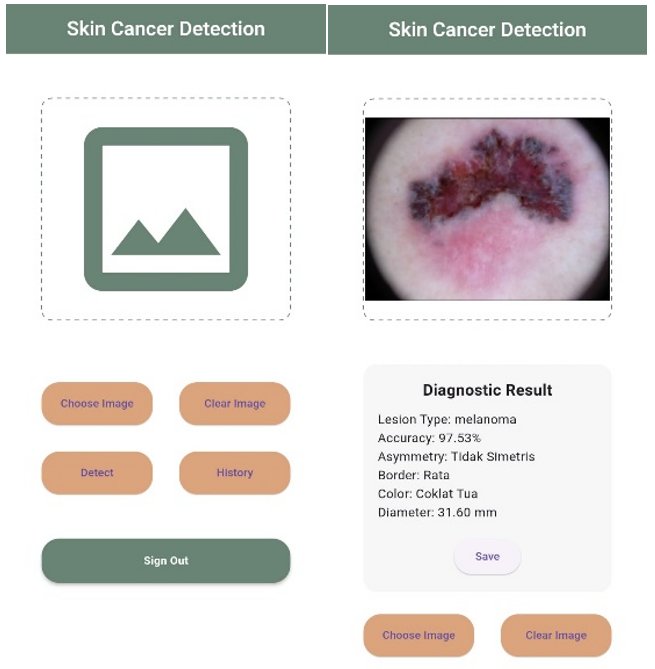

Figure 11. Main and Detection Result Page

As figure 11 shows, the main page has 5 buttons. The select image button lets users take a picture with the camera or choose one from the gallery. The delete image button removes the image from the frame. The detection button classifies the skin lesion in the frame using the TensorFlow Lite model embedded in the Flutter application. The history button takes users to a page listing the results of previous skin lesion detections. Finally, the sign out button logs the user out of their account. After the user selects an image and presses the detection button, the detection result page displays the lesion type, accuracy, asymmetry, border, color, and diameter.
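The app itself runs inference in Flutter through TensorFlow Lite, and its code is not shown in the paper. The Python sketch below illustrates only the post-processing logic that turns the model's six output scores into the lesion type and percentage shown on the result page. The label order and the raw scores are hypothetical; the order must match the one used when the model was trained.

```python
import math

# Hypothetical label order (assumption); it must match the training label order
LABELS = ["Actinic Keratosis", "Basal Cell Carcinoma", "Healthy Skin",
          "Melanoma", "Seborrheic Keratosis", "Squamous Cell Carcinoma"]

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def interpret(probabilities):
    """Return (lesion type, confidence in %) for the most probable class."""
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return LABELS[best], round(100 * probabilities[best], 2)

probs = softmax([0.2, 0.1, -1.0, 3.0, 0.5, 0.3])  # made-up raw scores
label, confidence = interpret(probs)
print(label, confidence)
```

If the exported model already ends in a softmax layer, as VGG16's classifier does, its outputs are already probabilities and only `interpret` is needed.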

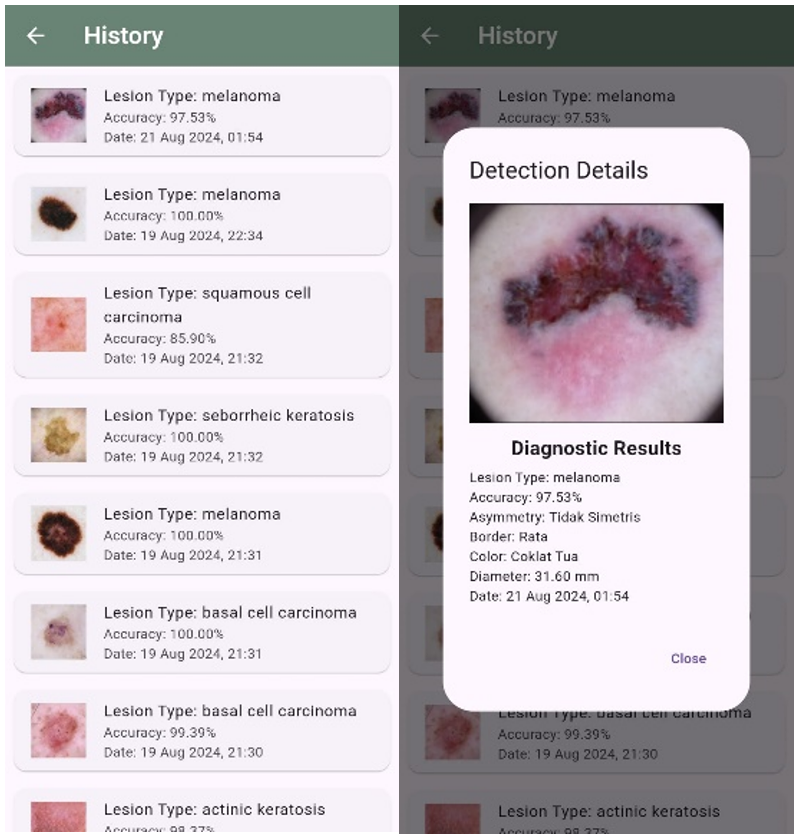

Figure 12. History and Pop Up Details Detection Page

Figure 12 shows the history page, where users can view previous detection results stored in Firestore, including the skin lesion type, accuracy rate, and other attributes such as asymmetry, border, color, and lesion diameter. When the user selects one of these entries, a pop-up appears showing the details of that detection.

The mobile application successfully integrates the CNN model with a user-friendly interface and addresses several key aspects of mobile healthcare applications. First, it processes skin lesion images in real time, giving users immediate feedback. Second, Firebase authentication protects user data, addressing privacy concerns that are crucial in healthcare applications. Third, the history feature lets users monitor changes over time and keep a record of previous results, which is essential for ongoing health monitoring. Finally, presenting results according to the ABCD rule (Asymmetry, Border, Color, Diameter) gives users structured and clinically relevant information about their skin lesions.
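The paper does not describe how the application derives the asymmetry, border, color, and diameter attributes. Purely as an assumption, the sketch below shows one simple way to compute two of them from a binary lesion mask: asymmetry as the share of lesion pixels left uncovered when the lesion is mirrored, and diameter as that of a circle with the same area. The segmentation mask and the `mm_per_pixel` scale are hypothetical inputs that a real app would need to obtain separately.

```python
import numpy as np

def crop_to_lesion(mask: np.ndarray) -> np.ndarray:
    """Crop a boolean lesion mask to the lesion's bounding box."""
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    return mask[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]

def asymmetry(mask: np.ndarray) -> float:
    """Mean share of lesion pixels not covered by the lesion's left-right and
    top-bottom mirror images (0.0 = symmetric about both axes)."""
    m = crop_to_lesion(mask)
    area = m.sum()
    # XOR counts non-overlapping pixels on both sides, hence the division by 2 * area
    scores = [np.logical_xor(m, flipped).sum() / (2 * area)
              for flipped in (m[:, ::-1], m[::-1, :])]
    return float(np.mean(scores))

def diameter_mm(mask: np.ndarray, mm_per_pixel: float) -> float:
    """Diameter of the circle with the same area as the lesion, in mm."""
    return float(2 * np.sqrt(mask.sum() / np.pi) * mm_per_pixel)

yy, xx = np.mgrid[:101, :101]
disk = (yy - 50) ** 2 + (xx - 50) ** 2 <= 30 ** 2  # symmetric toy lesion, radius 30 px
wedge = np.tri(50, dtype=bool)                      # clearly asymmetric toy lesion

print(asymmetry(disk), round(asymmetry(wedge), 2))  # 0.0 0.49
print(round(diameter_mm(disk, mm_per_pixel=0.1), 1))  # 6.0 (hypothetical scale)
```

The clinical ABCD mnemonic commonly flags lesions wider than 6 mm, so converting pixels to millimetres requires a known image scale; without one, the diameter can only be reported in pixels.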

Despite these achievements, the study identified several areas that require further development. The current dataset, while adequate for an initial implementation, would benefit from greater ethnic diversity and more examples of rare skin cancer variants; this limitation affects how well the model generalizes across population groups. From a technical perspective, mobile processing power constrains the complexity of models that can be deployed effectively. System performance also depends on the quality of images captured by mobile device cameras, and the cloud-based features require a stable network connection to function optimally.

Clinical validation represents another crucial area for future development. The system would benefit from extensive trials in real-world clinical settings and direct comparison with dermatologist diagnoses across diverse patient populations. While the system's 86,67 % accuracy is promising, it should be understood within its intended context as a screening tool rather than a definitive diagnostic solution. This perspective aligns with Yadav and Jadhav,(6) who emphasize that AI systems should support rather than replace clinical decision-making in healthcare settings. These limitations and considerations provide clear directions for future research and development to enhance the system's reliability and practical utility in skin cancer screening.

CONCLUSIONS

This study developed a CNN model based on the VGG16 architecture that classifies skin lesion images into six classes with a test accuracy of 86,67 %. In addition, a mobile application was designed to allow users to perform self-diagnosis efficiently and independently. This application fulfills the research objective of creating an accessible and reliable tool for early detection, enabling users to identify potential skin cancer cases before seeking professional medical consultation. Integrating the mobile application with the CNN model supports practical usability and reliability, making it a valuable resource for early skin cancer screening.

Future research is encouraged to enhance the model's generalization and applicability by testing it on larger and more diverse datasets. Improving the model's robustness under varying conditions, such as different image sources and lighting environments, is essential. Furthermore, exploring alternative CNN architectures optimized for mobile deployment is recommended to increase efficiency and performance. These efforts will further advance the application and expand its usability for broader early detection initiatives.

BIBLIOGRAPHIC REFERENCES

1. Shah A, Shah M, Pandya A, Sushra R, Sushra R, Mehta M, et al. A comprehensive study on skin cancer detection using artificial neural network (ANN) and convolutional neural network (CNN). Clin eHealth [Internet]. 2023;6:76–84. Available from: https://doi.org/10.1016/j.ceh.2023.08.002

2. Novaliendry D, Oktoria, Yang C-H, Desnelita Y, Irwan, Sanjaya R, et al. Hemodialysis Patient Death Prediction Using Logistic Regression. Int. J. Onl. Eng. 2023;19(09):66-80. Available from: https://doi.org/10.3991/ijoe.v19i09.40917

3. Wilvestra S, Lestari S, Asri E. Studi Retrospektif Kanker Kulit di Poliklinik Ilmu Kesehatan Kulit dan Kelamin RS Dr. M. Djamil Padang Periode Tahun 2015-2017. J Kesehat Andalas. 2018;7(Supplement 3):47.

4. Bariyah T, Rasyidi MA, Ngatini N. Convolutional Neural Network untuk Metode Klasifikasi Multi-Label pada Motif Batik. TechnoCom. 2021;20(1):155–65.

5. Novaliendry D, Pratama MFP, Budayawan K, Huda Y, Rahiman WMY. Design and Development of Sign Language Learning Application for Special Needs Students Based on Android Using Flutter. Int. J. Onl. Eng. 2023;19(16):76-92. Available from: https://doi.org/10.3991/ijoe.v19i16.44669

6. Yadav SS, Jadhav SM. Deep convolutional neural network based medical image classification for disease diagnosis. J Big Data [Internet]. 2019;6(1). Available from: https://doi.org/10.1186/s40537-019-0276-2

7. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature [Internet]. 2017;542(7639):115–8. Available from: http://dx.doi.org/10.1038/nature21056

8. Nurkhasanah, Murinto. Klasifikasi Penyakit Kulit Wajah Menggunakan Metode Convolutional Neural Network Classification of Facial Skin Diseases Using the Method of the Convolutional Neural Network. Sainteks [Internet]. 2021;18(2):183–90. Available from: https://www.kaggle.com/datasets

9. Novaliendry D, Huda A, Annisa L, Costa RRK, Yudhistira, Eliza F. The Effectiveness of Web-Based Mobile Learning for Mobile Subjects on Computers and Basic Networks in Vocational High Schools. Int. J. Interact. Mob. Technol. 2023;17(09):20-30. Available from: https://doi.org/10.3991/ijim.v17i09.39337

10. Posumah A, Waworuntu J, Komansilan T. Aplikasi Mobile Pengenalan Budaya Pulau Sulawesi Berbasis Augmented Reality. Edutik J Pendidik Teknol Inf dan Komun. 2021;1(5):513–27.

11. Han SS, Kim MS, Lim W, Park GH, Park I, Chang SE. Classification of the Clinical Images for Benign and Malignant Cutaneous Tumors Using a Deep Learning Algorithm. J Invest Dermatol [Internet]. 2018;138(7):1529–38. Available from: https://doi.org/10.1016/j.jid.2018.01.028

12. Nursakti N, Asri S. Perancangan Aplikasi Online Shop pada Toko Nuzhly Shop Menggunakan Metode Agile. J Ilm Sist Inf dan Tek Inform. 2023;6(1):26–33.

13. Suhari S, Faqih A, Basysyar FM. Sistem Informasi Kepegawaian Mengunakan Metode Agile Development di CV. Angkasa Raya. J Teknol dan Inf. 2022;12(1):30–45.

14. da Camara R, Marinho M, Sampaio S, Cadete S. How do Agile Software Startups deal with uncertainties by Covid-19 pandemic? Int J Softw Eng Appl. 2020;11(4):15–34.

15. Agustina R, Magdalena R, Pratiwi NKC. Klasifikasi Kanker Kulit menggunakan Metode Convolutional Neural Network dengan Arsitektur VGG-16. ELKOMIKA J Tek Energi Elektr Tek Telekomun Tek Elektron. 2022;10(2):446.

16. Xu M, Yoon S, Fuentes A, Park DS. A Comprehensive Survey of Image Augmentation Techniques for Deep Learning. Pattern Recognit [Internet]. 2023;137:109347. Available from: https://doi.org/10.1016/j.patcog.2023.109347

17. Natsir AMFM, Achmad A, Hazriani H. Klasifikasi Ikan Tuna Layak Ekspor Menggunakan Metode Convolutional Neural Network. J Ilm Sist Inf dan Tek Inform. 2023;6(2):172–83.

18. Penira A, Zahara A, Ramadhani M, Amin ML. Analisa Dan Perancangan Sistem E-Claim Pada Pt Asuransi Jiwa Syariah Bumiputera Cabang Medan. JTIK (Jurnal Tek Inform Kaputama). 2020;4(1):1–6.

19. Fauzan R, Siahaan D, Rochimah S, Triandini E. A Different Approach on Automated Use Case Diagram Semantic Assessment. Int J Intell Eng Syst. 2021;14(1):496–505.

20. Gedam MN, Meshram BB. Proposed Secure Activity Diagram for Software Development. Int J Adv Comput Sci Appl. 2023;14(6):671–80.

21. Setiawan PR, Ramadhan RA, Labellapansa A. Pelatihan Pemrograman Flutter. J Pengabdi Masy dan Penerapan Ilmu Pengetah. 2022;3(1):22–7.

22. Kusumo LN, Wijanto MC, Tan R, Royandi Y. Implementasi Realtime Cloud Service dalam Pengelolaan Nilai Tugas Akhir Mahasiswa. J Tek Inform dan Sist Inf. 2023;9(2):1–10.

23. Suprihanto S, Awaludin I, Fadhil M, Zulfikor MAZ. Analisis Kinerja ResNet-50 dalam Klasifikasi Penyakit pada Daun Kopi Robusta. J Inform. 2022;9(2):116–22.

24. Arnold M, Singh D, Laversanne M, Vignat J, Vaccarella S, Meheus F, et al. Global burden of cutaneous melanoma in 2020 and projections to 2040. JAMA Dermatol [Internet]. 2022;158(5):495–503. Available from: https://doi.org/10.1001/jamadermatol.2022.0160.

25. Indonesia Cancer Care Community. Sekilas kanker kulit [Internet]. ICCC; [cited 2025 Jan 6]. Available from: https://iccc.id/sekilas-kanker-kulit

26. Novaliendry D, Ardi N, Yang CH. Development of a Semantic Text Classification Mobile Application Using TensorFlow Lite and Firebase ML Kit. Journal Européen des Systèmes Automatisés. 2024;57:1603–11. Available from: https://doi.org/10.18280/jesa.570607

27. Ridhani D, Krismadinata K, Novaliendry D, Ambiyar, Effendi H. Development of an Intelligent Learning Evaluation System Based on Big Data. Data and Metadata. 2024;3. Available from: https://doi.org/10.56294/dm2024.569

FINANCING

The authors did not receive financing for the development of this research.

CONFLICT OF INTEREST

The authors declare that there is no conflict of interest.

AUTHORSHIP CONTRIBUTION

Conceptualization: Ihsanul Insan Aljundi, Dony Novaliendry, Yeka Hendriyani, Syafrijon.

Data curation: Ihsanul Insan Aljundi, Dony Novaliendry.

Research: Ihsanul Insan Aljundi, Dony Novaliendry.

Methodology: Ihsanul Insan Aljundi, Dony Novaliendry.

Software: Ihsanul Insan Aljundi.

Validation: Dony Novaliendry, Yeka Hendriyani, Syafrijon.

Drafting - original draft: Ihsanul Insan Aljundi.

Writing - proofreading and editing: Ihsanul Insan Aljundi, Dony Novaliendry.